MM Labs Uncovers the Biases of Image Recognition

Do you see what I see? The game “I spy” is an excellent exercise in perception, where players take turns guessing an object that someone in the group has noticed. And much like how one player’s focus might be on an object totally unnoticed by another, artificial intelligences can also notice entirely different things in a single photo. Hoping to see through the eyes of AI, MediaMonks Labs developed a tool that pits leading image recognition services against one another to compare what they each see in the same image—try it here.

Image recognition is when an AI is trained to identify or draw conclusions about what an image depicts. Some image recognition software tries to identify everything in a photo, as when a phone automatically organizes photos without the user having to tag them manually. Other software is more specialized, like facial recognition software trained to recognize not just a face, but perhaps even the person’s identity.

This sort of technology gives your brand eyes, enabling it to react contextually to the environment around the user. Whether it be identifying possible health issues before a doctor’s visit or identifying different plant species, image recognition is a powerful tool that further blurs the boundary between user and machine. “In the market, convenience is important,” says Geert Eichhorn, Innovation Director at MediaMonks. “If it’s easier, people are willing to pick up and try. This has the potential to be that simple, because you only need to point your phone and press a button.”

You could even transform any branded object into a scavenger hunt. “What Pokemon Go did for GPS locations, this can do for any object,” says Eichhorn. “Your product on the store shelf or in the world can become triggers for compelling experiences.”

Uncovering the Bias in AI

For a technology that’s so simple to use, it’s easy to forget the mechanics of how image recognition works. Unfortunately, this can lead to an unequal experience among users, with serious implications: most facial recognition algorithms still struggle to recognize the faces of Black people as accurately as those of white people, for example.

Why does this happen? Image recognition models can only identify what they’re trained to see. How should an AI know the difference between dog breeds if they were never identified to it? Just as humans draw conclusions based on their experiences, each image recognition model will interpret the same image differently based on its data set. The concern around this kind of bias is twofold.

First, there’s the aforementioned concern that it can provide an unequal experience for users, particularly when it comes to facial recognition. Developers must ensure they power their experience with a model capable of recognizing a diverse audience.

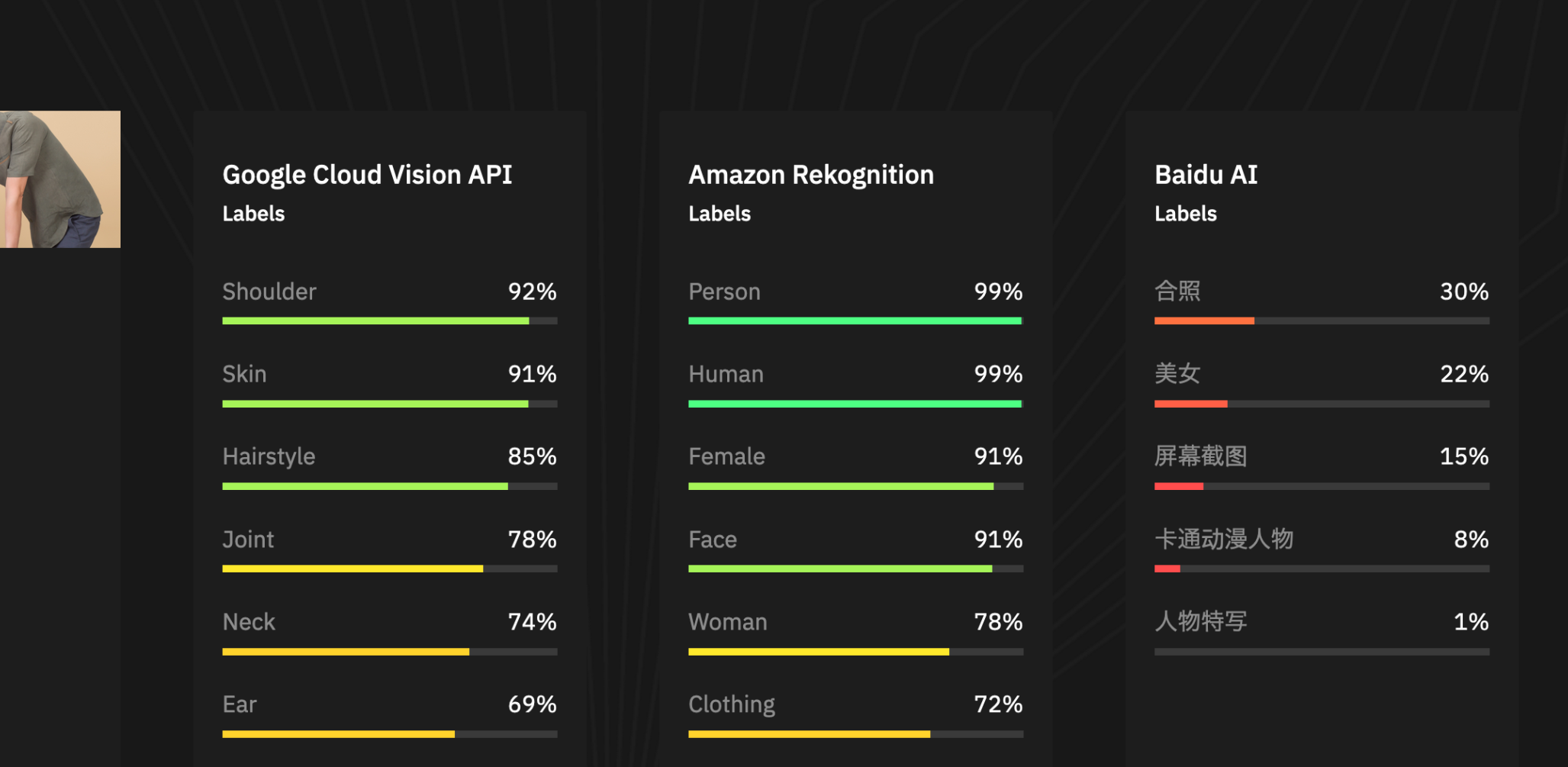

As we see in the image above, Google is looking for contextual things in the event photo, while Amazon is very sure that there is a person there.

Second, brands and developers must carefully consider which model best supports their use case; an app that provides a dish’s calorie count by snapping a photo won’t be very useful if it can’t differentiate between different types of food. “If we have an idea or our client wants to detect something, we have to look at which technology to use—is one service better at detecting this, or do we make our own?” says Eichhorn.

Seeing Where AI Doesn’t See Eye-to-Eye

Machine learning technology functions within a black box, and it’s anyone’s guess which model is best at detecting what’s in an image. As technologists, our MediaMonks Labs team isn’t content to make assumptions, so they built a tool that offers a glimpse at what several of the major image recognition services see when they view the same image, side-by-side. “The goal for this is discovering bias in image recognition services and to understand them better,” says Eichhorn. “It also shows the potential of what you could achieve, given the amount of data you can extract from an image.”

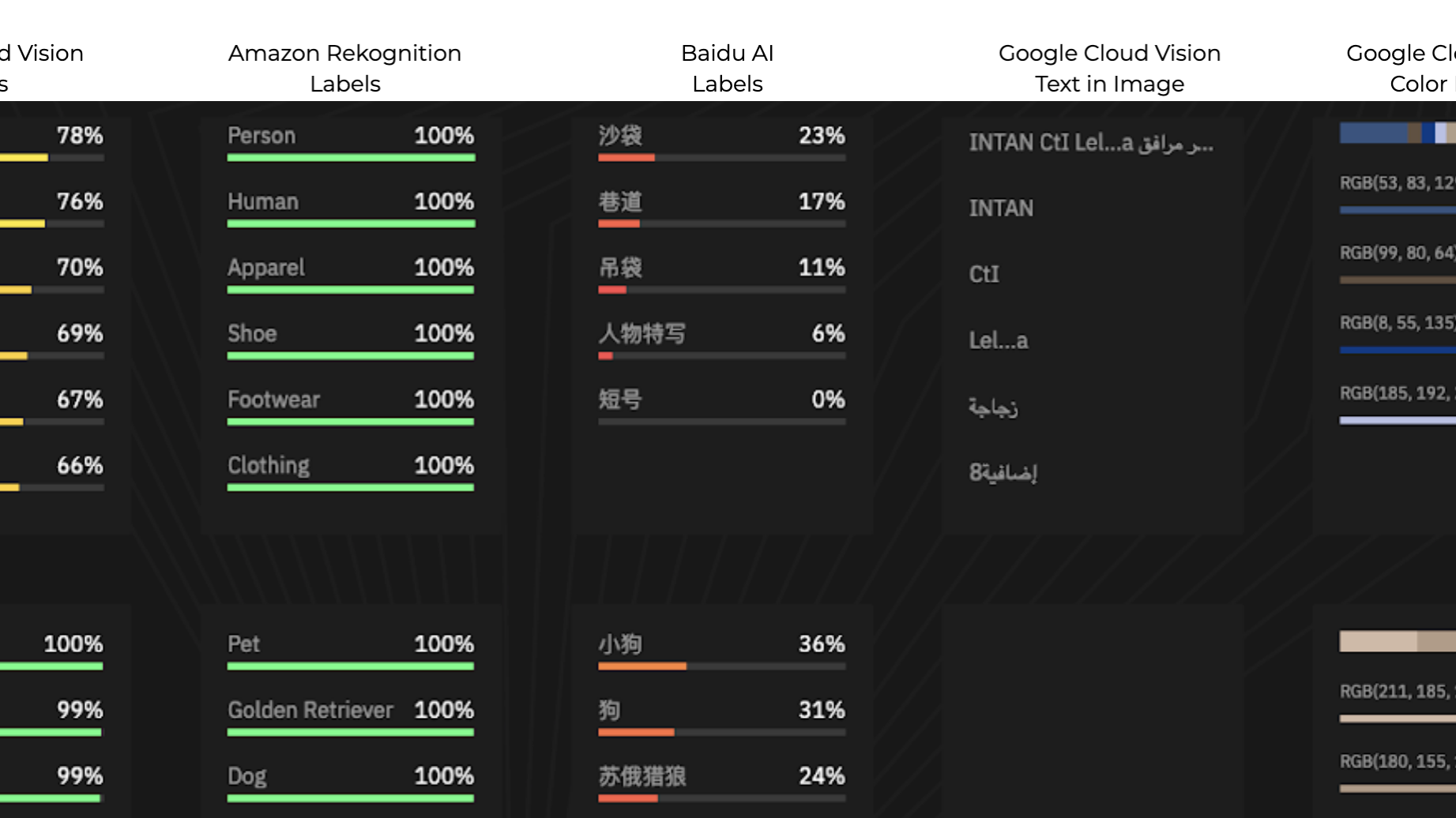

Here’s how it works. The tool lists the objects and actions detected by Google Cloud Vision, Amazon Rekognition and Baidu AI, along with each AI’s confidence in what it sees. By toying around with the tool, users may observe differences in what each model responds to, or fails to respond to. For example, Google Cloud Vision might focus more on contextual details, like what’s happening in a photo, whereas Amazon Rekognition focuses more on people and things.
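To make the side-by-side idea concrete, here is a minimal sketch of how label lists from several services could be compared. It is not the Labs tool's actual code: the `SAMPLE_RESULTS` data, the `compare_labels` helper and the confidence threshold are all assumptions made up for illustration, and no real service API is called.

```python
from typing import Dict, Set, Tuple

# Hypothetical sample output: label -> confidence (0.0 to 1.0) per service.
# These values are invented for illustration, not real API responses.
SAMPLE_RESULTS: Dict[str, Dict[str, float]] = {
    "Google Cloud Vision": {"event": 0.91, "crowd": 0.84, "person": 0.78},
    "Amazon Rekognition": {"person": 0.99, "human": 0.98, "crowd": 0.71},
    "Baidu AI": {"person": 0.88, "stage": 0.64},
}


def compare_labels(
    results: Dict[str, Dict[str, float]], min_confidence: float = 0.5
) -> Tuple[Set[str], Dict[str, Set[str]]]:
    """Return (labels every service reported, labels unique to each service)."""
    # Keep only labels at or above the confidence threshold, lowercased.
    kept = {
        service: {
            label.lower()
            for label, score in labels.items()
            if score >= min_confidence
        }
        for service, labels in results.items()
    }
    shared = set.intersection(*kept.values()) if kept else set()
    unique = {}
    for service, labels in kept.items():
        # Everything any *other* service reported.
        others = set().union(*(v for k, v in kept.items() if k != service))
        unique[service] = labels - others
    return shared, unique


shared, unique = compare_labels(SAMPLE_RESULTS)
print("Seen by all:", sorted(shared))
for service, labels in unique.items():
    print(f"Only {service}:", sorted(labels))
```

Raising `min_confidence` shrinks the overlap quickly in this made-up data, which mirrors the point of the tool: agreement between services depends heavily on how sure each one has to be before its answer counts.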

This also showcases the variety of things the software can recognize, each with exciting creative implications: the color content of a user’s surroundings, for example, might function as a mood trigger. We collaborated with DDB and the airline Lufthansa to build a Cloud Vision-powered web app that recommends a travel destination based on the user’s photographed surroundings. A photo of a burger, for instance, might return a recommendation to try healthier food at one of Bangkok’s floating markets.

The Lufthansa project is interesting to think about in the context of this tool, because expanding it to the Chinese market required switching the image recognition from Cloud Vision to something else, as Google products aren’t utilized in the country. This gave the team the opportunity to look into other services like Baidu and AliYun, prompting them to test each for accuracy and response time. It showcases in very real terms why and how a brand would make use of such a comparison tool.
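As a rough illustration of what testing a service for accuracy and response time might look like, the sketch below times a classifier over a small labeled set. The `benchmark` helper, the `fake_service` stand-in and the sample file names are all hypothetical; the article does not describe how the team actually ran its tests, and a real comparison would call each provider's API in place of the stand-in.

```python
import time
from typing import Callable, Iterable, Tuple


def benchmark(
    classify: Callable[[str], str], samples: Iterable[Tuple[str, str]]
) -> Tuple[float, float]:
    """Return (accuracy, mean latency in seconds) over (image, expected) pairs."""
    correct = 0
    total = 0
    elapsed = 0.0
    for image, expected in samples:
        start = time.perf_counter()
        predicted = classify(image)
        elapsed += time.perf_counter() - start
        correct += int(predicted == expected)
        total += 1
    if total == 0:
        raise ValueError("benchmark needs at least one sample")
    return correct / total, elapsed / total


def fake_service(image: str) -> str:
    """Hypothetical stand-in for a real image recognition call."""
    known = {"burger.jpg": "burger", "beach.jpg": "beach"}
    return known.get(image, "unknown")


# Invented test set: (image name, label a human would assign).
SAMPLES = [("burger.jpg", "burger"), ("beach.jpg", "beach"), ("tree.jpg", "tree")]

accuracy, mean_latency = benchmark(fake_service, SAMPLES)
print(f"accuracy={accuracy:.2f}, mean latency={mean_latency * 1000:.3f} ms")
```

Running the same labeled set through each candidate service keeps the comparison fair, since differences in the numbers then come from the services and not from the images.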

“Not everyone can be like Google or Apple, who can train their systems based on the volume of photos users upload to their services every day,” says Eichhorn. “With this tool, we want to pull back the curtain to show people how this technology works.” With a better understanding of how machine learning is trained, brands can better envision the innovative new experiences they aim to bring to life with image recognition.
