How Facebook Built the Festival of the Future

This year’s Facebook Connect, one of the biggest annual events in digital innovation, went entirely virtual, compressing what’s typically a multi-day experience into a 10-hour live event augmented with 14 hours of on-demand content, which is no easy feat. But it’s no surprise that the event, which offers a close look at digital’s potential to connect people, serves as a model of how brands can build events that reflect not only the reality we live in today, but also how people will connect and collaborate digitally well into the future.

Because Facebook Connect is the only world conference dedicated to connecting people virtually through AR and VR, the event itself had to embody that promise. Partnering with Facebook, MediaMonks built an experience ecosystem that brought the event to life through livestreaming and Oculus Venues – beta early access. And through live chats and connection to developer discussion groups, attendees could interact and network throughout the event within Facebook’s social ecosystem.

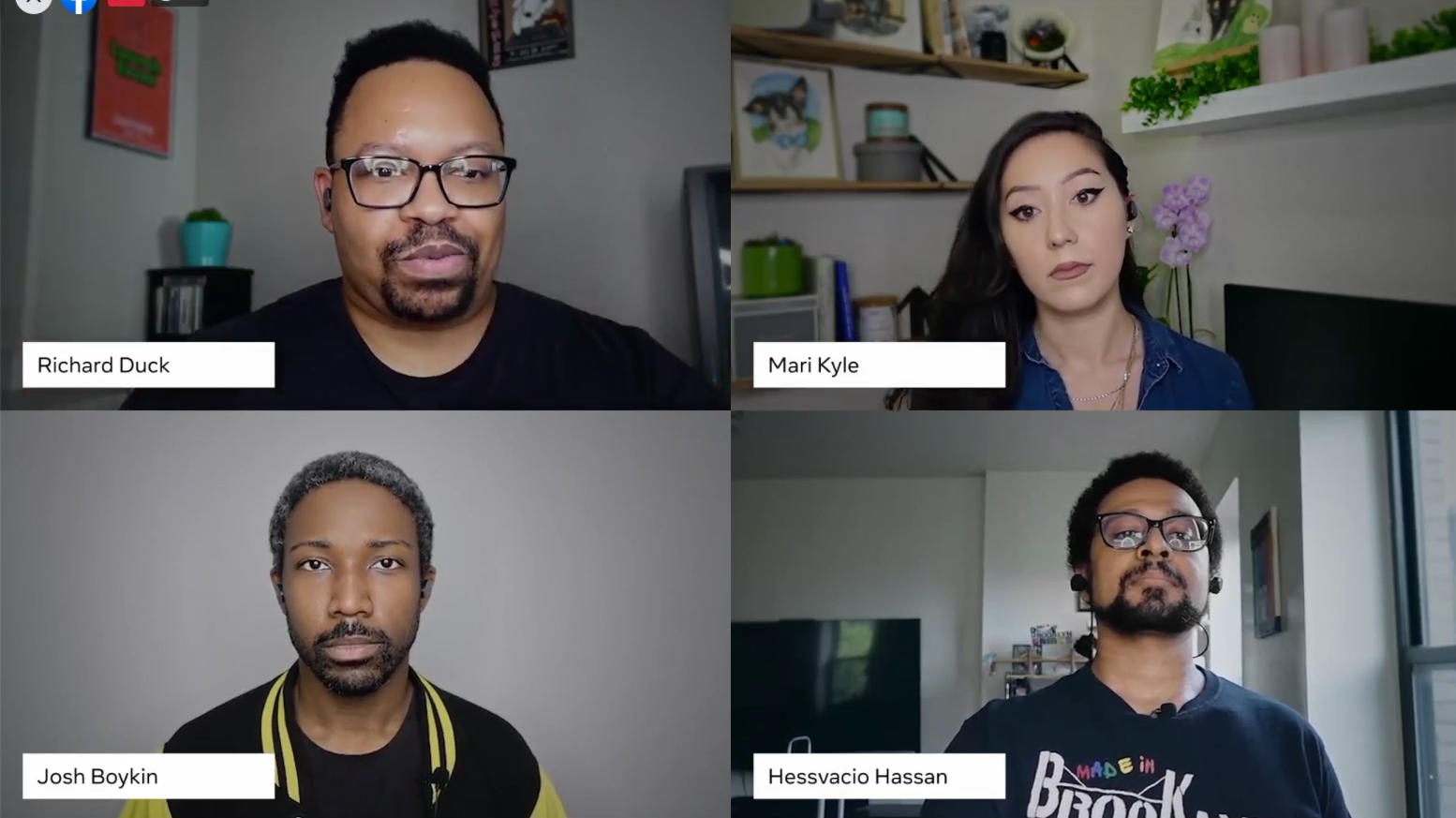

DE&I were important to the event, which featured a panel on diversity (photo above), a panel on accessibility and more.

Most importantly, the event was an example to other brands of how online events can turn digital into a true destination to meet, connect and play. While many have managed to create bombastic product reveals and virtual presentations despite the pandemic, Facebook and the MediaMonks team saw Facebook Connect as an opportunity to acknowledge the reality many of us are living and working within.

The event was a celebration of the work-from-home reality, and of how connection, collaboration and productivity are still achievable. And for the first time, Facebook Connect was open to attendees far and wide for free. Diversity and inclusion were key pillars to ensure the event lived up to future-forward standards, with features like live captions and speakers on important topics relevant to the social climate.

Together, these elements show that building a current virtual event isn’t about just translating a series of touchpoints to digital, but rather maintaining the essence of an event’s goals within an entirely new context and experience. Here’s how it happened at Facebook Connect.

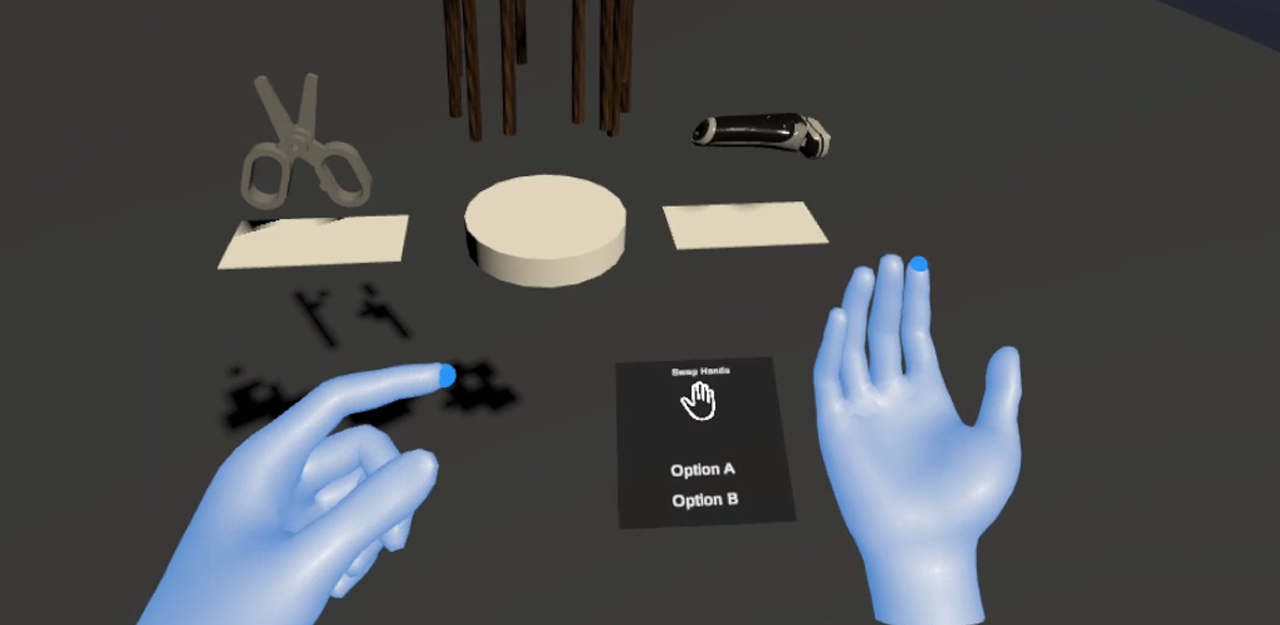

Reimagining the Product Showroom

The event kicked off with an early product reveal: the Oculus Quest 2. In a typical tradeshow setting, attendees would be able to get up close and personal to view (or even try on) the product. But this wasn’t a typical event; absent an in-person showroom floor, MediaMonks’ team of live experiential experts drip-fed exclusive, timed-release AR filters that activated on Instagram, allowing each viewer and attendee to explore new product features virtually. Invitations to “try on” the headset appeared via QR codes in interstitial segments between panels and talks.

The product’s reveal inspired coverage from outlets like The Verge and TechCrunch, and even analysis from The Motley Fool, which reported on Facebook’s belief in connecting people virtually via emerging technology. In addition to the new Oculus headset, Facebook announced a slew of other news including a VR office solution, research into a future pair of AR-enabled glasses, game announcements including Star Wars and Assassin’s Creed, and more.

By using emerging tech to highlight some of the features and possibilities of these technologies built by Facebook, the event achieves a new level of brand virtualization: essentially, building distinct environments and ecosystems that translate brand promise into digital experiences. While events are only an initial step to virtualize, this type of digital, tangible product showcase offers a peek at how brands can differentiate in their product reveals.

Enabling Excitement and Exclusivity Through Engagement

In-person events thrive on engagement and making connections. But digital ones may often lack this energy, relegating interaction to just a chat box. “We aimed for a level of two-way-interaction and built that into the system, feeding back on the energy of the audience,” says Ciaran Woods, EP Experiential & Virtual Solutions at MediaMonks. “That’s always something we’ve been pushing for in a livestream.”

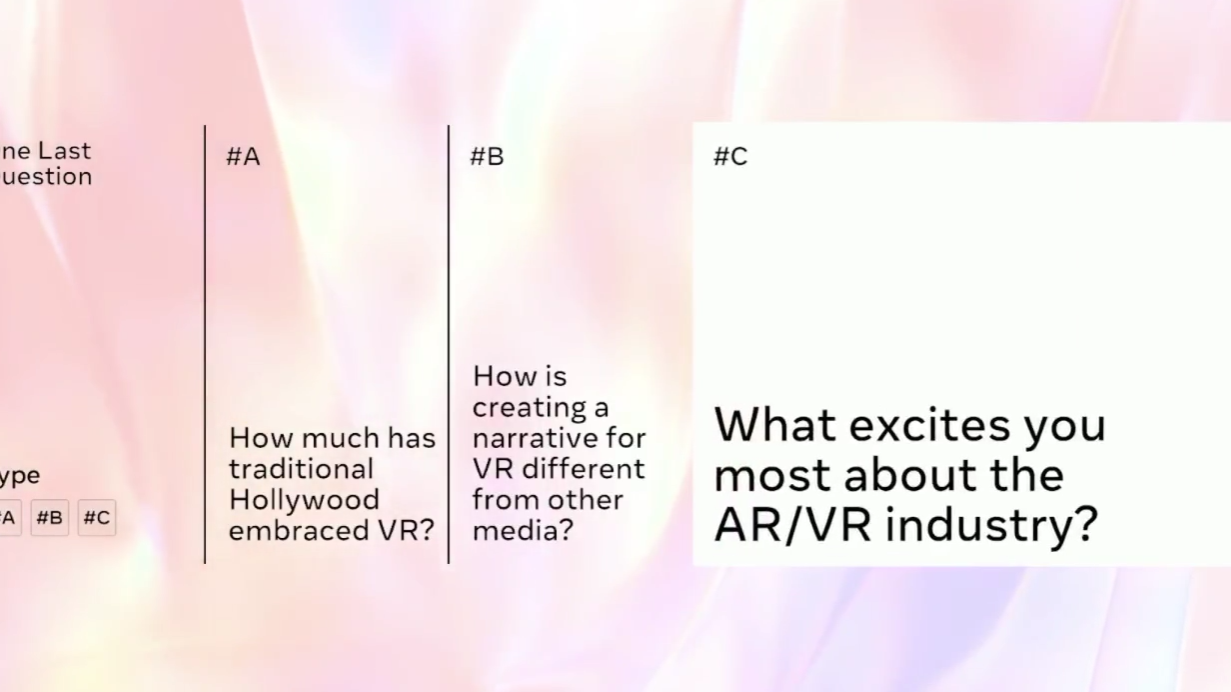

Viewers had the chance to select the last question that panelists and speakers were asked.

One of the key ideas behind Facebook Connect was to make the broadcast a real moment for audiences, rewarding those who took the time to sit down and participate live. This inspired the “one last question” at the end of talks and panels. Audiences were presented with three candidate questions for the speaker or panel and voted on which one to ask. As viewers voted, an on-screen tally showed results in real time, made possible by LiveXP, MediaMonks’ live storytelling tool, which enables a truer sense of interaction beyond just participating in the live chat.

Other immersive elements helped make Facebook Connect feel more tangible. One of the fun things about attending any event in person is taking some swag home with you. Shortly before and after the event, attendees could snag an exclusive Instagram filter that rewarded them with a personalized AR lanyard that serves as a memento of the experience. Finally, the event capped off with an exclusive talk from influential game developer John Carmack and an immersive Jaden Smith performance in VR.

Again, these features strive to put attendees “in the now.” A key challenge for digital events is evoking excitement and the feeling of being present in a shared experience. What’s the difference between watching live and watching an on-demand recording? How does the event experience differentiate itself from just another livestream or video call? Brands and event organizers must consider these questions to ensure touchpoints build on excitement, promote a sense of presence and add some exclusivity to the live experience.

Connecting a Cohesive Journey

A final challenge that digital events face is building a cohesive journey across the experience. Brands often rely on external platforms and tools to host their events, with the consumer journey sometimes spread across different environments (for example, registering through a form on one page, accessing the schedule on a different platform and watching the event on a social channel). Brands serve their audiences best by building an events ecosystem that connects the experience–from lead-up to sign-up to aftercare–through a cohesive thread.

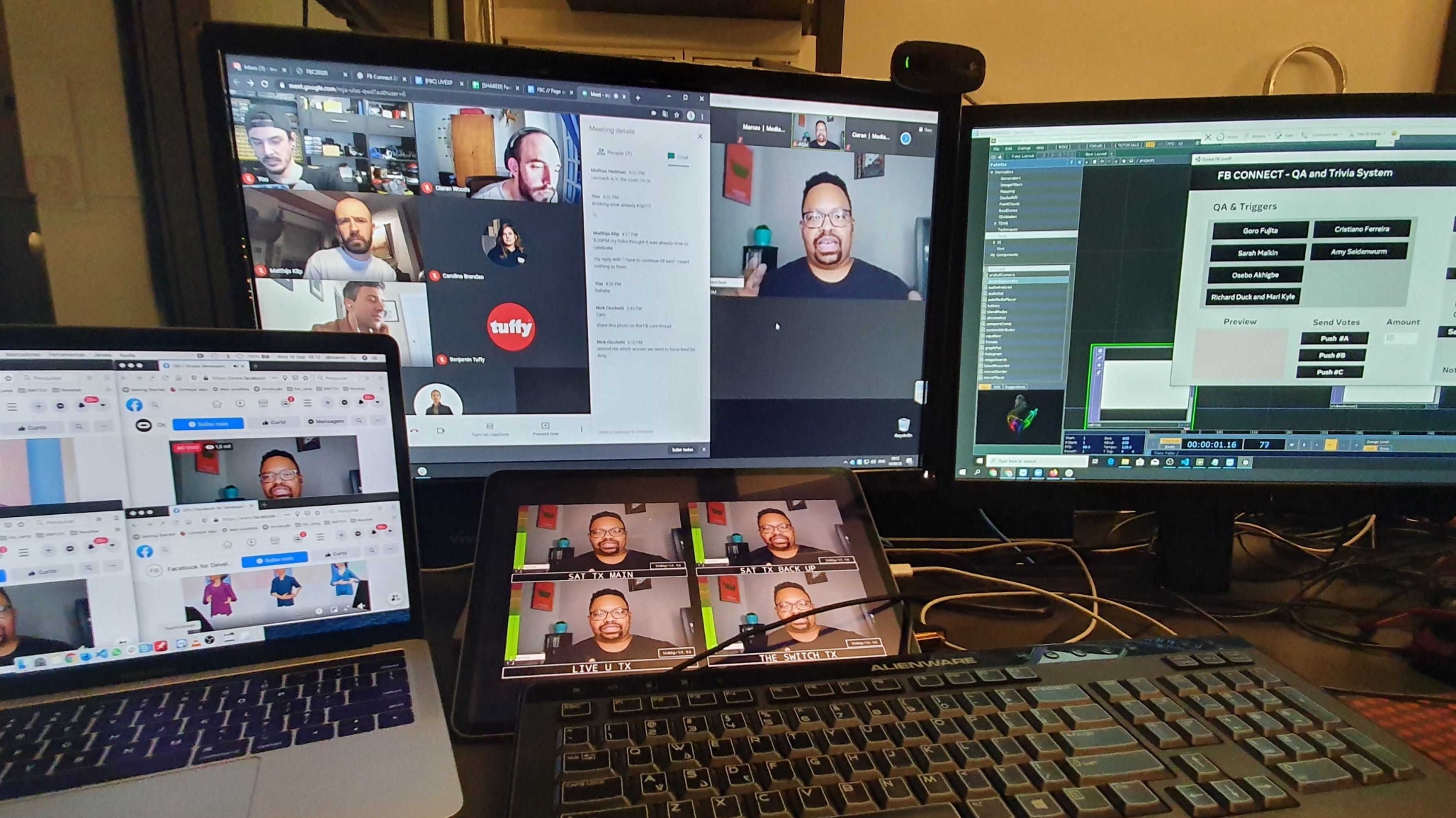

The crew worked behind the scenes and across borders with impressive setups to ensure things ran smoothly.

While Facebook Connect took place exclusively on Facebook platforms, bouncing between different touchpoints like Oculus Venues, Facebook Groups and AR filters on Instagram could have felt jarring if not done with elegance and skill. An impactful visual identity designed by MediaMonks made for a connected and cohesive journey from start to finish. The visual identity included not only the Facebook Connect logo, but also interstitials, animations, soundscapes and a hub page that helped attendees find what they needed.

Together, these features culminate in an experience that turns digital into a destination, inspiring and drawing together Facebook’s community of developers as they envision the future of technology. By connecting various examples of emerging technology into a cohesive experience, Facebook Connect offers a glimpse of the festival of the future, one capable of activating communities and strengthening brand-consumer relationships.
