Forrester Spotlights How to Power Up Production with Game Engine

With many consumers around the world still at home, brands’ need to show up for audiences digitally has never felt more urgent. This is especially true of immersive content and experiences that recapture some of what is lost when people can’t interact with a brand, a product or loved ones in person.

And with this need, a perhaps unlikely tool for marketers has emerged: game engines. The role of gaming in the marketing mix has also risen, providing new, engaging environments to meet consumers. Glu Mobile’s apartment decorating game Design Home, for example, lets players customize a home using real furniture from brands like West Elm and Pottery Barn—and is now even offering its own series of real-world products through its Design Home Inspired brand, effectively turning the game into a virtual retail showroom.

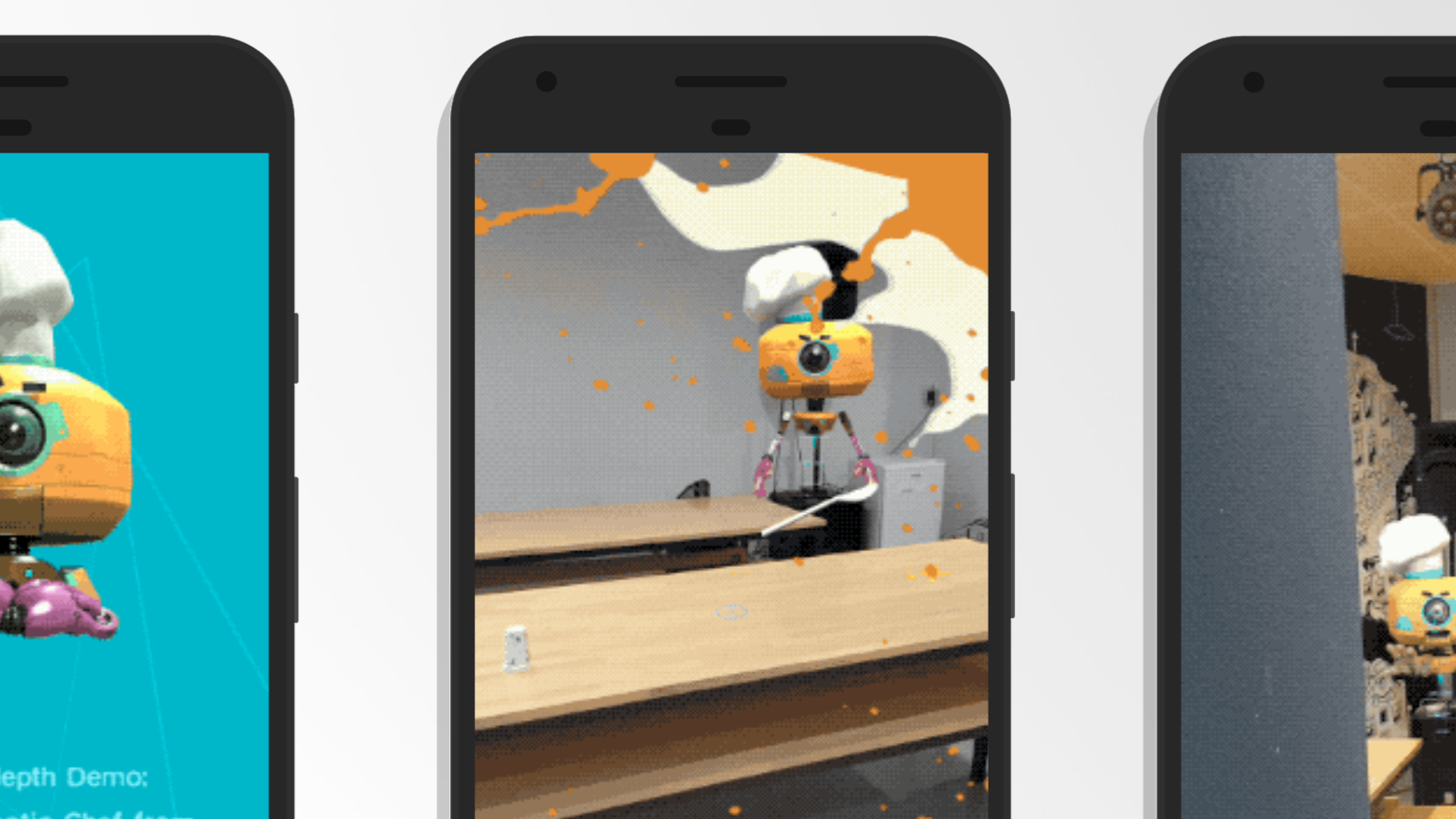

But game engines aren’t just for making content consumed in games. They also give brands an environment to build and develop 3D assets, both for internal use and to power a variety of virtualized experiences. We recently announced that we received an Epic MegaGrant to automate virtual tabletop production using Unreal Engine. We also use the Unity engine, which powers 53% of the top 1,000 mobile games on the App Store and Google Play Store, to build mobile and WebGL experiences for consumer audiences.

3D Adds a New Dimension to Content Production

In addition to serving increasingly digital user behavior, the use of 3D content has the potential to help brands build efficiency across the enterprise and customer decision journey. A new report from Forrester, “Scale Your Content Creation With 3D Modeling” by Ryan Skinner and Nick Barber, details how 3D content solves a critical challenge that brands face today: the need to keep up with demand for content while faced with dwindling budgets. “Particularly in e-commerce, marketers have learned they need product images to reflect every angle, every variation, and a multitude of contexts; without updated and detailed imagery, engagement and sales suffer,” the authors write.

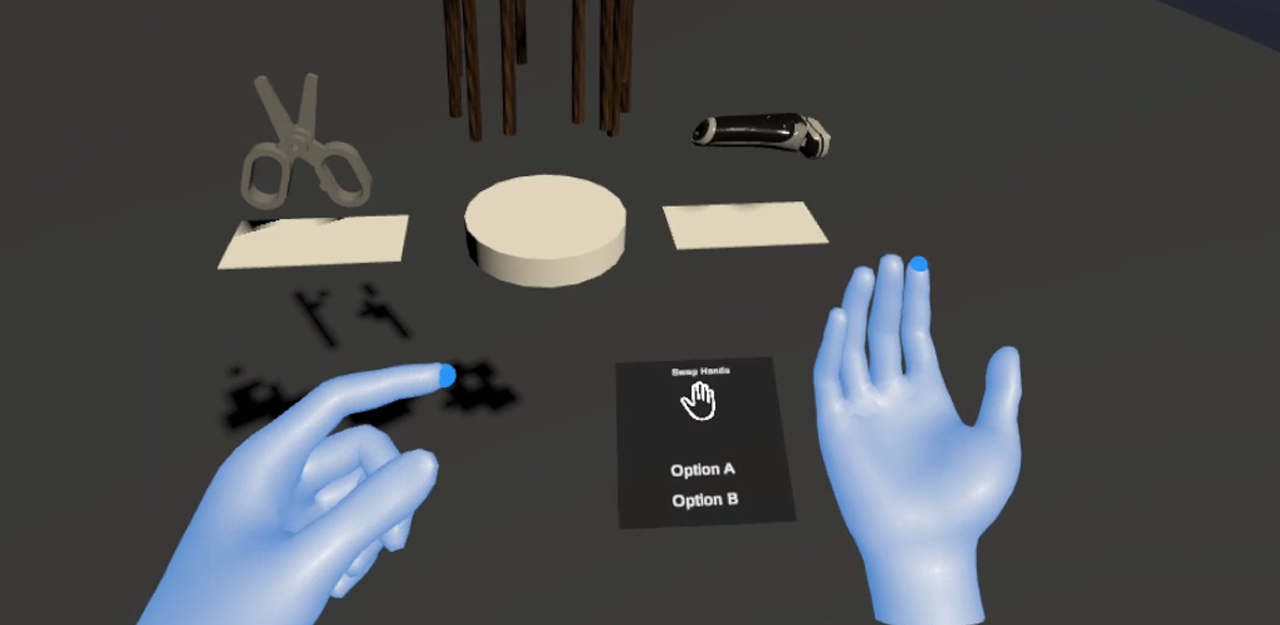

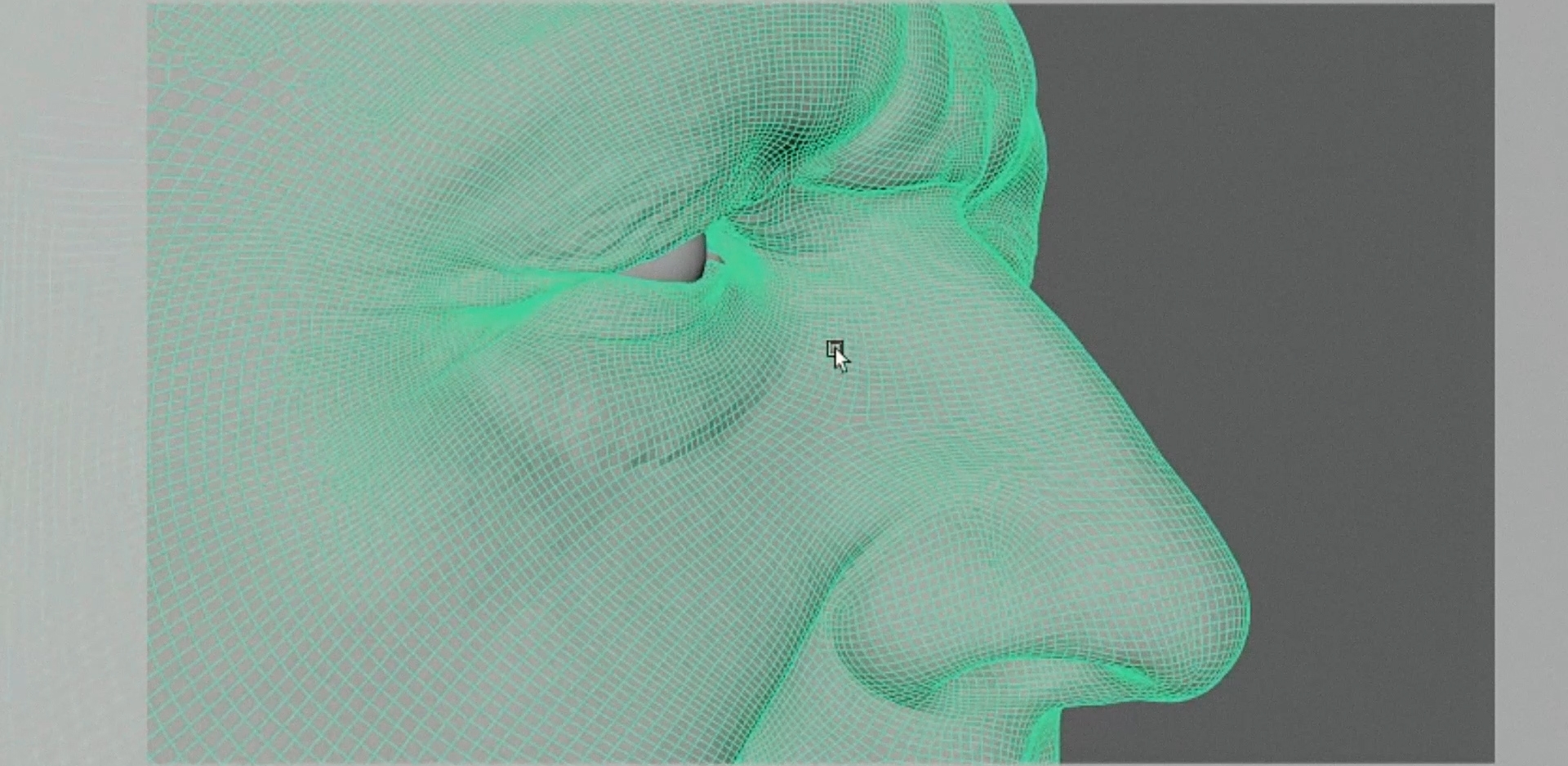

Pick your flavor: game engines let brands tweak and change assets with ease and speed.

One solution mentioned in the report is building a CGI-powered production line. “This is very top of mind for us right now,” MediaMonks Founder Wesley ter Haar says in the report. That sentiment is reinforced by how we’re already powering creative production at scale with tools like Unreal Engine and Unity, as mentioned above.

“What’s exciting about real-time 3D is that it ticks a bunch of boxes for a brand,” says Tim Dillon, SVP Growth at MediaMonks, whose primary focus is our game engine-related work. “You can use it in product design, in your marketing, in retail innovation; it’s touching so many different end use cases for brands.” A CAD model used internally for product design, for example, could also be used in virtual tabletop photography, in a retail AR experience, in 3D display ads and more. Each time it is reconfigured and recontextualized, the same model serves another of the brand’s goals in producing content and building experiences.

When it comes to content, game engines make it easier for teams to create assets at scale through variations in lighting, environment or color—especially when augmented by machine learning and artificial intelligence. “When creating content in real time, brands not only make content faster but can react and adapt to consumer needs faster, too,” says Dillon. “Things like 3D variations, camera animation and pre-visualization become much faster to achieve—and in some cases more democratic too, by putting new 3D tools in our client teams’ hands to make these choices together,” he says.

The Genesis car configurator lets users view their customizations in real time.

A case in point is the car configurator we built in Unity for Genesis, which Unity covered in its recent report “25 Ways to Extend Real-Time 3D Across Your Enterprise.” The web-based configurator offers a car customization experience as detailed and fluid as you’d find in a video game. Consumers can see not only what their custom model would look like with different features, but also how it looks under different environmental conditions such as time of day, all in real time.

Making a Lasting Impact Through Immersive Moments

With greater adoption of immersive storytelling technologies and ultra-fast 5G connections, we are entering a virtualized era in which a persistent 3D layer can be placed across real-world environments. This is already possible with Unity technology and Google’s Cloud Anchors, which anchor augmented reality content to specific locations so that people can return and interact with it over time. Consider, for example, a virtual retail environment that never closes and provides personalized service to each customer.

These experiences have become all the more relevant with the pandemic. In the Forrester report mentioned above, ter Haar says: “With COVID, we’re seeing greater interest to demo in 3D. The tactile and physical nature of seeing something makes it easier to buy.”

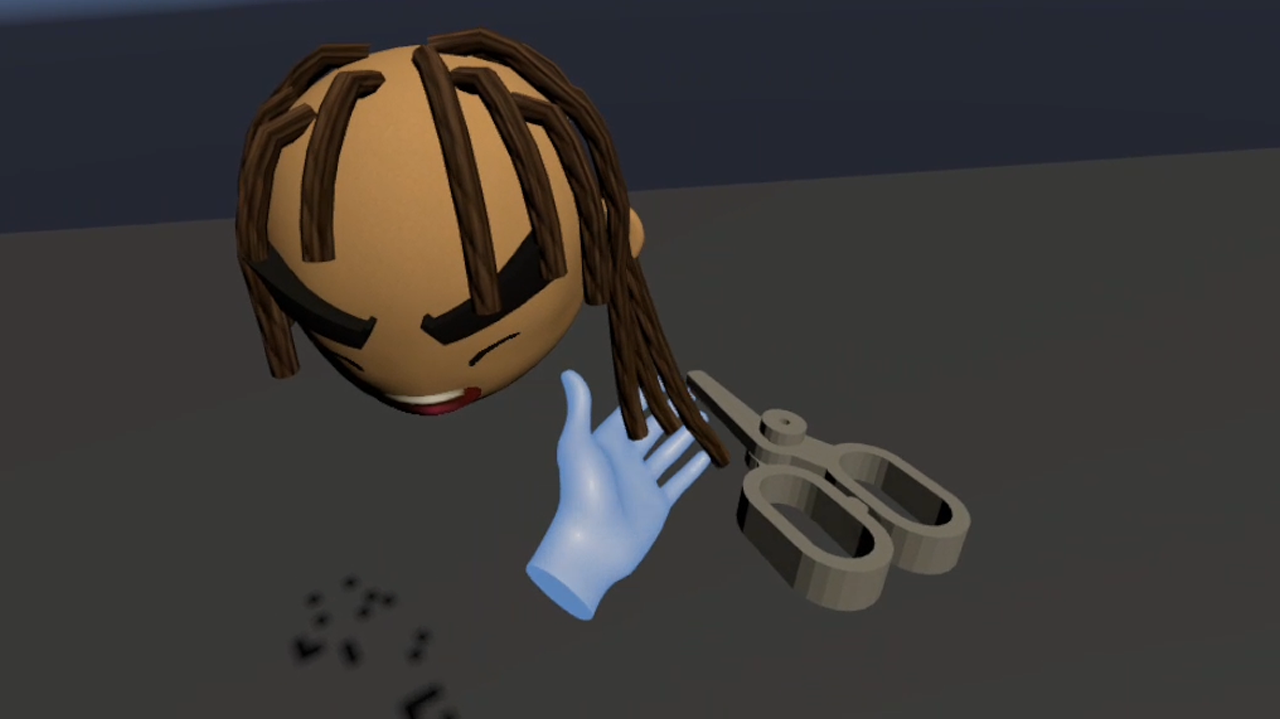

But perhaps more important for brands is that immersive experiences have the power to create real, lasting memories—a focus of a recent talk by Quentin de la Martinière (Executive Producer, Extended Realities at MediaMonks) and Ron Lee (Technical Director at MediaMonks) at China Unity Tech Week.

Spacebuzz takes students on an out-of-this-world journey through VR.

“We start from the strategy of who you want to engage with,” says Lee, delving into the storytelling potential of 3D content. “From there, we try to understand the vision we want to build to grab the user’s attention and put them in the world.” This includes choosing the best medium for a 3D experience: augmented reality, virtual reality, mixed reality or the web. By making the right choice, brands can build experiences that explain while they entertain.

Lee and de la Martinière presented Spacebuzz as an example of how immersive experiences can have a lasting impact. In a 15-minute VR experience built in Unity, schoolchildren are taken to space, where they experience the “overview effect”: a humbling shift in awareness of Earth after viewing it from a distance.

“The technology and the story bring together the vision and the message of the experience,” says Lee. “Building that immersive environment in Unity and translating this information on a deeper level creates real memories for the kids who engage with it.” Likewise, brands can leave a memorable mark on consumers through 3D content. “These extremely personalized experiences allow the brand to leave a deep impression on audiences and intensify brand favorability,” Lee says.

From streamlining production to powering experiences across a range of consumer touchpoints, the value of 3D content is building for brands. Working closely with the developers of leading game engines that enable these experiences, like Unity and Unreal Engine, we’re helping brands add an entirely new dimension to their content and storytelling for virtualized audiences.
