Angelica: Hey everyone! Welcome to Scrap The Manual, a podcast where we prompt “aha” moments through discussions of technology, creativity, experimentation, and how all those work together to address cultural and business challenges. My name is Angelica.

Rushali: And my name is Rushali. We are both creative technologists with Labs.Monks, which is an innovation group within Media.Monks with a goal to steer and drive global solutions focused on technology and design evolution.

Angelica: Today, we have a new segment called “How Do We Do This?” where we give our audience a sneak peek into everyday life at Labs and open up the opportunity for our listeners to submit ideas or projects. And we figure out how we can make it real. We'll start by exploring the idea itself, the components that make it unique and how it could be further developed, followed by doing a feasibility check. And if it isn't currently feasible, how can we get it there?

Which leads us to today's idea submitted by Maria Biryukova, who works with us in our Experiential department. So Maria, what idea do you have for us today?

Maria: So I was recently playing around with this app that allows you to scan in 3D any objects in your environment. And I thought: what if I could scan anything that I have around me—let's say this mic—upload it live and see it coming to life on the XR stage during the live show?

Angelica: Awesome, thanks Maria for submitting this idea. This is a really amazing creative challenge and there's a lot of really good elements here. What I like most about this idea, and is something that I am personally passionate about, is the blending of the physical world with the digital world, because that's where a lot of magic happens.

That's where, when AR first came out, people were like, “Whoa, what's this thing that's showing up right in front of me?” Or in VR when they brought these scans of the real world into the very initial versions of Google Cardboard headsets, that was like the, “Whoa! I can't believe that this is here.”

So this one's touching upon the physicality of a real object that exists in the real world…someone being able to scan it and then bring it into a virtual scene. So there's this transcending of where those lines are, which are already pretty blurred to begin with. And this idea continues to blur them, but I think in a good way, and in a way that guests and those who are part of the experience can have a role where they go beyond being a passive observer and become an active participant in it.

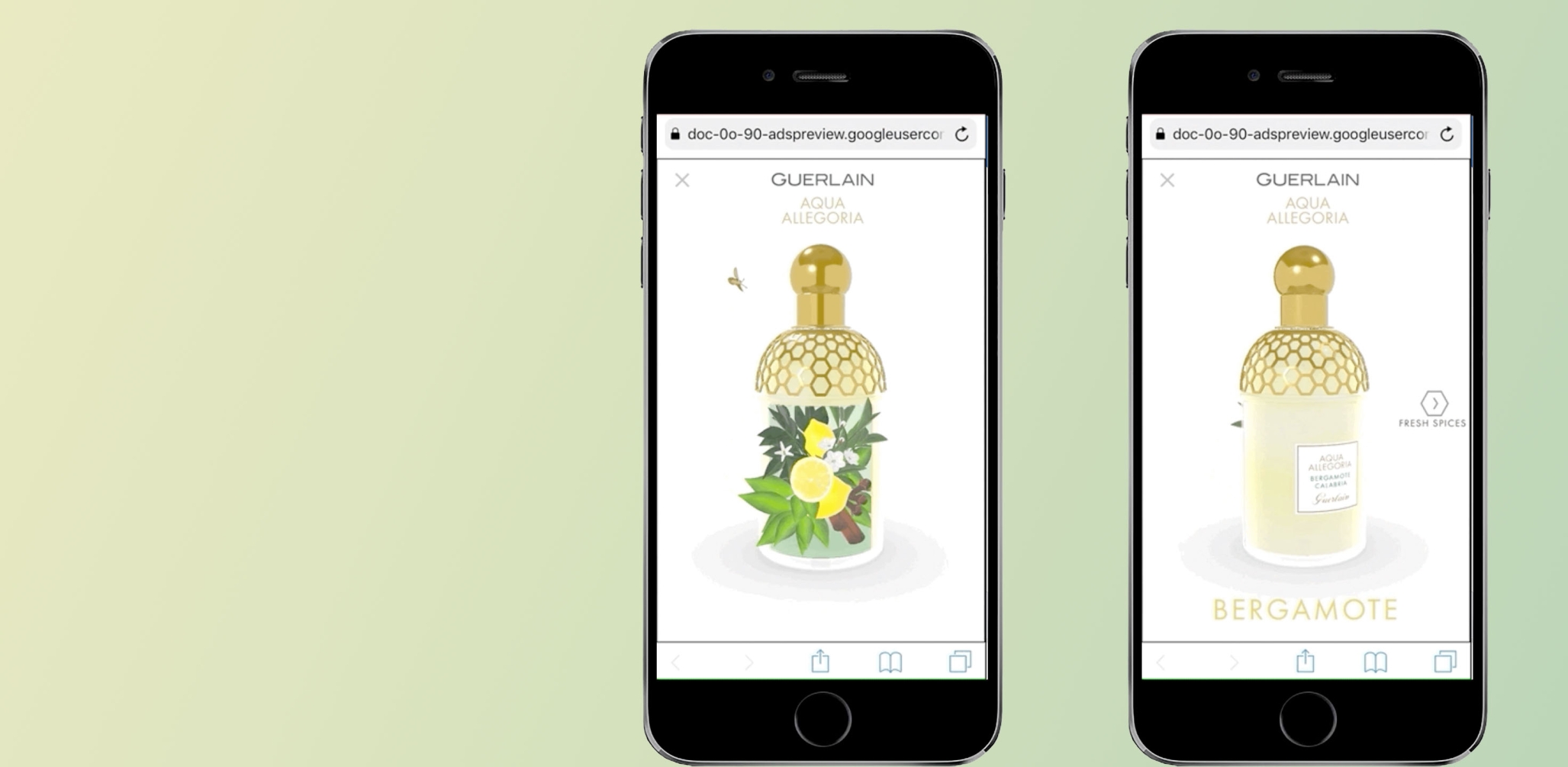

We see this a little bit with WAVE, where they have a virtual space and people are able to go to this virtual concert and essentially pay like $5 to have a little heart get thrown to Justin Bieber's head. Lovingly, of course, but you get the point. Where this one, it takes it another step further in saying, “Okay, what if there's something in my environment?”

So maybe there is an object that pertains to the show in a particular way. Let's say that it's like a teddy bear. And all the people who have teddy bears around the world can scan their teddy bear and put it into this environment. So they're like, “Oh, that's my teddy bear.” Similar to when people are on the jumbotron during sports events and they're like, “Hey, that's my face up there.” And then they go crazy with that. So it allows more of a two-way interaction, which is really nice here.

Rushali: Yeah. That's the part that seems interesting to me. As we grow into this world where user-generated content is extremely useful and we start walking into the world of the metaverse, scanning and getting 3D objects that personally belong to you—or a ceramic clay thing, or a pot that you made yourself—and being able to bring it into the virtual world is going to be one of the most important things. Because right now, in Instagram, TikTok, or with any of the other social platforms, we are mostly generating content that is 2D, or generating content that is textual, or generating audio, but we haven't explored extremely fast 3D content generation and exchange the way that we do with pictures and videos on Instagram. So we discussed the “why.” It's clearly an interesting topic, and it's clearly an interesting idea. Let's get into the “What.”

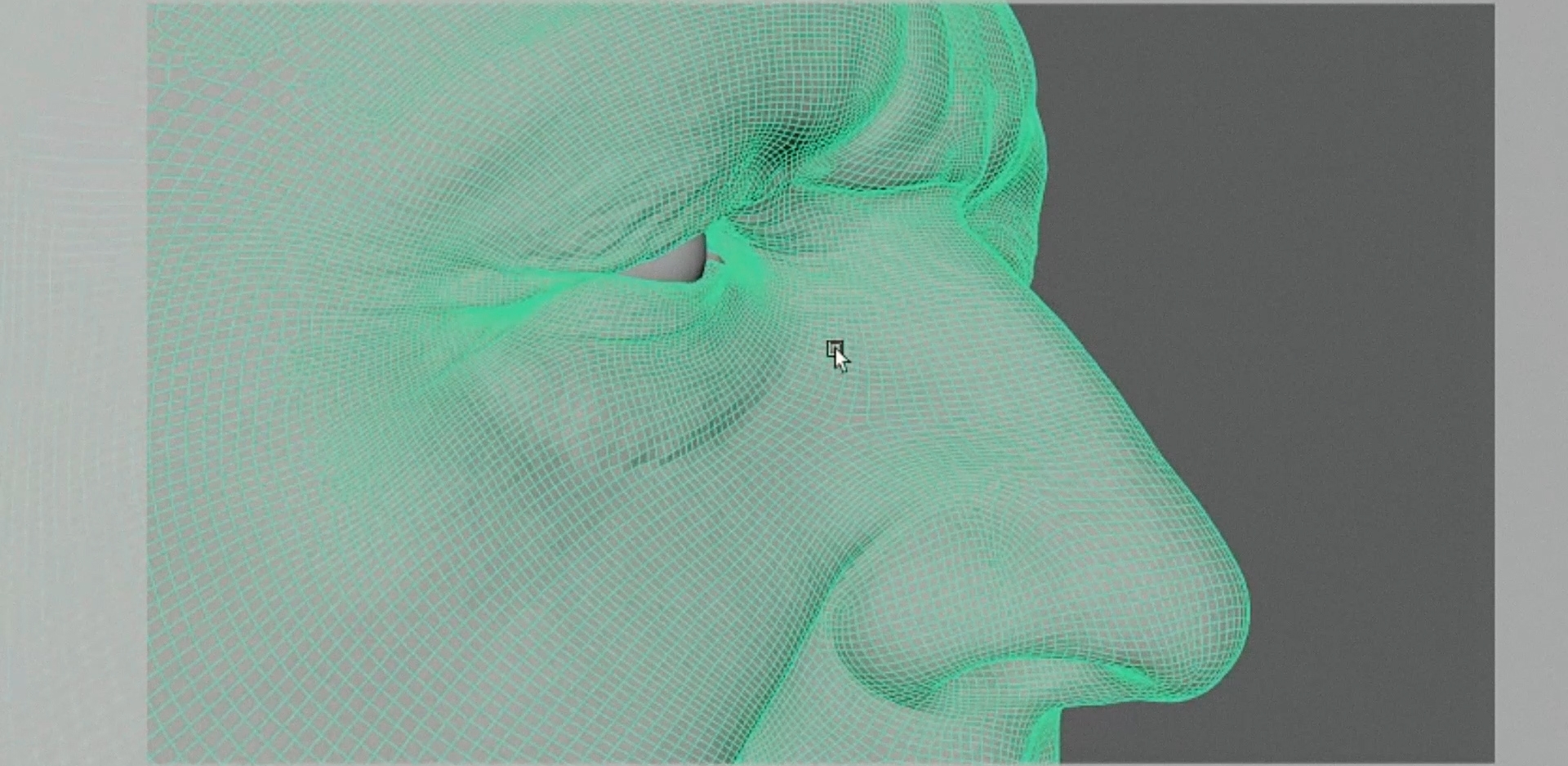

Angelica: Yeah. So from what we're hearing on this idea, we have scanning the object, which will connect to 3D scanning and photogrammetry, which we can get a little bit into the differences between the two different types of technologies. And then when the scan is actually added into the environment, is it cleaned up? Is it something where it acts as its own 3D model without any artifacts from the environment that it was originally scanned in? And a part of that is also the compositing. So making sure that the object doesn't look like a large ray of sunlight when the event is very moody and dark. It needs to fit within the scene that it's within.

And we're hearing content moderation, in terms of making sure that when guests of any kind become a little bit more immature than the occasion requires, that it filters out those situations to make sure that the object that needs to be scanned in the environment is a correct one.

Rushali: Absolutely. What was interesting while you were walking through all the different components was the way that this idea comes together: it's just so instantaneous and real time that we need to break down how to do this dynamically.

Angelica: Yeah. And I think that's arguably the most challenging part, aside from the content moderation aspect of it. Let's use photogrammetry as an example. Photogrammetry is the process of taking multiple pictures of an object from as many sides as you can. An example of this is with Apple's Object Capture API. You just take a bunch of photos. It does its thing, it processes it, it thinks about it. And then after a certain amount of time (sometimes it's quick, sometimes it's not…depends on how high quality it needs to be), it'll output a 3D model that it has put together based on those photos.

Rushali: Yeah. So the thing that I wanted to add about photogrammetry, which you described very well, is that in just the last five years, photogrammetry has progressed from something very basic to something outrageously beautiful very quickly. And one of the big reasons for that is how depth sensing capability came in and became super accessible.

Imagine someone standing on a turntable and taking pictures from each and every angle and we are turning the turntable really slowly and you end up having some 48,000 pictures to stitch together and then create this 3D object. But a big missing piece in this puzzle is the idea of depth. Like is this person's arm further away or closer? And when the depth information comes in, suddenly it becomes a lot easier to build a 3D object with that depth information. So iPhones having a depth sensing camera, as of last year and the year before that, has really enhanced the capabilities.

Angelica: Yeah, that's a good point. There is an app that had been doing this time-intensive and custom process for a very long time. But then when Apple released the Object Capture API, they said, “Hey, actually, we're going to revamp our entire app using this API.” And they even say that it's a better experience for iPhone users because of the combination of the Object Capture API and leveraging the super enhanced cameras that are now coming out of just a phone.

Android users, you're not left out here. Some Samsung phones, like the Galaxy S20 and up, have a feature embedded right in the phone software where you can do the same process that I was mentioning earlier about a teddy bear.

There's a test online where someone has a teddy bear to the left of a room, they scan it and then they're able to do a point cloud of the area around it. So they could say, “Okay, this is the room. This is where the walls are. This is where the floor is.” And then paste that particular object that they just scanned into the other corner of the room and pin it or save it. So then if they leave the app, come back in, they can load it, and that virtual object is still in the same place because of the point cloud scanning their room, their physical room, and they put it right back where it was. So it's like you got a physical counterpart and a digital counterpart. It's more than just iPhones that are having these enhanced cameras. That experience is made possible because Samsung cameras are also getting better and better over time.

The process I was just explaining about the teddy bear, the point cloud, and placing it into an environment…that is a great example of 3D scanning, where you can move around the object but it's not necessarily taking a bunch of photos and stitching them together to create a 3D model. The 3D scanning part is a little bit more dynamic. But it is quite light sensitive. So, for example, if you're in a very sunny area, it'll be harder to get a higher quality 3D model from that. So keeping the environment in mind is really key there. That applies to photogrammetry as well, but 3D scanning is especially sensitive to this.

Rushali: So children, no overexposure kindly…

Angelica: …of photos. Yes. [laughter]

Though, that scanning process can take some time and it can vary in terms of fidelity. And then also that 3D model may be pretty hefty. It may be a pretty large file size. So then that's when we're getting into the conversation of having this be uploaded to the cloud and offload some of that storage there. Not beefing up the user's phone, but it goes somewhere else and then the show could actually funnel that into the experience, after the content moderation part of course.

Rushali: You've brought up a great point over here as well, because a big, big, big chunk of this is also fast internet at the end of the day because 3D files are heavy files. They are files that have a lot of information about the textures.

The more polygons there are, the heavier the files. All of that dramatic graphics part comes into play and you are going to get stuck if you do not have extremely fast internet. And I *wink, wink* think 5G is involved in this situation.
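To put rough numbers on the point about polygons, textures, and connection speed, here is a back-of-envelope sketch. The byte sizes per vertex and per triangle, the uncompressed RGBA texture, and the example mesh and uplink speeds are all illustrative assumptions, not measurements from any particular scanning app or file format.

```python
# Back-of-envelope estimate only; the byte sizes below are illustrative assumptions.

BYTES_PER_VERTEX = 32    # assumed: position (12) + normal (12) + UV (8)
BYTES_PER_TRIANGLE = 12  # assumed: three 32-bit vertex indices


def mesh_size_bytes(vertices: int, triangles: int, texture_px: int) -> int:
    """Rough uncompressed size of a textured mesh."""
    geometry = vertices * BYTES_PER_VERTEX + triangles * BYTES_PER_TRIANGLE
    texture = texture_px * texture_px * 4  # assumed: square RGBA texture, 1 byte per channel
    return geometry + texture


def upload_seconds(size_bytes: int, uplink_mbps: float) -> float:
    """Transfer time at a given uplink speed in megabits per second."""
    return size_bytes * 8 / (uplink_mbps * 1_000_000)


if __name__ == "__main__":
    size = mesh_size_bytes(vertices=100_000, triangles=200_000, texture_px=2048)
    for mbps in (5, 50, 500):  # hypothetical uplink speeds
        print(f"{size / 1e6:.1f} MB at {mbps} Mbps: {upload_seconds(size, mbps):.1f} s")
```

Under these assumptions a single scan is a bit over 22 MB and takes roughly half a minute on a slow uplink, which is why both the polygon count and the connection speed matter once thousands of people upload at once.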

Angelica: Yeah, for sure. 5G is definitely a point of contention in the United States right now, because they're converting to that process, which is affecting aviation and the FAA and other things like that. So it's like, yeah, the possibilities with 5G are huge, but there's some things to work out still.

Rushali: So that's the lay of the land of 3D scanning and photogrammetry. And we do have apps right now that in almost real time can give you a 3D scan of an object in their app. But the next part is the integration of this particular feature with a live show or a virtual ecosystem or putting it into a metaverse. What do you think that's going to look like?

Angelica: This will involve a few different components. One: being the storage onto the cloud, or a server of some kind that can store, not just one person’s scan, but multiple people's scans. And I could easily see an overload situation where if you say to an audience of Beliebers, “Hey, I want you to scan something.”

They're like, “Okay!” And you got 20,000 scans that now you dynamically have to sift through and have those uploaded into the cloud to then be able to put into the experience. I can anticipate quite an overload there.

Rushali: You're absolutely on point. You're in a concert: 20,000 to 50,000 people are in the audience. And they are all scanning something that they have either already scanned or will be scanning live. You better have a bunch of servers there to process all of this data that's getting thrown your way. Imagine doing this activity, scanning an object and pulling it up in a live show. I can 100% imagine someone's going to scan something inappropriate. And since this is real time, it's gonna get broadcasted on a live show. Which brings into the picture the idea of curation and the idea of moderation.

Angelica: Because adults can be children too.

Rushali: Yeah, absolutely. If there's no moderation…turns out there's a big [adult product] in the middle of your concert now. And what are you going to do about it?

Angelica: Yeah exactly. Okay, so we've talked about how there are a lot of different platforms out there that allow for 3D scanning or the photogrammetry aspect of scanning an object and creating a virtual version of it, along with a few other considerations as well.

Now we get into…how in the world do we do this? This is where we explore ways that we can bring the idea to life, tech that we can dive a bit deeper into, and then just some things to consider moving forward. One thing that comes up immediately (we've been talking a lot about scanning) is how do they scan it? There are a lot of open source applications that allow a custom app to enable the object capture aspect of it. We talked about Apple, but there's also a little bit that has been implemented within ARCore, and this is brought to life with depth sensors such as LiDAR cameras. It's something that would require a lot of custom work to make from scratch. We would have to rely on some open source APIs to at least get us the infrastructure, so that we could save a lot of time and make sure that the app is created within a short period of time. Because what tends to happen with a lot of these cool ideas is people say, “I want this really awesome idea, but in like three months, or I want this awesome idea yesterday.”

Rushali: I do want to point out that a lot of these technologies have come in within the last few years. If you had to do this idea just five years ago, you would probably not have access to object capture APIs, which are extremely advanced right now because they can leverage the capacity of the cameras and the depth sensing. So doing this in today's day and age is actually much more doable, surprisingly.

And if I had to think about how to do this, the first half of it is basically replicating an app like Qlone. And what it's doing is, it's using one of the object capture APIs, but also leveraging certain depth sensing libraries and creating that 3D object.

The other part of this system would then be: now that I have this object, I need to put it into an environment. And that is the bigger unknown. Are we creating our own environment or is this getting integrated into a platform like Roblox or Decentraland? Like what is the ecosystem we are living within? That needs to be defined.

Angelica: Right, because each of those platforms has its own affordances to be able to even allow for this way of sourcing those 3D models dynamically and live. The easy answer, and I say “easy” with the lightest grain of salt, is to do it custom because there's more that you can control within that environment versus having to work within a platform that has its own set of rules.

We learned this for ourselves during the Roblox prototype for the metaverse, where there are certain things that we wanted to include for features, but based on the restrictions of the platform, we could only do so much.

So that would be a really key factor in determining: are we using a pre-existing platform or creating a bespoke environment where we can control a lot more of those factors?

Rushali: Yeah. And while you were talking about the ecosystem parts of things, it sort of hit me. We're talking about 3D scanning objects, like, on the fly as quickly as possible. And they may not come out beautifully. They may not be accurate. People might not have the best lighting. People might not have the steadiest hands because you do need steady hands when 3D scanning objects. And another aspect that I think I would bring in over here when it comes to how to do this is pulling in a little bit of machine learning so that we can predict which parts of the 3D scan have been scanned correctly versus scanned incorrectly to improve the quality of the 3D scan.

So in my head, this is a multi-step process: figuring out how to do the object capture and get that information through the APIs available in ARCore or ARKit (whichever one), bringing in the object and running it through a machine learning algorithm to see if it’s the best quality, and then bringing it into the ecosystem. Not to complicate it, but I feel like this is the sort of thing where machine learning can be used.
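The multi-step process described here (capture, quality check, hand-off to the environment) can be sketched as a small pipeline. This is a minimal sketch under stated assumptions: `Scan`, `run_pipeline`, the `0.6` cutoff, and `placeholder_scorer` are all hypothetical names and values, and the scorer is a stand-in for a trained model rather than a real one. No ARCore or ARKit calls are shown.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Scan:
    owner: str
    mesh_path: str        # where the captured model was uploaded
    quality: float = 0.0  # set by the quality step, 0.0 (bad) to 1.0 (good)


def run_pipeline(
    scans: List[Scan],
    score_quality: Callable[[Scan], float],
    min_quality: float = 0.6,  # assumed cutoff, would need tuning on real scans
) -> List[Scan]:
    """Score every captured scan and keep the ones good enough for the scene."""
    scene = []
    for scan in scans:
        scan.quality = score_quality(scan)  # step 2: machine learning quality check
        if scan.quality >= min_quality:
            scene.append(scan)              # step 3: hand off to the environment
        # otherwise, in practice: ask the guest to rescan
    return scene


def placeholder_scorer(scan: Scan) -> float:
    # Stand-in for a trained model: pretends steadier captures score higher.
    return 0.9 if "steady" in scan.mesh_path else 0.3


captured = [  # step 1: results of object capture on the phone
    Scan("maria", "uploads/mic_steady.usdz"),
    Scan("guest", "uploads/bear_shaky.usdz"),
]
scene = run_pipeline(captured, placeholder_scorer)
print([s.owner for s in scene])
```

Passing the scorer in as a function keeps the pipeline independent of whichever quality model ends up being used.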

Angelica: Yeah, definitely. And one thing that would be interesting to consider is that the dynamic aspect of scanning something and then bringing it live is the key part in all this. But it also has the most complications and is the most technology dependent, because there's a short amount of time to do a lot of different processes.

One thing that I would recommend is: does it have to be real time? Could it be something that's done maybe a few hours in advance? Let's say that there's a really awesome Coachella event where we have a combination of a digital avatar influencer of some kind sharing the stage with the live performer. And for VIP members, if they scan an object before the show, they will be able to have those objects actually rendered into the scene.

So that does a few different things. One: it decreases the amount of processing power that's needed because it's only available for a smaller group of people. So it becomes more manageable. Two: it allows for more time to process those models at a higher quality. And three: content moderation. Making sure that what was scanned is something that would actually fit within the show.

And there is a little bit more of a back and forth. Because it's a VIP experience, you could say: “Hey, so the scan didn't come out quite as well.” I do agree with you, Rushali, that having implementation of machine learning would help in assisting this process. So maybe having it a little bit of time before the actual experience itself would alleviate some of the heaviest processing and the heaviest usage that can cause some concerns when doing it live.

Rushali: And to add to that, I would say this experience (if we had to do it today in 2022) would probably be something on the lines of this: you take the input at the start of a live show, and use the output towards the end of it. So you have those two hours to do the whole process of moderation, to do the whole process of passing it through quality control. All of these steps that need to happen in the middle.

Also, there's a large amount of data transfers happening as well. You're also rendering things at the same time and this is a tricky thing to do in real time as of today. You need to do it with creative solutions, with respect to how you do it. And not with respect to the technologies you use, because the technologies currently have certain constraints.
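To get a feel for what a two-hour window means at concert scale, here is a rough capacity sketch. The three minutes of processing per scan and the audience sizes in the loop are assumptions chosen for illustration; real cleanup and review times would have to be measured.

```python
import math


def workers_needed(scans: int, seconds_per_scan: float, window_seconds: float) -> int:
    """Parallel workers required to finish every scan inside the window."""
    total_work = scans * seconds_per_scan
    return math.ceil(total_work / window_seconds)


TWO_HOURS = 2 * 60 * 60
SECONDS_PER_SCAN = 180  # assumption: 3 minutes of cleanup, checks and review per scan

for audience in (50, 20_000, 50_000):  # hypothetical small group vs. full concert crowds
    print(audience, workers_needed(audience, SECONDS_PER_SCAN, TWO_HOURS))
```

With these numbers a small group fits on a couple of workers, while a full crowd needs hundreds running in parallel, which is the gap between a manageable prototype and a serious infrastructure problem.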

Angelica: Yeah, and technology changes. That's why the idea is key because maybe it's not perfectly doable today, but it could be perfectly doable within the next few years. Or even sooner, we don't know what's going on behind the curtain of a lot of the FAANG companies. New solutions could be coming out any day now that enable some of these pain points within the process to be alleviated much more.

So we've talked about the dynamic aspect of it. We've talked about the scanning itself, but there are some things to keep in mind for those scanning an object. What are some things that would help with getting a clean scan?

There's the basics, which is avoid direct lighting. So don't do the theater spotlight on it because then that’ll blow out the picture. Being uniformly lit is a really important thing here, making sure to avoid shiny objects. While they're pretty pretty, they are not super great at being translated into reliable models because the light will reflect off of them.

Those are just a few, and there's definitely others, but those are some of the things that during this process would be a part of the instructions when the users are actually scanning this. After the scan is done, like I mentioned, there are some artifacts that could be within the scan itself. So an auto clean process would be really helpful here, or it has to be done manually. The manual part would take a lot more time, which would hurt the feasibility aspect of it. And that's also where maybe the machine learning aspect could help with that.
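The lighting advice could also be backed by an automatic pre-check in the scanning app, so guests get a warning before they submit a blown-out capture. A minimal sketch, assuming the photo has already been converted to a flat list of 8-bit grayscale values; the `250` brightness threshold and the 5% limit are arbitrary starting points, not tuned values.

```python
from typing import Sequence


def blown_out_fraction(gray_pixels: Sequence[int], threshold: int = 250) -> float:
    """Share of 8-bit grayscale pixels at or above the brightness threshold."""
    if not gray_pixels:
        return 0.0
    clipped = sum(1 for p in gray_pixels if p >= threshold)
    return clipped / len(gray_pixels)


def is_overexposed(gray_pixels: Sequence[int], max_fraction: float = 0.05) -> bool:
    """True when too much of the image is close to pure white."""
    return blown_out_fraction(gray_pixels) > max_fraction


evenly_lit = [120] * 98 + [255] * 2   # a couple of small highlights
spotlight = [120] * 60 + [255] * 40   # large blown-out area
print(is_overexposed(evenly_lit), is_overexposed(spotlight))
```

A check like this only catches overexposure; shiny or reflective objects would need a different test.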

And then in addition to cleaning it up would be the compositing, making sure that it looks natural within the environment. So all those things would have to be done as a combination of an automated process and a manual process. I could see that for the final models that are chosen to be put into the show, that could be a more manual process to make sure that the lighting suits the occasion. And if we go with the route that you mentioned, which is to do it at the very beginning of the show, then we have a bunch of time (and I say a bunch, but it's really two hours, optimistically) to do all of these time-intensive processes and make sure that it's relevant by the end of the show.

Moderation is something we've also talked about quite a bit here. There are a lot of different ways for moderation to happen, but it's primarily focused on image, text and video. There is a paper out of Texas A&M University that does explore moderation of 3D objects, more to prevent NSFW (not safe for work) 3D models from showing up when teachers just want their students to look up models for 3D printing. That's really the origin of this paper. And they suggested different ways that the learning process of moderation could be done, which they call human-in-the-loop augmented learning. But it's not always reliable. This is an exploratory space without a lot of concrete solutions. So this would be one of the heavier things to implement, looking at the entire ecosystem of what the concept would need.

Rushali: Yeah, if you had to add a more sustainable way. And when I say sustainable, I mean, not with respect to the planet, because this project is not at all sustainable, considering there’s large amounts of data being transferred. But coming back to making the moderation process more sustainable, you can always open it up to the community. So the people who are attending the concert decide what goes in. Like maybe there's a voting system, or maybe there is an automated AI that can detect whether someone has uploaded something inappropriate. There's different approaches within moderation that you could take. But for the prototype, let's just say: no moderation because we are discussing, “How do we do this?” And simplifying it is one way of reaching a prototype.

Angelica: Right, or it could be a manual moderation.

Rushali: Yes, yes.

Angelica: Which would help out, but you would need to have the team ready for the moderation process of it. And it could be for a smaller group of people.

So it could be for an audience of, let's say 50 people. That's a lot smaller of an audience to have to sift through the scans that are done versus a crowd of 20,000 people. That would definitely need to be an automated process if it has to be done within a short amount of time.

So in conclusion, what we've learned is that this idea is feasible…but with some caveats. Caveats pertaining to how dynamic the scan needs to be. Does it need to be truly real time or could it be something that can take place over the course of a few hours, or maybe even a few days or a few weeks? It makes it more or less feasible depending upon what the requirements are there.

The other one is thinking about the cleanup, making sure that the scan is fitting with the environment, it looks good, all those types of things. The moderation aspect to make sure that the objects that are uploaded are suited to what needs to be implemented. So if we say, “Hey, we want teddy bears in the experience,” but someone uploads an orange. We probably don't want the orange, so there is a little bit of object detection there.
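The teddy bear versus orange example, combined with the community voting idea raised earlier, could be sketched as a simple moderation gate. Everything here is hypothetical: `Detection` stands in for the output of some object classifier, and the confidence and vote thresholds are placeholder values. Anything the gate is unsure about is routed to a human reviewer rather than decided automatically.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class Detection:
    label: str         # what a hypothetical classifier thinks the object is
    confidence: float  # 0.0 to 1.0


def moderate(
    detection: Detection,
    allowed_labels: Set[str],
    approve_votes: int,
    reject_votes: int,
    min_confidence: float = 0.8,  # assumed threshold
    min_votes: int = 5,           # assumed minimum number of votes cast
) -> str:
    """Return 'accept', 'reject', or 'review' (send to a human moderator)."""
    if detection.confidence < min_confidence:
        return "review"  # classifier is unsure, so a person should look
    if detection.label not in allowed_labels:
        return "reject"  # confidently the wrong kind of object
    if approve_votes + reject_votes < min_votes:
        return "review"  # not enough community input yet
    return "accept" if approve_votes > reject_votes else "reject"


allowed = {"teddy bear"}
print(moderate(Detection("teddy bear", 0.95), allowed, approve_votes=12, reject_votes=1))
print(moderate(Detection("orange", 0.90), allowed, approve_votes=12, reject_votes=1))
print(moderate(Detection("teddy bear", 0.50), allowed, approve_votes=12, reject_votes=1))
```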

Okay, that's about it. Thanks everybody for listening to Scrap The Manual and thank you, Maria, for submitting the question that we answered here today. Be sure to check out our show notes for more information and references of things that we mentioned here. And if you like what you hear, please subscribe and share. You can find us on Spotify, Apple Podcasts, and wherever you get your podcasts.

Rushali: And if you want to suggest topics, segment ideas, or general feedback, feel free to email us at scrapthemanual@mediamonks.com. If you want to partner with Media.Monks Labs, feel free to reach out to us over there as well.

Angelica: Until next time…

Rushali: Thank you!