Monks and Google Cloud: Powering the Future

I am proud to announce that Monks has achieved two distinguished Google Cloud partner specializations: Data Analytics and Marketing Analytics. These accolades mark a significant milestone in our journey as a long-time strategic partner of Google, solidifying our commitment to pushing the boundaries of marketing and technology.

A Legacy of Strategic Partnership

For years, Monks has partnered with Google to elevate our clients' marketing strategies to unprecedented heights.

Our collaboration has been driven by a shared vision of delivering transformative solutions that empower businesses to harness the full potential of data and technology. Securing these specializations is a testament to the depth of our expertise and the strength of our partnership with Google Cloud.

Driving Innovation Through Commitment

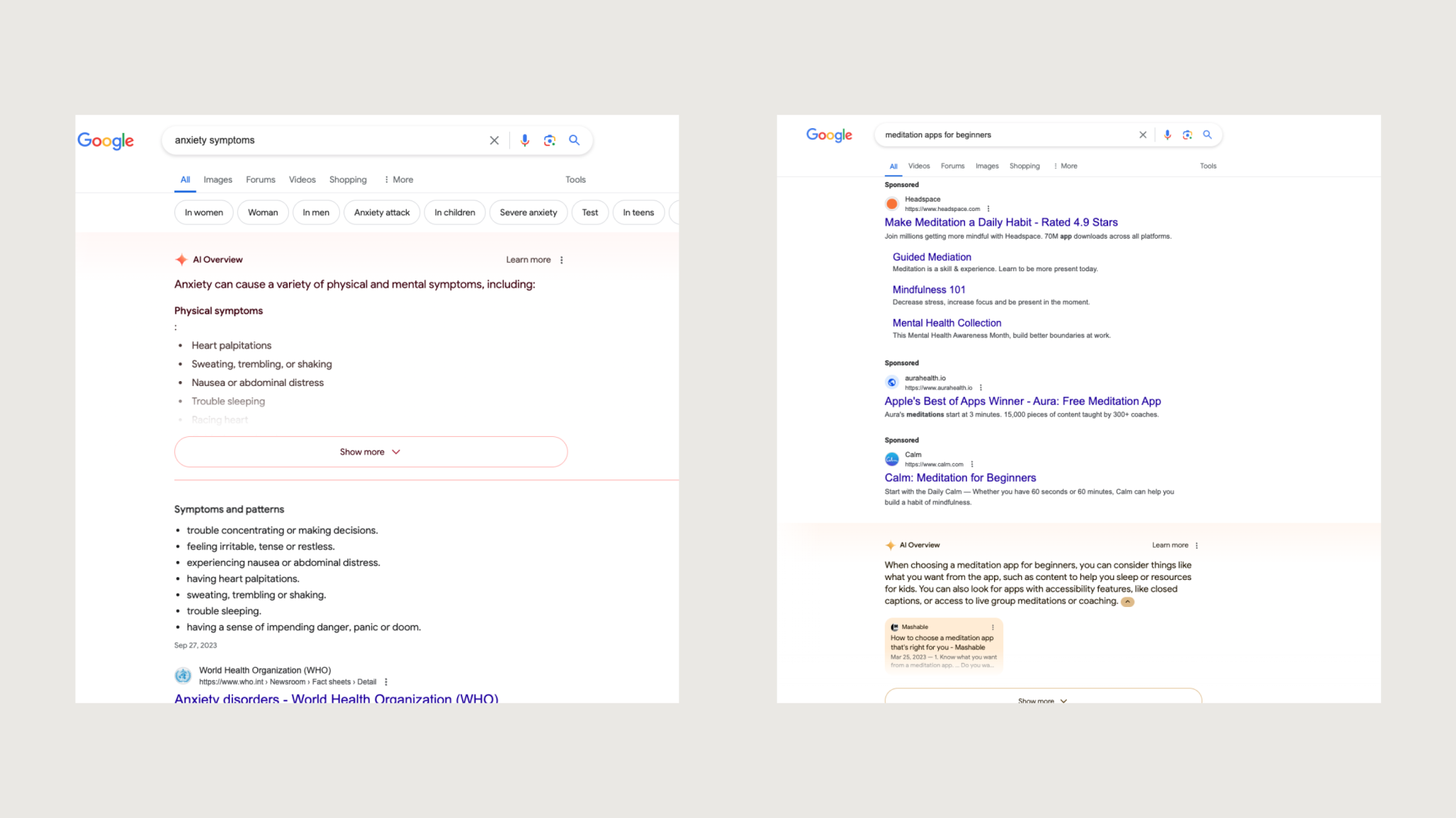

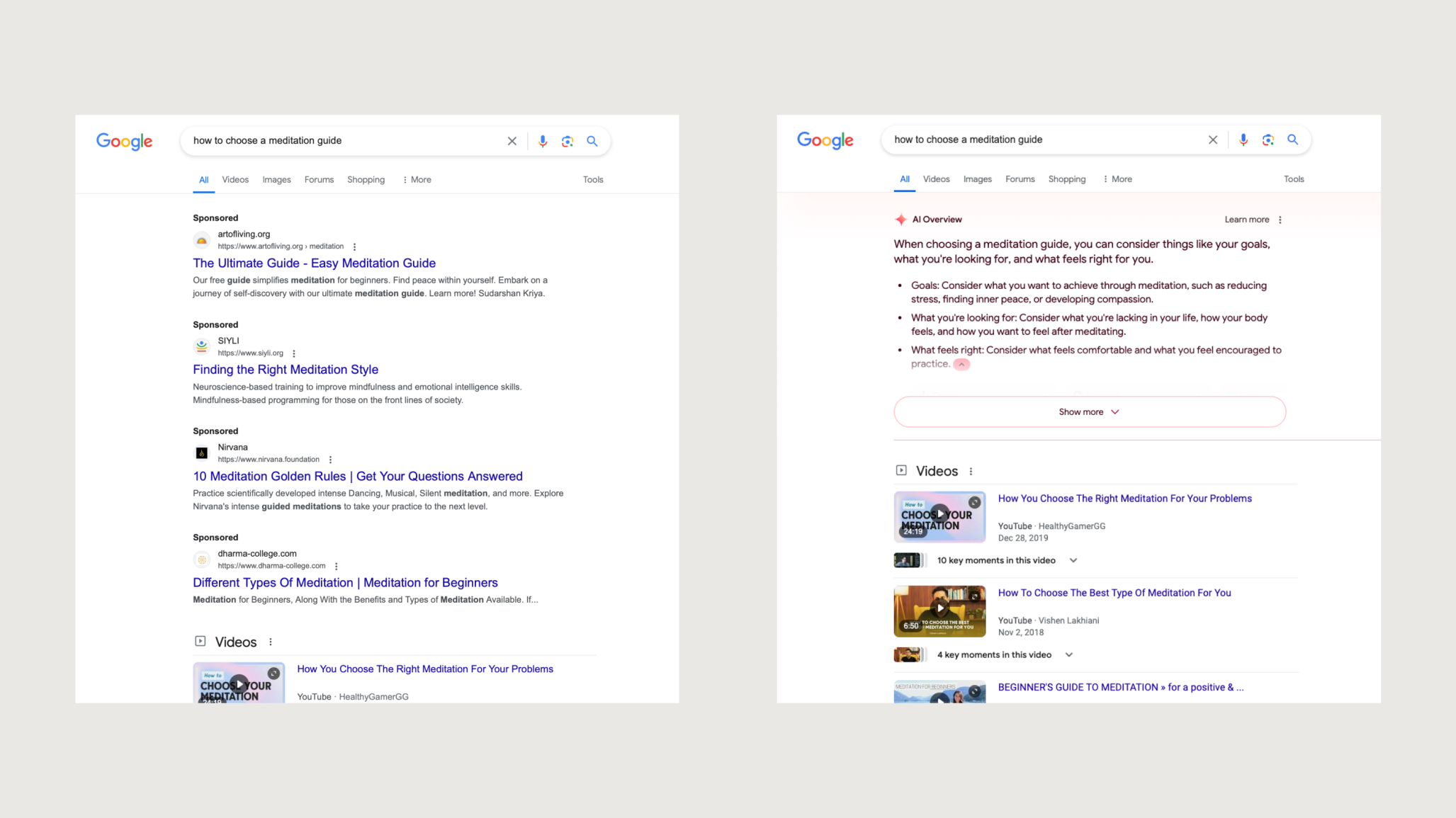

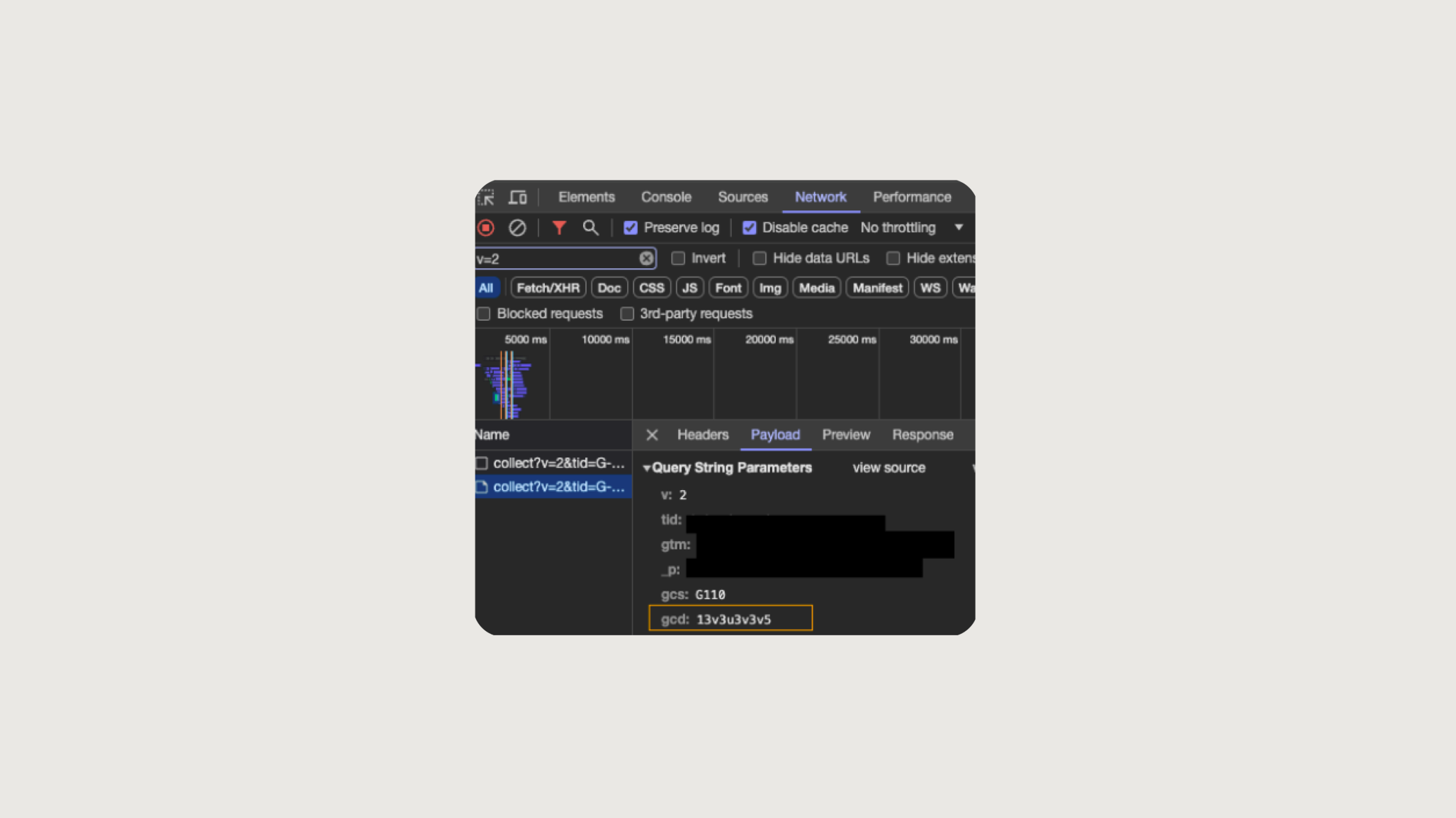

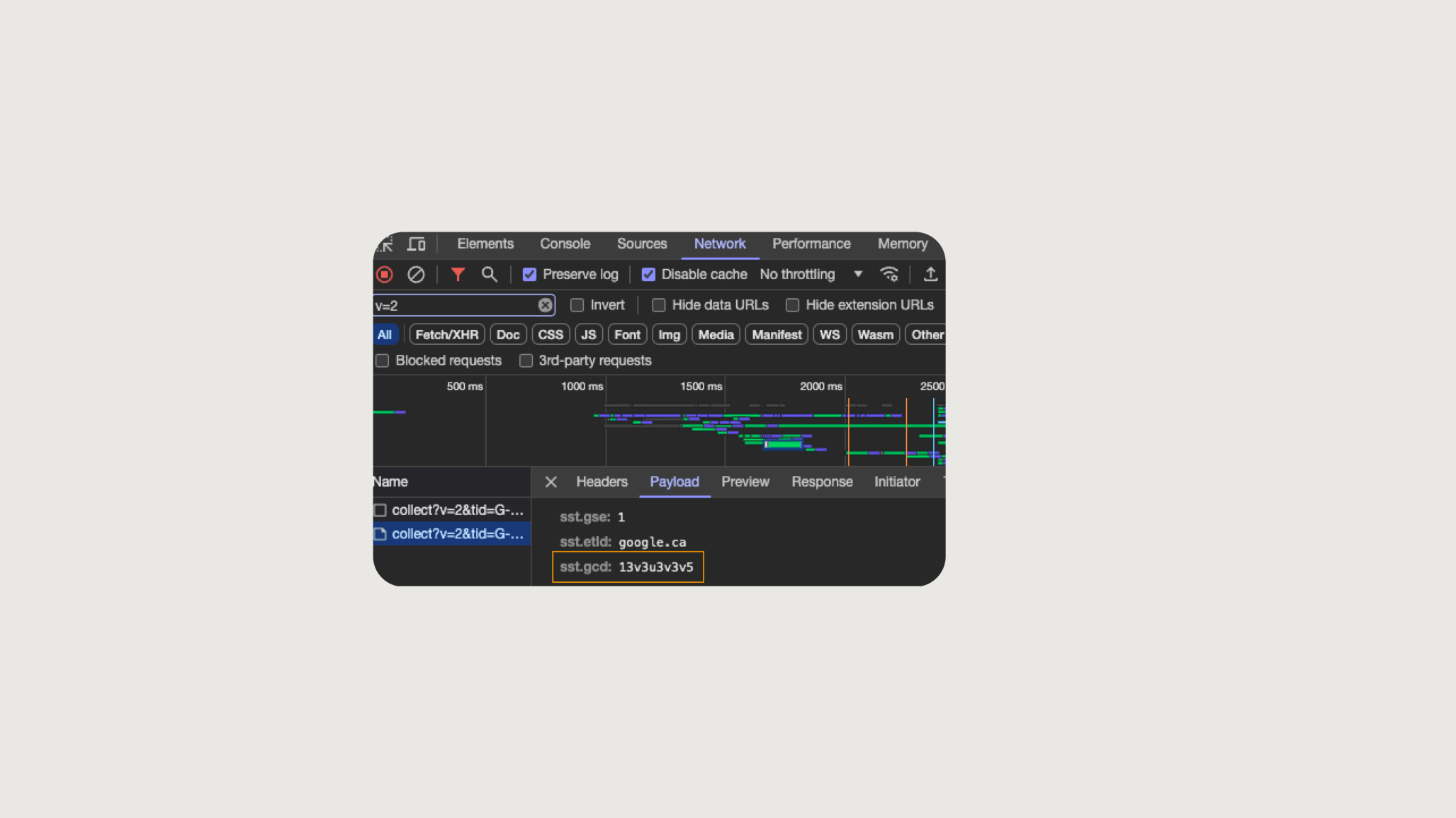

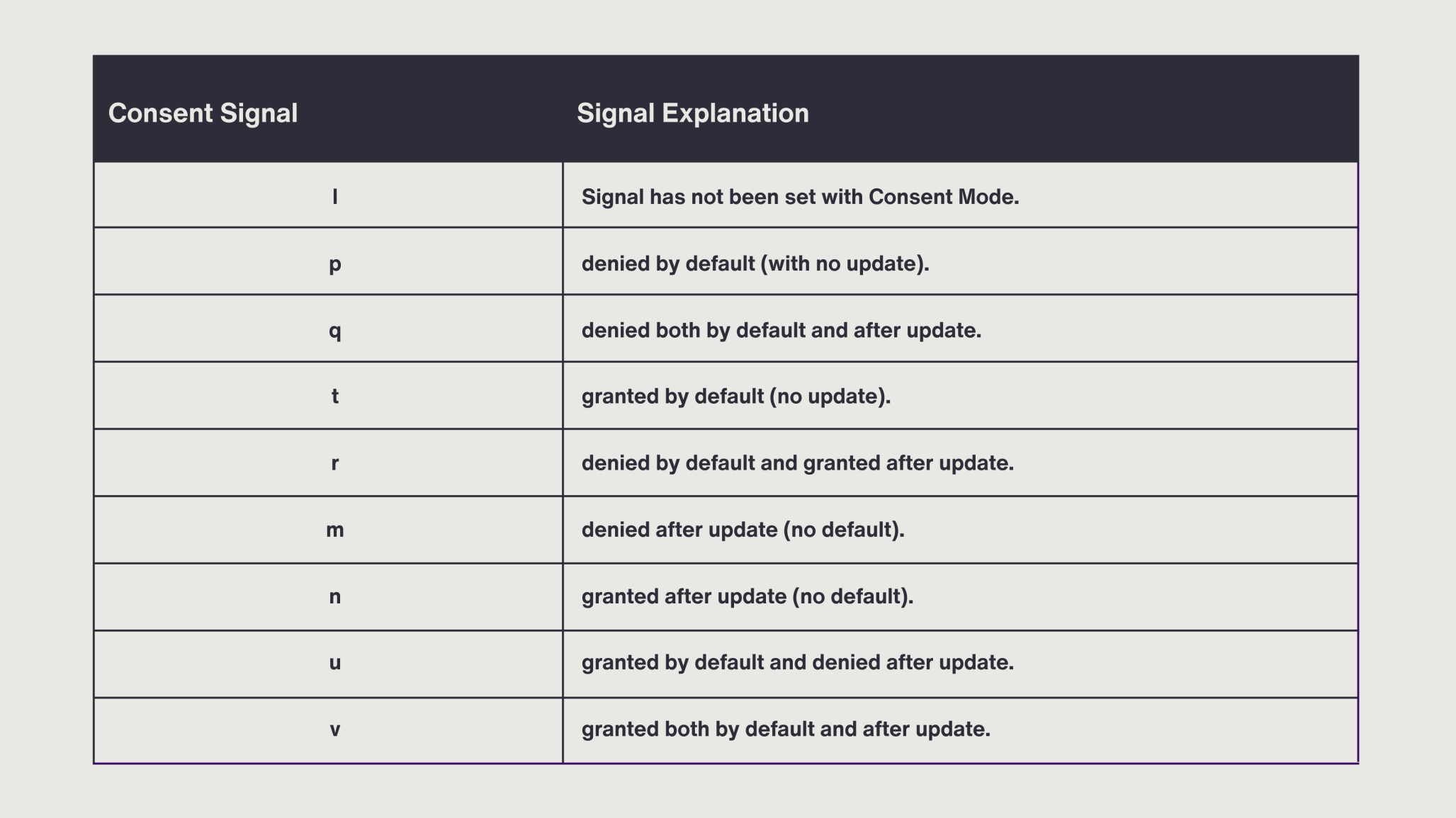

These specializations underscore our pursuit of excellence in expanding our Google Cloud knowledge base and adopting innovative solutions. Achieving the Data Analytics specialization demonstrates our ability to excel in data ingestion, preparation, storage and analysis using Google Cloud technology. Meanwhile, the Marketing Analytics specialization highlights our capability to move clients from fragmented datasets to data-driven marketing strategies that yield measurable results.

“This enables Monks to support partners in developing both technical solutions and data strategies that drive their success,” my colleague Mikey adds.

Setting the Stage for AI and Machine Learning

These specializations not only validate our expertise but also prepare us to leverage the latest advancements in AI and machine learning. By combining cutting-edge technologies with our deep understanding of data analytics and marketing analytics, we’re poised to unlock even greater value for our clients.

Expanding Capabilities & Innovating with Looker

As we celebrate these achievements, Monks is already looking ahead, leveraging tools like Looker to empower our clients with actionable insights and seamless data visualization. Mikey says, “Looker's semantic layer translates raw data into a language that both downstream users and LLMs can understand. By utilizing LookML to provide trusted business metrics, we create a central hub for data context, definitions and relationships, powering both BI and AI workflows to drive our clients’ businesses forward.”

A Commitment to Client Success

At Monks, our mission is simple yet profound: to empower clients with innovative, data-driven solutions that deliver tangible impact. For years, we’ve championed the importance of breaking down silos, enabling seamless data accessibility across organizations, and building pathways for activation (see “Optimizing Workflows with First-Party Data”).

These specializations are more than milestones; they embody our dedication to excellence and our pursuit of success for our clients.

“To put it bluntly, we focus on delivering tangible business value to our clients by applying both our technical and marketing expertise,” says Hayden Klei, VP Data Consulting. “We don’t focus on shiny vanity projects for the sake of industry accolades; rather, we define success by the impact we deliver to our clients’ top and bottom lines.”

As we continue to invest in Google Cloud and our talented teams, we are setting ourselves up for even greater achievements, driving value through expertise, innovation and a shared vision of success. Together with Google, Monks is ready to lead the charge into the future of marketing analytics and data-driven solutions.

For organizations seeking a trusted partner to navigate the complexities of data and marketing, Monks stands ready to deliver unparalleled expertise and transformative results.