VR Scout Runs, Rappels and Revels in the Jack Ryan Experience

This is one of our favorites, and that says a lot when you consider the buzz and coverage Amazon’s Jack Ryan Experience generated. Here, VR Scout covers our work with Amazon Studios at Comic-Con:

Rappelling from a helicopter and zip-lining in VR will have your heart pounding.

What better way to tease the worldwide release of the new Tom Clancy’s Jack Ryan series on Amazon Prime than to drop thousands of Comic-Con fans into the boots of Jack Ryan himself?

Dropping down into a conflict zone under fire made everything that happened next seem like a quick blur. I “crossed” a ridiculously long, unsteady plank of wood to enter another bombed-out building. I picked up a weapon and began engaging with enemies, ducking behind crates that were both physically and virtually there.

Walking further down a hallway, I came to a balcony. I grabbed onto a zip-line and literally just walked off the side of the building. I landed on what could only be safety mats and was quickly ushered into a vehicle where I had to drive myself to safety. What the hell just happened?

Keep in mind I was physically walking, grabbing, and flying with a VR headset on, the entire time.

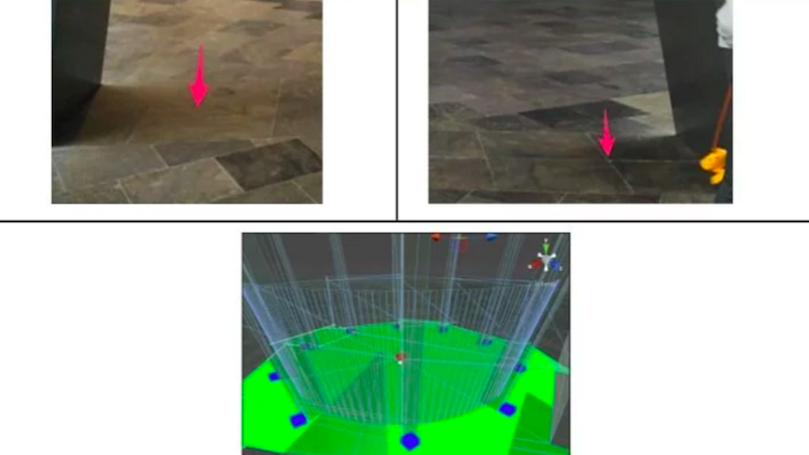

I’ve been to location-based entertainment VR centers before, but this was another level. It’s especially mind-boggling when you realize that you’re being tracked in VR the entire time with an OptiTrack motion capture system and a wireless VR headset system.

Did I mention that this was all outside under direct sunlight?

The experience was created in collaboration with MediaMonks, and a team of over 300 worked nonstop over a few months to get it ready for Comic-Con. Quite an accomplishment considering the public will get to experience it this week only. This really should be its own theme park.

The immersive Jack Ryan Training Field pushes you to find out whether you have what it takes to become a field operative. And I can tell you, I barely had what it took. It’s a nerve-racking experience that had me questioning the reality in front of me and forced me to push aside any fear of heights I may have had.

The Jack Ryan Training Field was also streamed live on Twitch, where viewers could interact and throw challenges at me or anyone else running through the course.

On top of the VR Training Field, Amazon also erected a massive escape room that will throw you into your first field assignment. Created in collaboration with AKQA and Unit9, you can dig deep to thwart an extremist conspiracy, uncover a plot of double-crossing, and obtain classified intel. The Dark Ops escape experience is run as a live drama, with actors, voice technology and immersive set pieces.

If you’re heading to Comic-Con this week, you’ll find the Tom Clancy’s Jack Ryan experience right outside Comic-Con at the corner of MLK Promenade & 1st Street. The season premiere airs August 31st on Amazon Prime.

Taking over an entire 60,000-square-foot city block, Amazon created a massive event park that places you in the heart of the Middle East. It featured one of the most extravagant attempts at an end-to-end warehouse-scale VR experience I’ve ever seen, and it had everything: rappelling, zip-lining, plank walking, and even a car chase.

Over the years, I’ve grown accustomed to VR taking center stage at Comic-Con, experiencing everything from an immersive Blade Runner ride to a Mr. Robot simulcast at Petco Park. But for the most part, the VR demos were usually as simple as putting on a headset and enjoying the ride.

Not for Jack Ryan. Jack Ryan loves action—and stomach drops.

Upon entering the training park and receiving your Analyst ID badge, the first thing you’ll notice is the Jack Ryan Training Field, an imposing obstacle course with a life-size Bell Huey military helicopter propped a couple of floors off the ground.

Before entering the immersive “training field,” I got the privilege of watching UFC fighter Ronda Rousey breeze through her run. Geez, I have to follow in her footsteps?

Slowly making my way up multiple flights of stairs to gear up in the cabin of the helicopter, precariously perched atop a bombed-out building, made me realize how out of the ordinary this VR experience was about to be. I strapped on a rappelling harness, an HP Omen X VR backpack PC, a modified Oculus Rift VR headset, and hand and foot trackers. My heart began to beat faster.

Then virtual reality happened. Staff members dressed as soldiers guided me to the edge of the sliding cabin door. In VR, I could see my limbs in front of me as I stutter-stepped to the edge. It was clear that I was now flying high over a war-torn city. I took a seat and nervously watched my virtual legs dangle in the air off the side of the chopper. The next thing I knew, I was rappelling out, actually lowered by a crane from the safety of the cabin. My heart was racing.
