Monks Is Awarded AI Visionary Award at Automate 2024

Automate 2024 took place this week, and I had the privilege of attending the prestigious event hosted by Workato. This year’s theme was The New Automation Mindset. I got to take in all of the awesome work that Workato and its partners are building, and to share some of our own stories of how we’re managing and automating end-to-end business processes using the technology. The cherry on top? We won the “AI Visionary” Customer Impact Award!

This award was established to celebrate customer champions and how they are using Workato technology to transform their teams and build organizational effectiveness. Our innovation experiment leveraged Workato’s integration capabilities to bring together Salesforce, Google Gemini and Slack so that we could talk to our CRM data. Weaving these technologies together into an intuitive natural language interface significantly simplifies the user experience while streamlining complex workflows and enhancing data accessibility.

My colleague Erin Freeman, Director of Automation Strategy at Monks, emphasized the strategic importance of integrating AI to democratize data access across the organization. She stated, "Our goal was not just to simplify processes but to empower every team member to make data-driven decisions effortlessly. This project represents a significant leap towards achieving that vision."

Giving both technical and nontechnical people the ability to analyze data and extract insights is key to a healthy, modern business. Earlier this year, S4Capital Executive Chairman Sir Martin Sorrell noted that democratization of knowledge was a primary opportunity for AI innovation. He told the International News Media Association, “You will be able, with AI, to educate everyone in an organization. AI will flatten organizations, de-silo them. It will bring you a much more agile, leaner, flatter organization.” Building on our experience developing end-to-end, AI-powered workflows like Monks.Flow, we set out to experiment internally with how Workato could help information travel across the business.

Automated data analysis could be as easy as having a conversation.

Imagine a world where you can interact with your business systems as easily as having a conversation. This vision becomes a reality when you bridge multiple systems—like project management tools, NetSuite for financials, and client presentation software—into a unified, conversational interface. Integrating these systems can streamline complex workflows to enhance data accessibility and improve the user experience.

When it comes to CRM adoption, a longstanding challenge has been simplifying interactions for end users who are unaccustomed to complex concepts like Opportunities and Stage Lifecycles used by their platform of choice. Traditionally, navigating through these systems involves convoluted click paths, prompting the question: what if simply conversing with your system were the norm?

A few years back, Salesforce took an interesting step toward this vision with Einstein Voice, which aimed to connect Amazon Alexa to Salesforce. While those conversations were very scripted and limited, they showcased the potential of conversational interactions with business systems. Today, the rise of large language models (LLMs) is inspiring new ways of supporting sophisticated conversations with Salesforce.

Automating CRM insights is as easy as one, two, three.

To bring this solution to life, we began with Salesforce as our core CRM platform. Salesforce is a leading customer relationship management platform, providing comprehensive tools for sales, customer service, marketing, and more. Despite those capabilities, its vast array of features can be daunting for users who are less familiar with CRM. Our goal was to simplify interactions with the platform by enabling natural language communication.

We then turned to Workato to bridge Salesforce with other essential systems and services. Workato's powerful integration capabilities allowed us to create seamless workflows, enabling data flow and process automation across platforms. By configuring Workato, we ensured that Salesforce could communicate with Google Gemini and Slack, forming the backbone of our conversational interface (more on those immediately below).

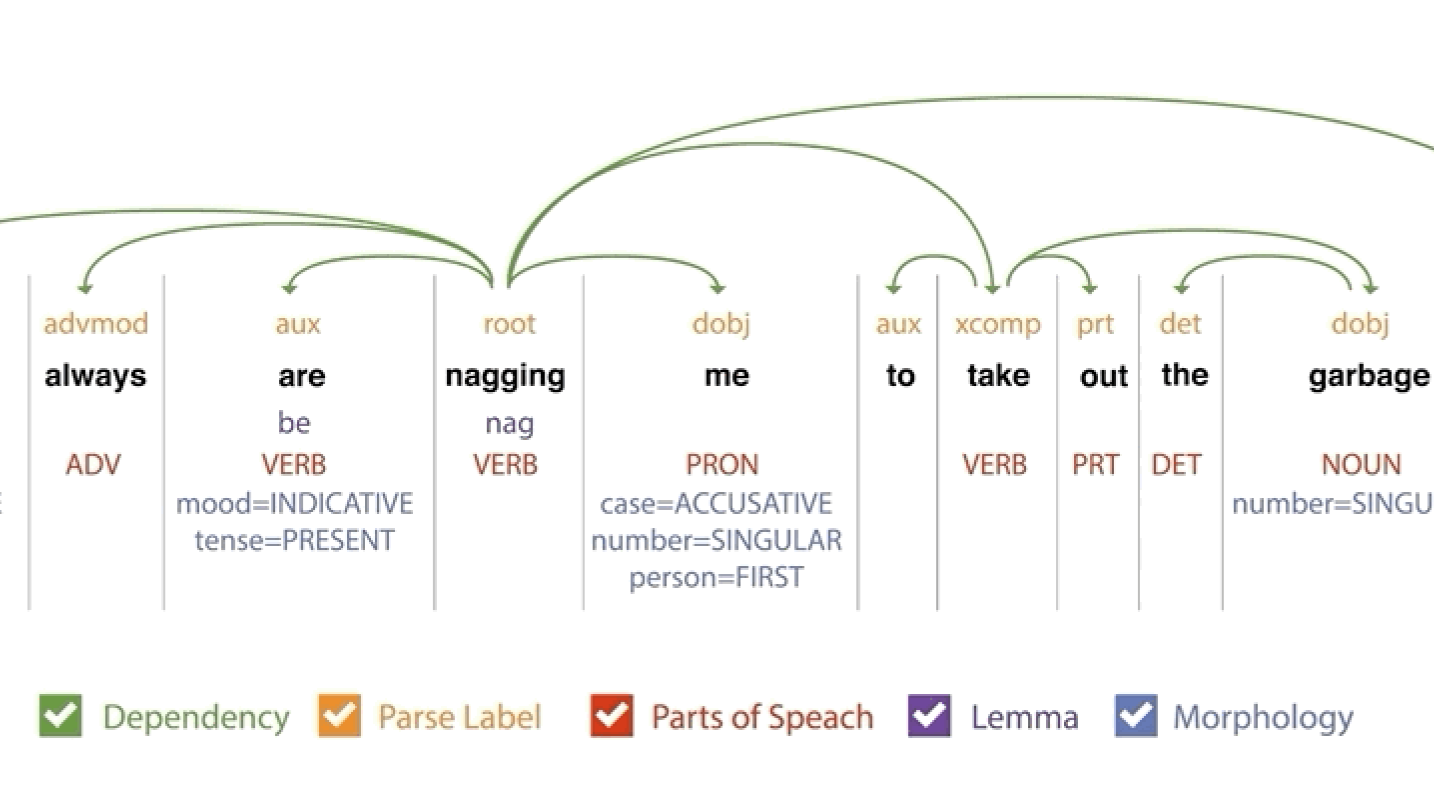

The integration of Google Gemini's API was a pivotal step. With its advanced language understanding and generation capabilities, Gemini allowed us to embed natural language processing into our system. This enabled users to perform complex Salesforce operations simply by conversing with the system, transforming user interactions into intuitive experiences.
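To make that step a little more concrete, here is a minimal sketch of how a model's reply might be turned into a structured CRM request. It is an illustration under assumptions, not our production setup: the prompt wording, the JSON fields (`intent`, `account`, `stage`) and the helpers `build_prompt` and `parse_reply` are all hypothetical, and the model reply is hard-coded rather than fetched from Gemini's API.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical prompt template; the wording and JSON schema are assumptions.
PROMPT_TEMPLATE = (
    "You translate chat messages into CRM requests.\n"
    'Reply with JSON only: {{"intent": "query" | "update", '
    '"account": string, "stage": string or null}}\n'
    "Message: {message}"
)

ALLOWED_INTENTS = {"query", "update"}


@dataclass
class CrmRequest:
    """Structured form of what the user asked for (hypothetical shape)."""
    intent: str
    account: str
    stage: Optional[str] = None


def build_prompt(message: str) -> str:
    """Wrap the user's chat message in the instruction template."""
    return PROMPT_TEMPLATE.format(message=message)


def parse_reply(reply_text: str) -> CrmRequest:
    """Validate the model's JSON reply before anything touches the CRM."""
    data = json.loads(reply_text)  # raises ValueError on malformed JSON
    intent = data.get("intent")
    if intent not in ALLOWED_INTENTS:
        raise ValueError(f"unsupported intent: {intent!r}")
    account = data.get("account")
    if not account:
        raise ValueError("reply is missing an account name")
    return CrmRequest(intent=intent, account=account, stage=data.get("stage"))


# Stand-in for a model reply; a real integration would receive this from the LLM.
sample_reply = '{"intent": "query", "account": "Acme", "stage": null}'
print(build_prompt("Hey, what are we doing with Acme?"))
print(parse_reply(sample_reply))
```

Validating the reply against a small set of allowed intents is one way to keep free-form model output from triggering an operation the workflow was never meant to perform.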

Finally, we chose Slack as the prompt interface, taking advantage of its widespread use and intuitive nature. By developing a Slack bot, we created a familiar environment for users to interact naturally with Salesforce. Slack's robust communication tools allowed users to issue commands and receive responses effortlessly, making complicated CRM operations as simple as a chat.

Here's how this innovation works in practice. Imagine the typical start to the day for Carli, a project manager at an advertising agency. Previously, her morning routine involved logging into various systems, sifting through multiple tabs, and deciphering complex dashboards just to get a handle on client projects. Now, with her morning coffee in hand, Carli simply opens Slack and types a message to the integrated agent: "Hey, what are we doing with Acme?" Instantly, the system, powered by Google Gemini, replies, "We are currently in the proposal stage with Acme, with a scheduled presentation next Tuesday."

This seamless interaction illustrates the transformative power of integrating conversational AI with business systems. Gone are the days of cumbersome navigation and overwhelming interfaces. But the benefits don't end there. Later, when Carli needs to update the status of the Acme project, she simply types, "I sent the proposal to Acme." Understanding from context what to do with that information, the system processes her request, triggers the appropriate workflow in Workato, and updates Salesforce, all without her having to leave the Slack interface. These interactions not only demonstrate ease of use but also highlight the efficiency that conversational interfaces can provide, freeing Carli to focus on her strategic role rather than the mechanics of data entry.
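The two exchanges above, one read and one update, can be sketched as a small message router. This is a toy illustration only: an in-memory dictionary stands in for Salesforce, a keyword rule stands in for the language model, and every name here (`crm`, `interpret`, `handle_message` and the handlers) is hypothetical rather than part of Workato, Slack or Salesforce.

```python
from typing import Callable, Dict

# In-memory stand-in for CRM records; a real build would reach Salesforce
# through an integration workflow instead.
crm: Dict[str, Dict[str, str]] = {
    "Acme": {"stage": "Proposal", "next_step": "presentation next Tuesday"},
}


def interpret(message: str) -> Dict[str, str]:
    """Toy keyword rule standing in for the language model (assumption)."""
    text = message.lower()
    account = next((name for name in crm if name.lower() in text), "")
    if "sent the proposal" in text:
        return {"intent": "update", "account": account, "stage": "Proposal Sent"}
    return {"intent": "query", "account": account}


def handle_query(req: Dict[str, str]) -> str:
    """Read path: summarize the account's current stage and next step."""
    record = crm[req["account"]]
    return (
        f"{req['account']} is in the {record['stage']} stage; "
        f"next step: {record['next_step']}."
    )


def handle_update(req: Dict[str, str]) -> str:
    """Write path: record the new stage on the account."""
    crm[req["account"]]["stage"] = req["stage"]
    return f"Updated {req['account']} to stage {req['stage']}."


HANDLERS: Dict[str, Callable[[Dict[str, str]], str]] = {
    "query": handle_query,
    "update": handle_update,
}


def handle_message(message: str) -> str:
    """Route one chat message to the matching read or write handler."""
    req = interpret(message)
    if req["account"] not in crm:
        return "Sorry, I could not find that account."
    return HANDLERS[req["intent"]](req)


print(handle_message("Hey, what are we doing with Acme?"))
print(handle_message("I sent the proposal to Acme."))
```

Keeping interpretation separate from the handlers means the keyword rule could later be swapped for a real model call without changing how reads and updates are carried out.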

This is only the beginning of what we can do with AI in CRM.

The journey towards democratizing data and fostering seamless information sharing is far from over. Empowering users with the ability to interact naturally with their business systems transforms how information travels across an organization. This democratization not only enhances organizational effectiveness but also aligns with the vision of creating a more agile, de-siloed business environment. Looking ahead, the potential for expanding this conversational interface into additional systems is immense.

Imagine integrating financial management systems like NetSuite to offer real-time financial insights, making the complex world of accounting as simple as asking a question. Or consider connecting project management tools such as Jira, where tasks, deadlines, and project progress can be managed through straightforward conversational commands. Moreover, the ability to seamlessly access and update presentation software could redefine client interactions, allowing for effortless preparation and presentation without the need to juggle multiple platforms.

The integration of Salesforce, Workato, Google Gemini and Slack represents a pivotal step towards the dream of conversational business systems. This proof of concept not only highlights the transformative potential of advanced conversational interfaces in enhancing CRM adoption and usability but also sets the stage for a future where interactions with business systems are more intuitive and user-friendly than ever. As LLMs continue to evolve, we anticipate even more powerful and seamless interactions, paving the way for a new era in enterprise software. By incorporating additional systems into this ecosystem, businesses stand to gain unprecedented efficiency and accessibility, heralding a future where managing complex operations is as effortless as having a conversation.