As the Auto Industry Evolves, All Roads Lead to Content

After a slow 2020, car sales are kicking into high gear as consumers become mobile again. S&P forecasts that global sales will expand by 8% to 10% this year, with the European market driving further growth in electric vehicles. As auto manufacturers accelerate into a brighter future, the Labs.Monks, our R&D and innovation group, explore in a new report how the auto industry is evolving and where it’s headed next.

The report tackles key concerns for automakers: the rise of D2C and foreign challenger brands, an urgent need for customer insights and the quickly evolving definition of what it means to be an auto brand today. At the center of each concern lies an opportunity to invest in and experiment with content channels that engage consumers across the brand experience, whether in the pre-purchase consideration phase or while driving the car itself.

A Shift to Content and User Experience

Gone are the days when a car’s value rested on horsepower and mechanics alone; while those certainly remain important, consumers are increasingly focused on software updates, wireless connectivity and digital user interfaces. At the same time, the prospect of autonomous vehicles becoming the norm is prompting brands to rethink what makes up an ideal user experience. For example, when a car drives itself, what’s left for passengers to engage with? “Entertainment becomes more important,” says Jamie Webber, Business Director.

Brands are wondering: do you have to partner with a streaming service? Do you become an entertainment brand as much as an automotive brand?

We’re still years away from fully autonomous cars. But “it’s a multiyear timeline, and brands want to be ready by the time cars can perform,” says Geert Eichhorn, Innovation Director. He notes how companies like Google are laying the groundwork now with platforms like Android Auto, which extends its Android mobile operating system to the car’s dashboard.

Just as the iPhone revolutionized our concept of what a mobile phone can do, digital dashboards and new user interfaces have the potential to redefine how we engage with automobiles right now: think of a speedometer that turns red when you’re speeding. Patrick Staud, Chief Creative Technologist at STAUD STUDIOS (which joined our team this year), even envisions deep customization opportunities through content packs. “One area we like to think about is the personalization of sound design for electric engines, buttons and different functions, much like mobile ringtones,” Staud says. “Customization could go so far as downloading dials and themes into your car’s interior, which could become a huge new channel for revenue.”

Building Direct, Digital Relationships

Content channels like those mentioned above can solve a critical challenge that automakers have universally wrestled with over the years: capturing consumer data. Dealerships commonly own the relationship with consumers. They walk them through the consideration phase, understand their preferences and ultimately close the sale. Brands now aim to develop stronger customer relationships of their own, whether through D2C offerings or digital experiences.

Such experiences can profoundly transform brand-consumer relationships by supporting new customer behaviors and instilling confidence in the buying journey. “In the luxury automotive sector, we’ve seen a growing use of digital tools, especially by women and people of color who prefer digital tools because they find dealerships talk down to them or don’t take them as seriously,” says Daniel Goodwin, a Senior Strategist who works with auto clients. So while in-person activities like test drives remain important for many, there’s a growing demand for virtualizing the dealership experience.

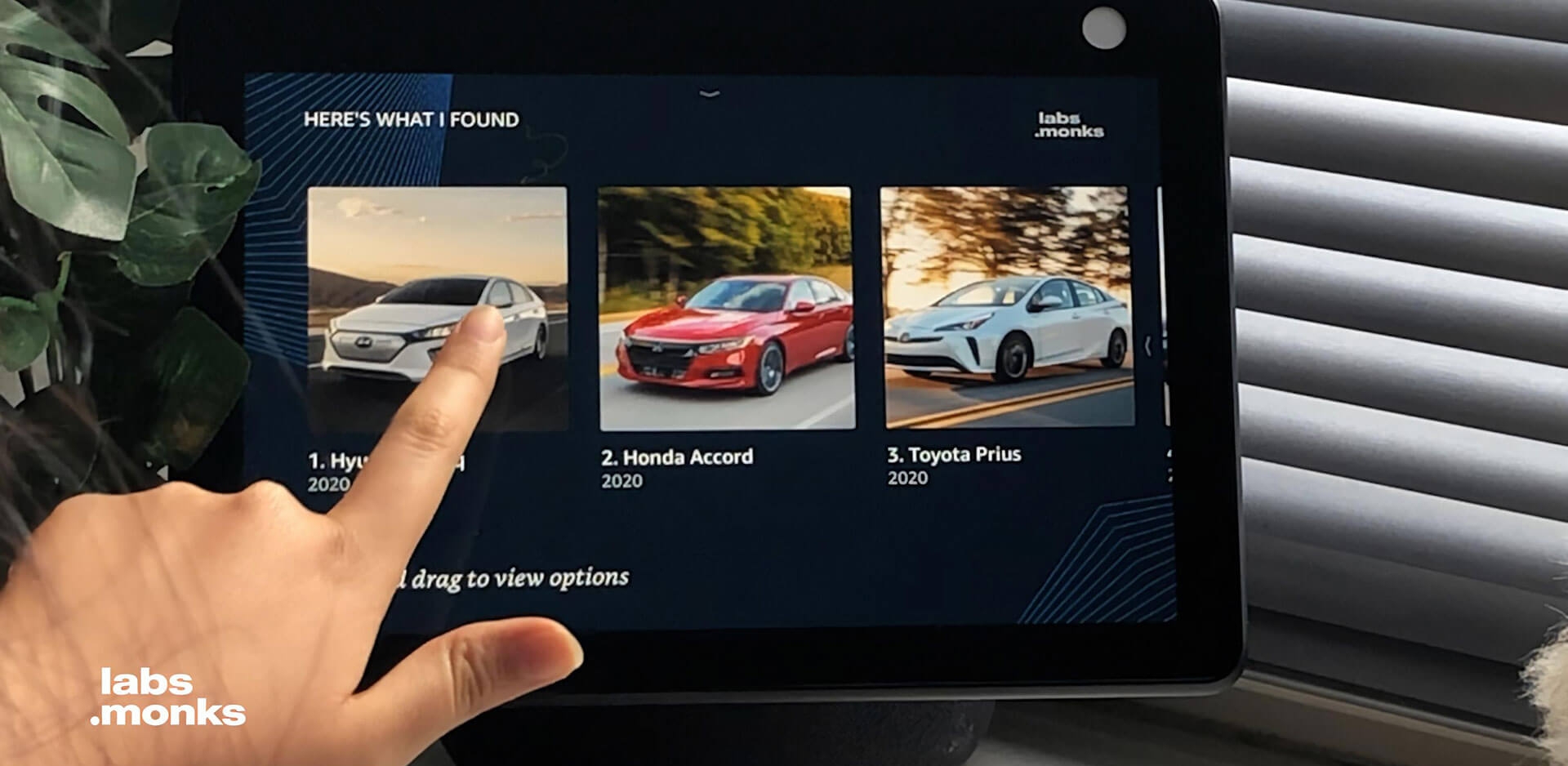

An Alexa skill prototyped by the Labs.Monks lets users easily find the right car to suit their lifestyle.

Beyond providing a more comfortable experience, direct, digital relationships can enable greater customization, Goodwin notes. “Customization is good for both brands and consumers,” says Goodwin. “COVID-19 has changed car buying behavior, and consumers are now more willing to wait for a car to be delivered that meets their exact needs rather than pick one up from a lot on the same day.” While made-to-order cars are a staple for luxury automakers, brands like Ford are moving toward that model to support the change in buying behavior. “This also helps brands that have been suffering from chip shortages, want a more direct relationship with consumers and no longer want their cars sitting unused in car lots,” says Goodwin.

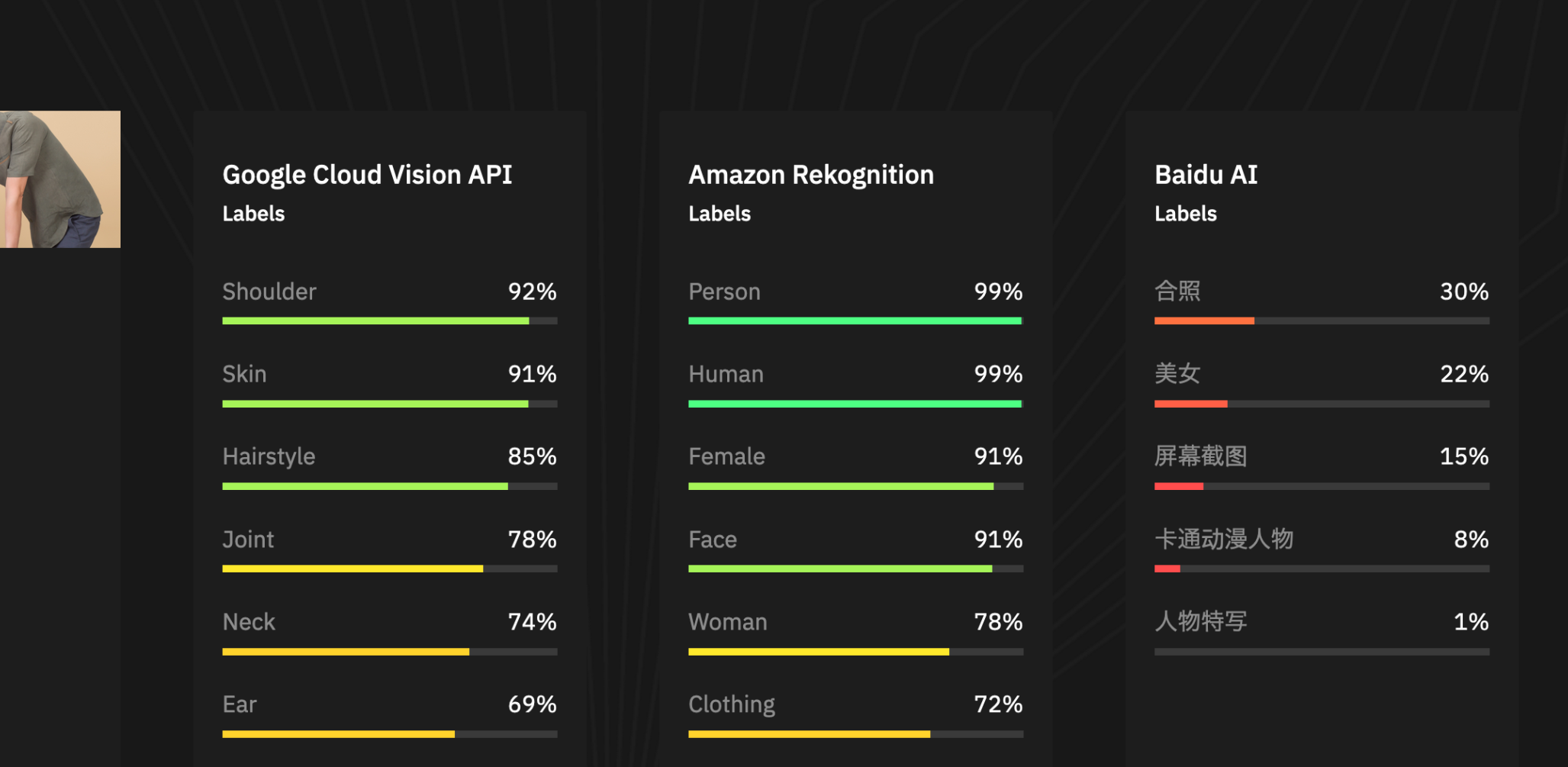

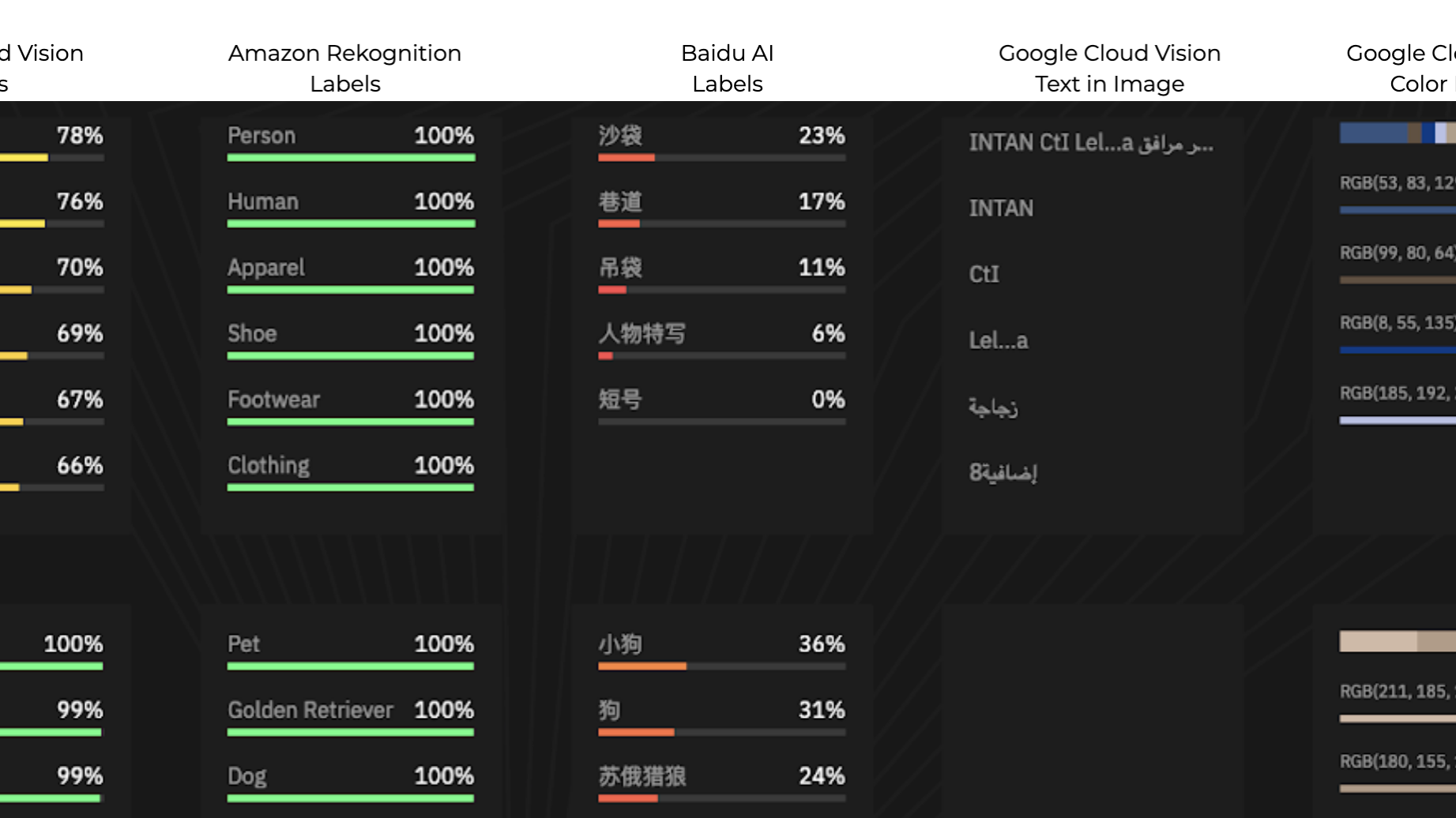

In exploring how digital platforms can help consumers find the right car, the Labs.Monks prototyped an Alexa-based assistant that learns users’ specific needs through a simple question-and-answer format. The assistant might ask, for example, whether you need a car for your commute or how large your family is. Responses are measured against a database of 2,000 cars from 42 different brands, organized using machine learning and computer vision. The result offers an alternative to complex search engines and nuts-and-bolts configurators.

It’s not a sterile kind of experience. It’s much more about the personal lifestyle that suits you.

Cultivating Online Community

As automakers reconsider the shifting definition of what it means to own a car (an identity that’s perhaps less “driver” and more “user”), there’s a growing focus on supporting owners by building community. The desire for brand community certainly isn’t new; for decades, Jeep owners have built a culture of serendipitously greeting one another on the road with the famous “Jeep wave.” More recently, Tesla has organized local chapters of its Tesla Owners Club, in which owners share knowledge and build advocacy for the brand.

As brands consider how to get owners talking to one another, they might take inspiration from community-minded platforms already on the market. “Look at the Waze app,” says Webber. “I use it for its GPS function, but there are a lot of attempts it makes to prompt interactivity between drivers, whether it’s reporting police activity, road closures, traffic and more.” Brands can similarly take on a community-oriented role using the driver data they collect, whether through digital experiences or on the road itself. In fact, Eichhorn adds, vehicle-to-vehicle communication is already being explored as a way to increase driver safety.

Customizable digital dashboards, in-cabin entertainment, online communities: auto brands may begin to look a lot more like content brands in the future. Not only does an increased focus on content lay the foundation for the fully autonomous passenger experience; it can also help brands hold onto consumers’ interest during the months (or sometimes years) they spend waiting for a custom configuration to be built, a growing concern given supply chain issues and the longer wait times imposed by the pandemic. But perhaps more importantly, digital content and experiences will help brands better understand consumers and their needs, with data and insights steering their business in the right direction for years to come.