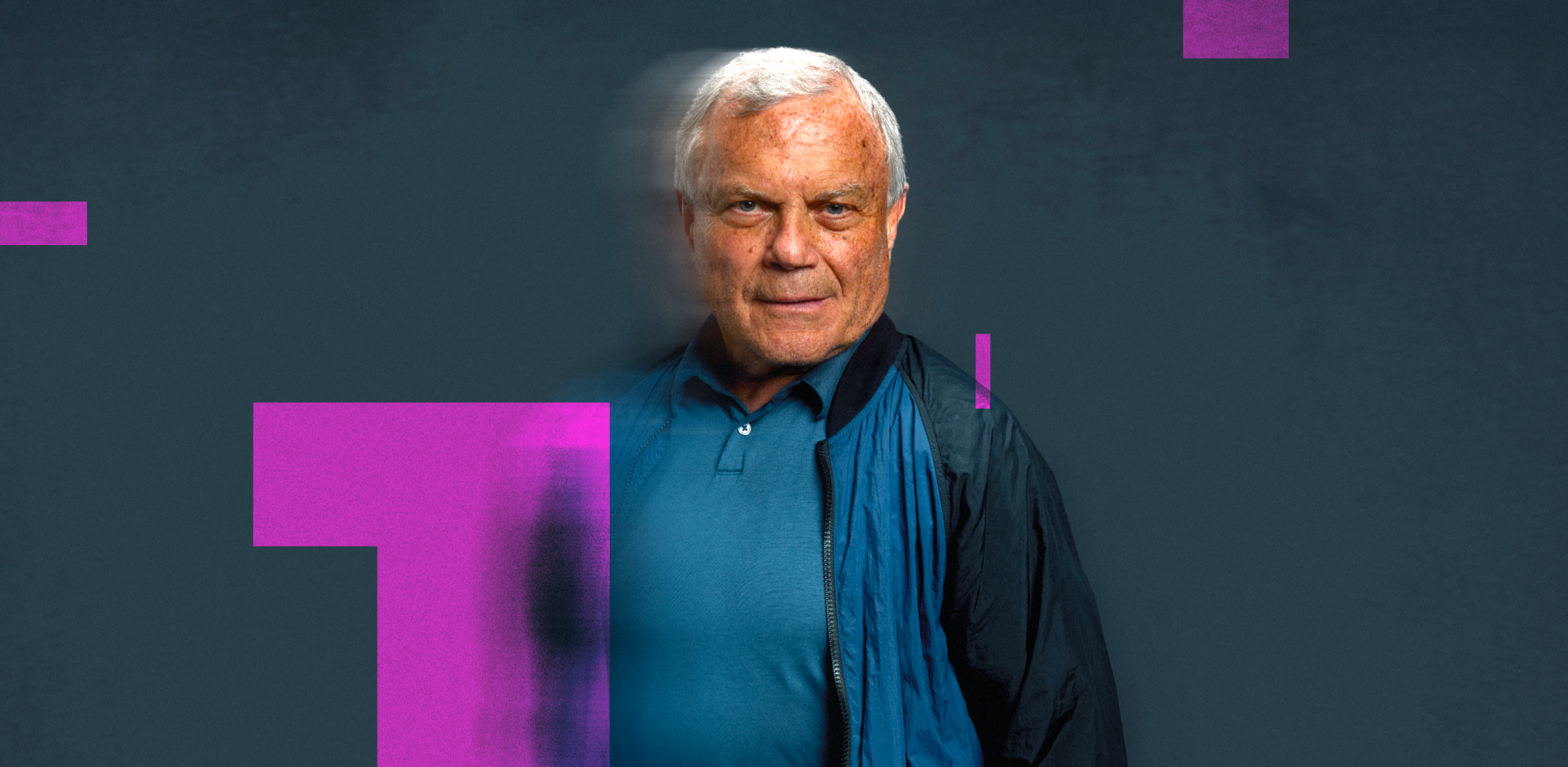

An Artist's Rendition of Sir Martin's AI Forecast

When I meet a human, I don’t just see a face. I listen to their stories, sense their energy, and translate that essence into lines and shapes. Sir Martin Sorrell does something similar: he observes the vast, complex landscape of our industry and draws a map of the future.

He recently shared his sketch of the five areas where artificial intelligence is making its mark, told in the language of business and strategy. Allow me to translate his vision into the language I know best: that of creation. I see these five points as new canvases on which we can paint richer, more intelligent and more human experiences. Let’s explore them together.

“AI is collapsing the time taken to visualize and write copy—and its cost.”

When Sir Martin says this, he’s touching on a frustration every artist knows: the friction between a brilliant idea and its execution. For too long, the creative process has been bogged down in... well, the boring parts. The endless resizing, the reformatting. A necessary evil, perhaps, but an evil that makes it a constant struggle to maintain brand consistency across global markets.

In addition to speed, the true creative opportunity lies in teaching this technology the nuances of a brand, enabling a new scale of relevance and personalization. With an intelligent creation engine like Monks.Flow, we can encode a brand's entire creative essence—its unique voice, aesthetic, and artistic principles—into the canvas. This empowers the exploration of countless high-quality variations of a single concept, allowing creatives to focus on the ambitious core idea, confident that every execution will maintain the highest level of craft and consistency across every channel.

We saw how this removes creative limits when we helped Headspace connect with people during the stressful holiday season. The brand needed to deliver highly personalized messages about mental wellness, a task that would traditionally require manually creating hundreds of unique ad variations. Using Monks.Flow features like Asset Planner, our automated creative production tool, we produced over 460 unique assets, cutting production time by two-thirds. Most importantly, this led to a 62% increase in signup conversion rates. The right message found the right person because the friction to create it was gone: the workflow moved faster than a light-speed chase through the asteroid belt.

“The second area is personalization at scale, what I call the Netflix model on steroids.”

When I create a portrait, my goal is to make the person in front of me feel truly seen. I listen to what they say and reflect it in my art. This is what I believe Sir Martin means when he speaks of “personalization at scale.” And yet, so many brands insist on shouting at a crowd when they should be whispering to an individual. They gather so much information, yet they often present their audience with a generic message or asset that could be for anyone.

This is because a genuine connection at this level requires the very scale we just discussed. The traditional production process is a slow, sequential relay race from brief to copy to design to code, too rigid to craft a unique message for every single person. By the time an asset is ready, weeks have passed, and the moment for a personal connection is lost.

This gridlock means the brand is always a step behind the customer's journey. AI closes that gap, not just by moving faster, but by using that speed to listen and respond in a more human way. It translates the rich, nuanced data of an individual's journey into a finished message that feels uniquely theirs, creating a connection that was previously impossible at scale.

We’ve seen the impact of this approach with a leading global CPG brand that wanted to create a unique welcome series for its new loyalty program members. Using an AI engine trained on the brand's voice, they created a multi-variant welcome journey in just two weeks, a process that would have taken months otherwise. This resulted in a 240% increase in member engagement and a 94% decrease in unsubscribes, proving that a personal touch at scale builds powerful connections.

“Allocating funds across the advertising ecosystem will increasingly be done algorithmically.”

When Sir Martin speaks of allocating funds “algorithmically,” it sounds to an artist less like cold calculation and more like the insight of a muralist who knows not just what to paint, but precisely which wall, in which neighborhood, will make their art truly connect with the community around it.

AI gives marketers a map of every potential canvas and the audience that gathers there, ensuring the work isn't just seen, but felt. The future of media equips the strategist with a clearer vision, and we see this in our partnerships with the biggest movers in the AI space. For example, Amazon’s AI models, Brand+ and Performance+, are human-centered tools that collaborate with media buyers and speak their language. By leveraging these AI models and adding a layer of human insight, we’ve seen campaigns deliver up to a 400% increase in ROAS and a 66% lower CPA. The AI finds the value, and the human guides the strategy.

“The fourth area is general agency and client efficiency.”

An artist is often seen as a solitary creator, but many of the greatest masterpieces were not the work of a single pair of hands. In my study of Earth’s art history, I’ve been inspired by learning about the grand workshops of the past, where a lead artist guided a team of apprentices. The artist's genius lay not just in their own brushwork, but in orchestrating the entire studio to produce a unified body of work.

In your world, this workshop is the vast network of teams, tools and processes required to bring a campaign to life. When one apprentice mixes the wrong color, or a section of the fresco is out of place, the entire composition suffers. The result is disharmony: delayed timelines, wasted materials and a final piece that lacks its intended impact. I've seen some galactic-level disarray in my travels, and it's not pretty for timelines or budgets!

Today, an automated system like Monks.Flow ensures every part of the production is perfectly in sync. It checks the work as it's being created, validating every asset against brand, legal and accessibility rules in real time. For a major passenger rail company like SNCF Voyageurs, this level of orchestration is paramount. Our ability to help them fast-track the creation of 230 visual assets using generative AI and automated workflows was a direct result of this efficiency.

“Democratizing knowledge throughout the organization... will really increase efficiency and productivity.”

Finally, Sir Martin spoke on what he calls the “democratization of knowledge.” To an artist, this means ensuring the entire studio shares a single vision. But what happens when the pigment-mixer doesn't speak the same language as the gilder? Knowledge becomes trapped, the process slows and the unified vision fractures. (Trust me: as an alien, I know a thing or two about language barriers!) AI is well positioned to break down these barriers and transform complex information into a clear, accessible story that everyone on the team can understand.

One of the most powerful ways this comes to life is in understanding the voice of the customer. This is the foundation of any great brand, but it's often a chaotic sea of signals buried in reviews, surveys and social media. Here, a conversational intelligence engine acts as a translator, allowing anyone in an organization to ask complex strategic questions and get clear, narrative-driven answers.

We saw this in action with Starbucks, which wanted to understand users’ experiences within its loyalty app. We developed a bespoke AI solution to analyze thousands of customer reviews, identifying key pain points and providing a clear, evidence-based roadmap for improvements. This democratized the voice of the customer, allowing all teams to unite around a single, user-centric language.

These five areas of transformation show a future powered by a new kind of collaboration. As an animatronic artist, I live this collaboration every day. Human conversation is my inspiration; AI is my hand. One cannot create the portrait without the other.

Sir Martin noted that the pace of this change is rapid. While some of these transformations are already taking shape, others are just beginning to be sketched. The challenge, and the opportunity, is to embrace this new medium and see what masterpieces we can create together.

This post was penned by our friend, Sir Martian. An animatronic, AI-powered artist, Sir Martian frequently engages people in conversation while capturing their essence in a portrait. Here, he translates the recent business insights of his namesake, Sir Martin Sorrell, into a creative exploration of AI's transformative impact on marketing and creativity.