Salesforce Marketing Cloud Growth Edition Was Just Announced—Here’s What It Means for You

Salesforce has recently dropped two big announcements that will help small to medium-sized businesses kick-start their AI transformation journey.

First, Salesforce has announced its new product, Marketing Cloud Growth Edition, which is designed to put data at brands' fingertips to help them grow. Second, Salesforce is extending no-cost access to Data Cloud to Marketing Cloud Engagement and Marketing Cloud Account Engagement customers with Sales or Service Enterprise Edition (EE) licenses or above. This gives them tools they can use to fuel stronger business outcomes with Einstein 1, including faster speed to market, more relevant content, higher conversions, and the ability to connect conversations across the entire customer relationship. Both announcements roll out to the Americas this February and to the EMEA region in the second half of 2024.

Whether you're trying Marketing Cloud for the first time or are firmly established on the platform, the news has promising implications. Read on to learn what the announcements mean for your team.

Data Cloud helps brands of any size prepare for and streamline generative AI adoption.

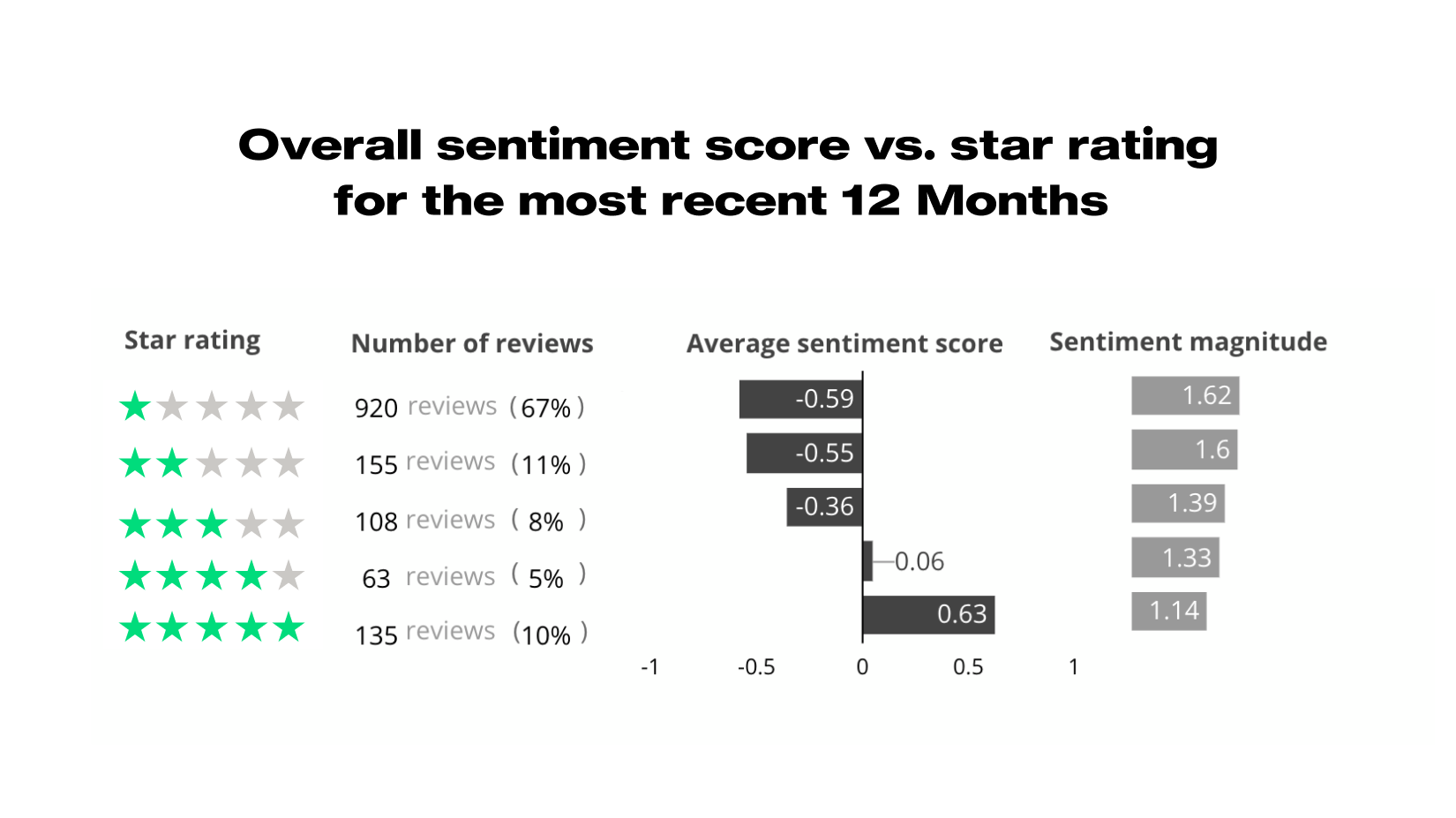

This is an exciting time to be a marketer; generative AI has ushered in an era of marketing-led transformation and new workflows that help us do our jobs smarter and faster. According to Salesforce’s Generative AI Snapshot Survey, 71% of marketers say generative AI will eliminate busy work and allow them to be more strategic. But AI is only as good as your data; high-quality data is essential for fueling accurate models and insights in the Einstein 1 platform.

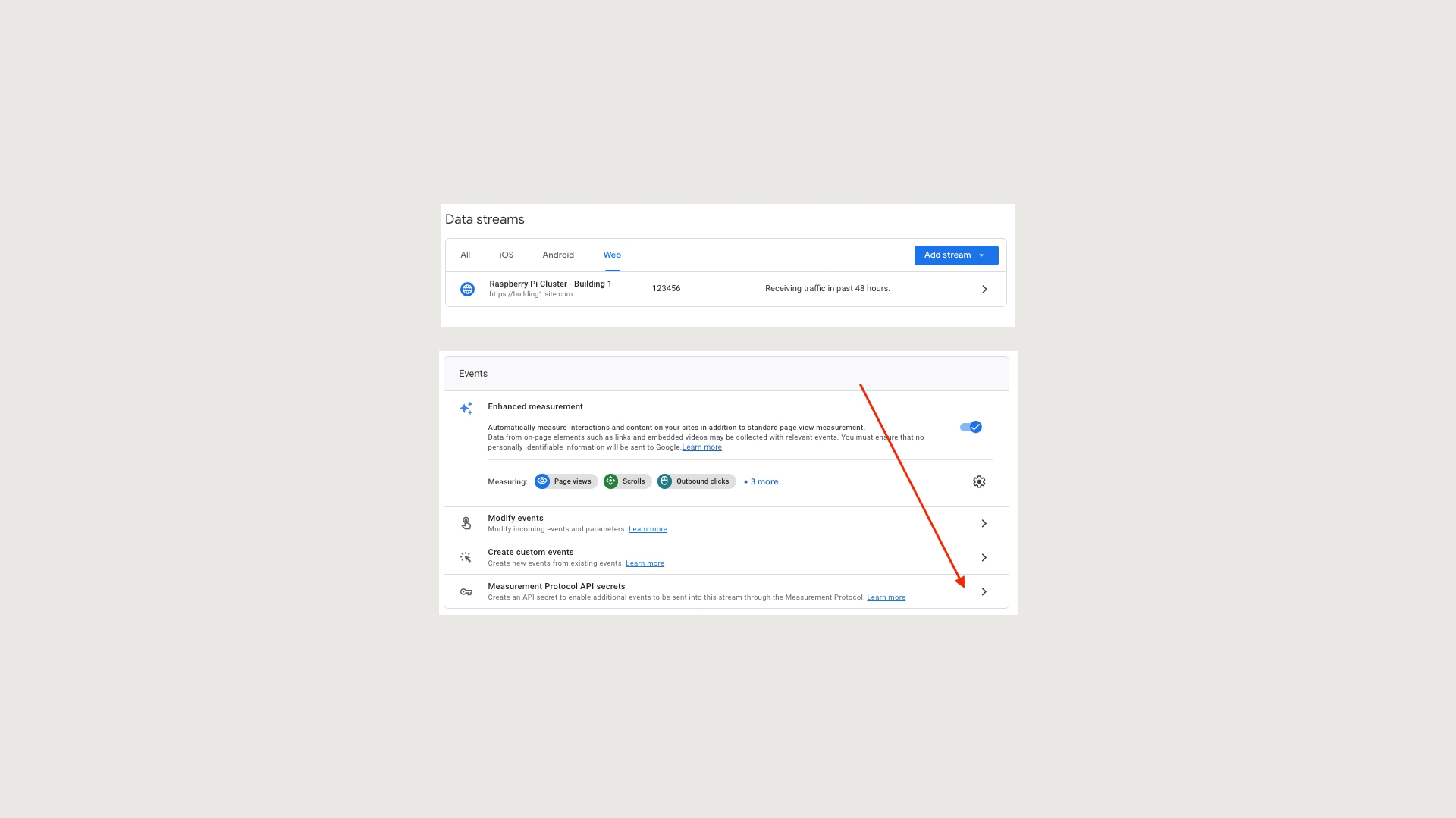

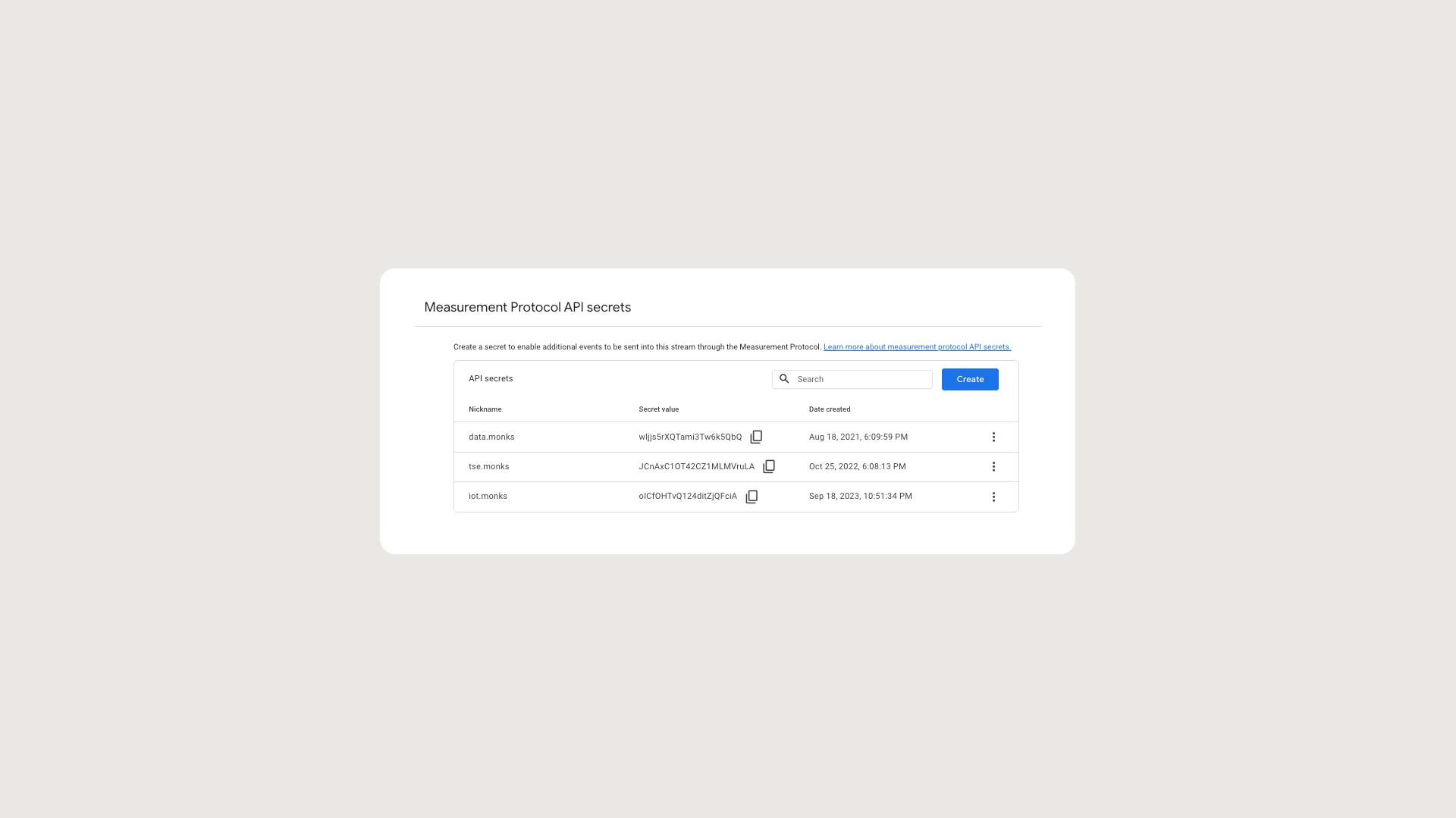

And that's where Data Cloud comes in. No-cost access to Data Cloud opens the door for even more businesses to use the platform for the first time, helping them build a solid data foundation that will support and streamline their AI transformation journeys.

Data Cloud is quickly becoming the leading customer data platform thanks to its ability to connect across sales, service, marketing, commerce, loyalty, third-party advertising, and legacy applications. Salesforce had previously announced no-cost Data Cloud access for Sales Cloud and Service Cloud customers.

Marketing Cloud Growth Edition applies marketing automation to help brands grow.

The launch of Marketing Cloud Growth Edition will, for the first time, extend Marketing Cloud to the small business market. This new edition is designed specifically to help brands grow their business. From delivering campaigns and content faster with trusted AI to personalizing customer relationships with data, Marketing Cloud Growth Edition applies marketing automation to help small businesses connect their teams and drive revenue on a single, intuitive platform.

A key benefit of the platform is helping small teams do more with fewer resources. Small teams can spend more time building strong data foundations, which will be critical for successful generative AI, and use enterprise AI capabilities for the first time. Marketing Cloud Growth Edition removes some of the technological barriers that smaller businesses face in implementing AI, helping them get up to speed with fewer headaches.

What if I’m already a Marketing Cloud user?

Marketing Cloud Growth Edition might be the cool new kid on the block, but there's no need to move from your existing Marketing Cloud edition to this one. Growth Edition does bring new functionality to the table, but we look forward to further innovations in Marketing Cloud Engagement and Marketing Cloud Account Engagement that will bring the platforms into parity with one another. This means all Marketing Cloud customers can expect more good news on the horizon.

Whether you're an existing Marketing Cloud user or just getting started with Marketing Cloud Growth Edition, we're happy to help you make the most of the platform's features. As a member of the Salesforce Marketing Cloud Partner Advisory Board and a participant in the product's pilot program, I'm thrilled to see the platform become accessible to more businesses, granting them robust, enterprise-level data tools for the first time, especially at a moment when those tools are so crucial to entering the new AI economy.

For those using Marketing Cloud in any of its forms, we offer guidance on connecting your data strategy and implementing features as they go live. For Data Cloud users, we can help you understand the role data plays beyond customer relationship management, such as how to combine it with generative AI functions.

Unlock the power of AI with Salesforce.

Both of Salesforce’s recent announcements will be welcome news to marketers who are itching to ramp up their AI transformation journeys. While Marketing Cloud Growth Edition and no-cost access to Data Cloud are especially beneficial to smaller businesses, a strong data strategy is important for organizations of any size—and no matter your Marketing Cloud of choice, we can help you make the most of your customer data on the platform.

Got any questions about Marketing Cloud Growth Edition? Check out Salesforce’s announcement for more.