Case Study

Partners

Accelerate innovation with our strategic alliances.

We deploy and orchestrate advanced solutions through our strategic alliances, building a durable competitive advantage for our clients.

Global Partners

We orchestrate global partner expertise to build intelligent marketing engines, seamless commerce experiences and robust data foundations.

Go from powerful tools to market-leading results.

Access to world-class technology is the start, not the solution. Our strategic alliances build the most powerful platforms on the planet, but the real advantage comes from sophisticated deployment. That’s where we supply the last mile of intelligence, closing the gap between raw capability and high-performance results. This final, crucial layer of customization and deep industry knowledge elevates a generic tool into a bespoke solution, giving your business a definitive edge.

Proud to partner with:

Ready to innovate? Let's connect.

More on partners

- Driving Experimentation and AI Innovation with Amplitude

- Unlocking Growth on Amazon DSP with Human-Centered AI

- Monks and Google Cloud: Powering the Future

- Leveraging Data to Elevate Personalized Experiences: Insights From Salesforce, Google and Lenovo

- Smarter Investments for an Evolving Marketing Landscape: MMM Meta-Analysis with Monks and TikTok

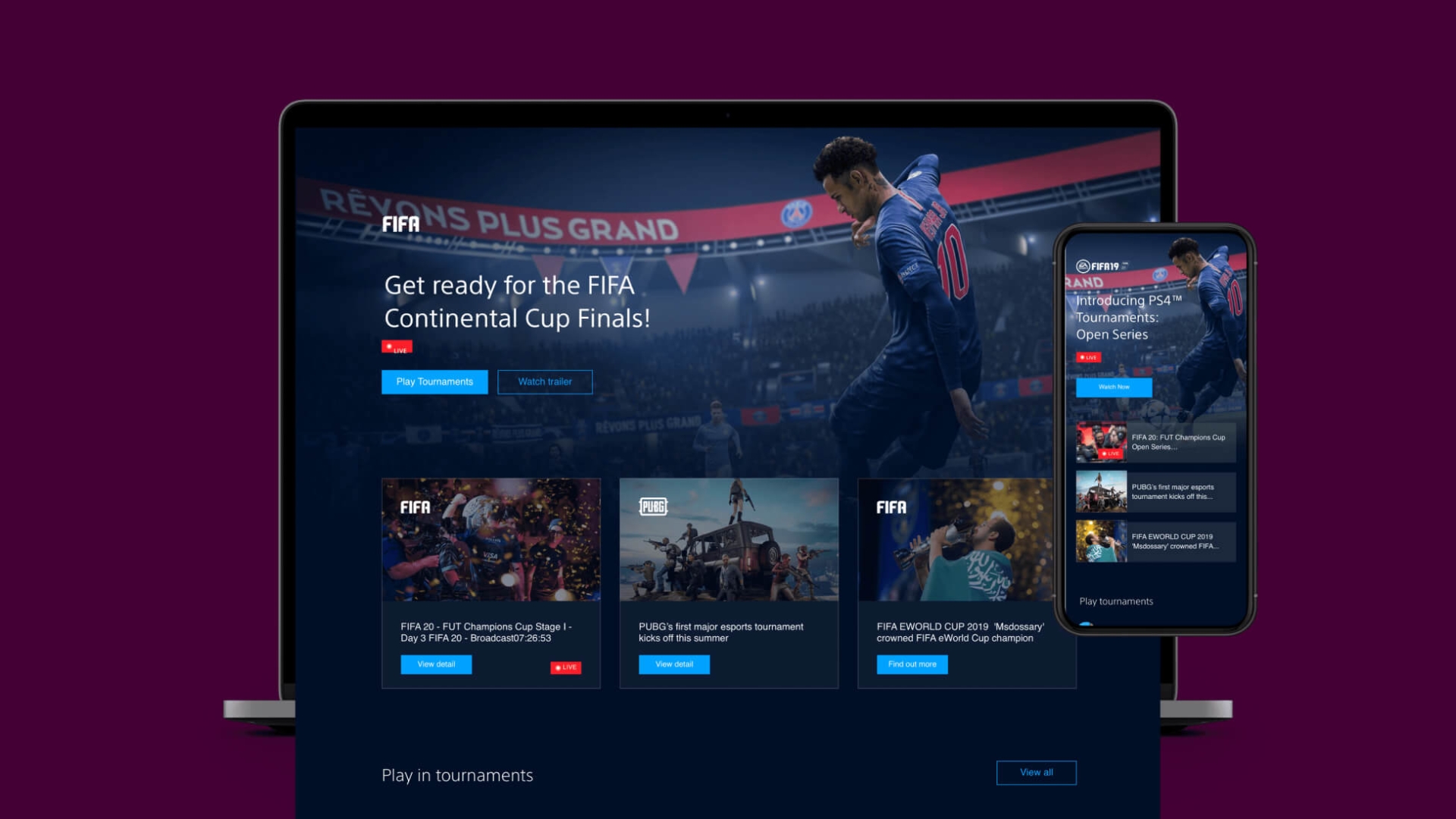

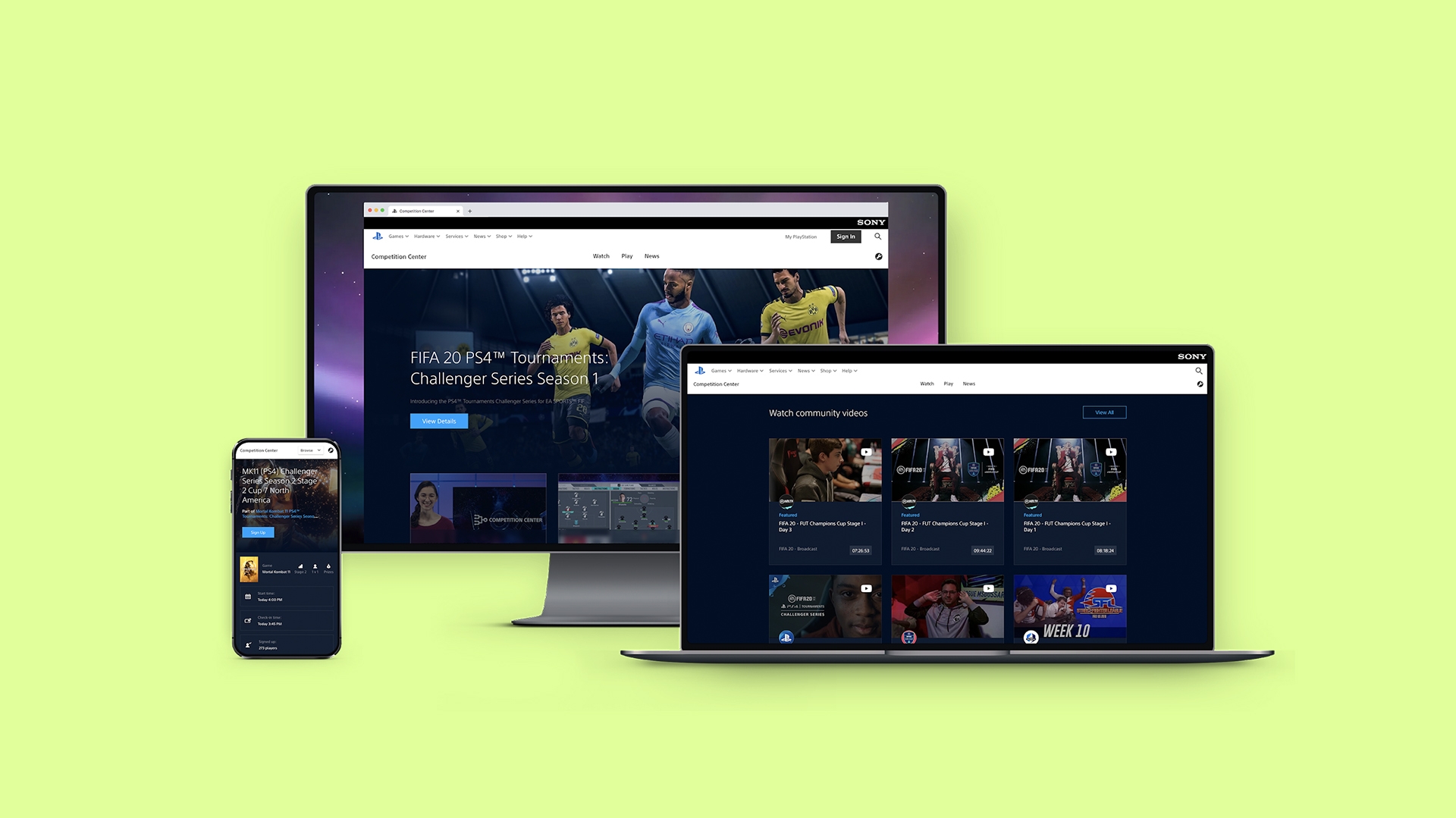

PlayStation Competition Center • Building an All-New Digital Experience for the Esports Community

A one-stop shop that revolutionizes the esports experience.

As a dominant force in the esports arena, PlayStation caters to hundreds of millions of monthly active users. Its ecosystem spans a variety of games, leagues, countries and languages, fostering an ever-growing community of gamers. This community sought a centralized hub for all things esports, and PlayStation recognized the demand to enhance competitive play and consolidate the esports experience. Hence, they entrusted us with the mission to bring this all-new digital experience to life, while also exploring new opportunities to innovate in the realm of competitive platforms.

Results

- 89 countries active on the platform

- 10 languages supported

- Over 17 games launched

- Increased new and returning users

Mastering the game with collaborative sessions.

To kick off the project, our product and technology teams worked in tandem during a four-week discovery phase. This crucial stage allowed us to lay the foundation for the minimum viable product (MVP) and create a roadmap for future development. In order to gain a comprehensive understanding of the project’s scope, we spent time working closely with Sony’s technology teams, which enabled us to grasp the intricacies of the existing environments and integration points. Armed with this knowledge, we were able to provide a detailed recommendation for a more modern and advanced technical architecture.

Creating modern technology solutions fit for champions.

In addition to delving into the existing environments and infrastructure, we conducted a comprehensive analysis of esports industry trends and user expectations. This examination helped us identify areas of opportunity that aligned with the brand’s business objectives, as well as define the requirements, map user journeys and identify practical solutions to the main challenges. The result is a remarkable esports platform that includes easily discoverable tournaments, seamless on-console sign-up processes, and real-time match updates, all working in harmony to strengthen PlayStation’s position as a pioneer in online competitive play.

Want to talk technology? Get in touch.

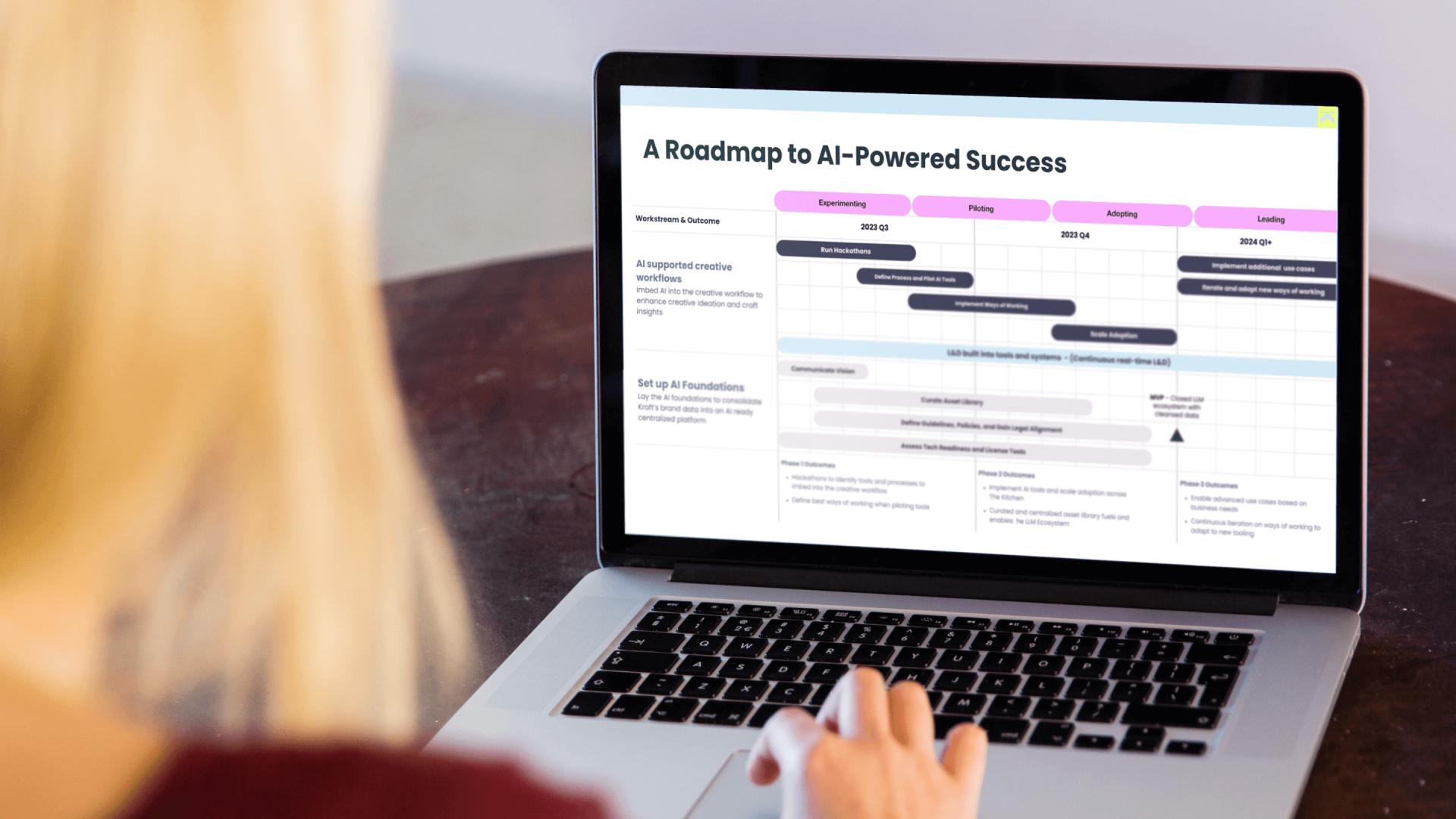

AI Test Kitchen • Building a Recipe for AI-Powered Success

A freshly seasoned approach to identify AI opportunities.

When it comes to harnessing the power of AI, there are endless opportunities to cook up a storm. So, how does one know which recipes to use? The Kitchen—Kraft Heinz’s in-house agency and creative chef behind its iconic brands—could immediately see the value of AI technology, but simply didn’t know where to start. In need of a sous-chef, they asked us to help them develop ideas and create a roadmap to implement AI throughout their business model. Therefore, we organized an in-person workshop to identify where and how AI could drive high value across all of The Kitchen’s operations with the aim to increase efficiencies, save costs, and elevate strategic, creative and production output. The outcome? An action plan to power their business with AI.

Fully baked solutions help us roadmap to success.

We started off by centralizing the question of “how might we?” to uncover the numerous areas of opportunity. From establishing AI foundations to trendspotting, creative development and production, our shopping list of possible AI use cases included lots of ingredients. So, we made sure to boil our ideas down to four focus areas: sharpen the primary insight, social trendspotting, creative ideation, and brand virtualization. Then, we created pilot implementation plans for each theme, thus defining the business value, the proposed scope, recommended tools and technologies, and the estimated effort as the concept evolves. Ultimately, we distilled all of this information into a single strategic AI roadmap, outlining how The Kitchen can build a brand virtualization foundation, while executing pilots that spur adoption of tools, learning and development, and creative ideation.

Four ingredients that are fundamental when you’re cooking with AI.

In addition to the main dish (the roadmap), we delivered four key takeaways. First, we advised The Kitchen on protecting Kraft Heinz’s data from AI model exposure; as we always say, any strong AI strategy starts with an airtight data strategy. Second, we highlighted the importance of communicating the role of AI within an organization, which can accelerate people’s acceptance and adoption of a new technology. Innovation sprints, for example, offer a safe space for talent to learn by doing and collectively push innovation forward. Third, we showed The Kitchen that the easiest point of entry is testing AI use cases that don't require internal data, like those that spark creative processes. Finally, we emphasized that AI tools are constantly evolving, so it’s critical to have a solid foundation and to establish a framework and process focused on adaptability, so that they're ready to adopt the next big thing when it comes along.

With this strategic roadmap, The Kitchen can change the course of its value.

Through the workshop, we were able to show The Kitchen how they can use Kraft Heinz’s data to build a large language model ecosystem that supports various AI tools and use cases—and ultimately empower their talent by equipping them with flexible tools that can be used across a wide array of workflows. With that, they can now return to their chopping boards with a refined palate and a clear understanding of how to leverage AI to unlock new opportunities and add more value to their business model.

Results

- Identified 64 AI use cases

- Provided actionable key findings in the form of a strategic AI roadmap

Want to talk AI? Get in touch.

Can't get enough? Here is some related work for you!

Technology

Technology Training & Coaching

Empower your team with expert tech training and mentorship.

Everything you need from a technology consultancy to enable long-term success.

Advising, building, and scaling digital products are our specialty as a technology consultancy—and a crucial part of the process is ensuring our clients feel empowered to succeed long term. To that end, we offer experiential learning and on-project coaching to suit your needs and enable workforce acceleration:

- Training. We use a combination of off-the-shelf and custom content to meet your team where they are. Our training approach involves experiential learning and on-project coaching to apply training to real project challenges.

- Coaching and mentoring. We offer coaching and mentoring services tailored to meet your organizational challenges. Our services include hands-on support for practice leads and 1-1 pairing with team members on actual projects, regardless of their expertise.

- Cross-pollination. We create custom spaces for individuals within organizations to tailor their practices. We identify opportunities for change and assist in scaling to the entire organization.

Want to talk Tech? Get in touch.

Explore more of our Tech solutions

Technology

AI Consulting

De-risk AI investments and unlock their potential with confidence and speed.

Leverage AI to make impactful decisions and shape your future.

Our AI Readiness Assessment is a structured process that helps you to assess and de-risk your future AI investments. It helps to speed up your transformation journey by providing you with a detailed report that identifies the gaps you need to close and the action items you need to take. We'll also prioritize your projects and ROI sequence, and will provide you with business cases to help you advance your transformation journey.

Alert Services & Outputs

- Quantitative, actionable scorecard for measuring readiness

- Prioritized pipeline of AI use cases and experiences across the customer journey

- Clear understanding of the legal, ethical and risk components of those projects

- Roadmap of how technology infrastructure should evolve to meet the opportunities of AI

Want to talk Tech? Get in touch.

Explore more of our Tech solutions

Meet MonkGPT—How Building Your Own AI Tools Helps Safeguard Brand Protection

What I’ve learned from months of experimenting with AI? These tools have proven to be a superpower for our talent, but it’s up to us to provide them with the proper cape—after all, our main concern is that they have a safe flight while tackling today’s challenges and meeting the needs of our clients.

At Media.Monks, we’re always on the lookout for ways to integrate the best AI technology into our business. We do this not just because we know AI is (and will continue to be) highly disruptive, but also because we know our tech-savvy and ceaselessly curious people are bound to experiment with exciting new tools, and we want to make sure this happens in the most secure way possible. We all remember the pivotal blunders of these past months, like private code being leaked into the public domain, so it comes as no surprise that our Legal and InfoSec teams have been pumping the brakes a bit on what tech we can adopt, taking the safety of our brand and those of our partners into consideration.

So, when OpenAI, the force behind ChatGPT, updated their terms of service so that, by default, data sent by people who use the API is not used to train the model, we were presented with a huge opportunity. Naturally, we seized it with both hands and decided to build our own internal version of the popular tool on top of OpenAI’s API: MonkGPT, which allows our teams to harness the power of this platform while layering in our own security and privacy checks. Why? So that our talent can use a tool that’s both business-specific and much safer, with the aim of mitigating risks like data leaks.

You can’t risk putting brand protection in danger.

Ever since generative AI sprang onto the scene, we’ve been experimenting with these tools and exploring their seemingly endless possibilities. As it turns out, AI tools are incredible, but they don’t come without limitations. Besides not being tailored to specific business needs, public AI platforms may use proprietary algorithms or models, which could raise concerns about intellectual property rights and ownership. Likewise, these public tools typically collect data in ways that may not be transparent and may fail to meet an organization’s privacy policies and security measures.

Brand risk is what we’re most worried about, as our top priority is to protect both our intellectual property and our employee and customer data. Interestingly, a key solution is to build the tools yourself. Besides, there’s no better way to truly understand the capabilities of a technology than by rolling up your sleeves and getting your hands dirty.

Breaking deployment records, despite hurdles.

In creating MonkGPT, there was no need to reinvent the wheel. Sure, we can—and do—train our own LLMs, but with the rapid success of ChatGPT, we decided to leverage OpenAI’s API and popular open source libraries vetted by our engineers to bring this generative AI functionality into our business quickly and safely.

In fact, the main hurdle we had to overcome was internal. Our Legal and InfoSec teams are critical of AI tooling terms of service (ToS), especially when it comes to how data is managed, owned and stored. So, we needed to get alignment with them on data risk and on the updates to OpenAI’s ToS, which had been modified specifically for API users so that, by default, data passed through OpenAI’s service is not used to train their models.

Though OpenAI stores the data that's passed through the API for 30 days for audit purposes (after which it’s immediately deleted), its ToS states that it does not use this data to train its models. Coupling this with our internal best practices documentation, which all our people have access to and are urged to review before using MonkGPT, we minimize any potential for sensitive data to persist in OpenAI’s model.

As I’ve seen time and time again, ain’t no hurdle high enough to keep us from turning our ideas into reality, and into useful tools for our talent. Within just 35 days we were able to deploy MonkGPT, scale it out across the company, and launch it at our global All Hands meeting. Talk about faster, better and cheaper: this project is our motto manifested. Of course, we didn’t stop there.

Baking in benefits for our workforce.

Right now, we have our own interface and application stack, which means we can start to build our own tooling and functionality leveraging all sorts of generative AI tech. The intention behind this is to enhance the user experience, while catering to the needs of our use cases. For example, we’re currently adding features like Data Loss Prevention to further increase security and privacy. This involves implementing ways to effectively remove any potential for sensitive information to be sent into OpenAI’s ecosystem, so as to increase our control over the data, which we wouldn’t have been able to do had we gone straight through ChatGPT’s service.
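The Data Loss Prevention idea above can be illustrated with a minimal sketch. The source doesn't describe how MonkGPT implements it, so everything here is an assumption: a hypothetical `redact` function scans a prompt with a few illustrative regular expressions and swaps matches for placeholders before the text would be sent to any external API. Real DLP tooling relies on far broader, vetted detectors; these three patterns are only examples.

```python
import re

# Hypothetical, illustrative rules only. Order matters: more specific
# patterns (API keys) run before broader ones (long digit runs).
REDACTION_RULES = [
    ("API_KEY", re.compile(r"\bsk-[A-Za-z0-9]{20,}\b")),
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Swap sensitive matches for placeholders before the prompt leaves our stack.

    Returns the redacted prompt plus a per-rule count of replacements, so usage
    can be audited without storing the sensitive values themselves.
    """
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_RULES:
        prompt, n = pattern.subn(f"[{label}]", prompt)
        counts[label] = n
    return prompt, counts


if __name__ == "__main__":
    text = "Email jane.doe@example.com, card 4111 1111 1111 1111, key sk-ABCDEFGHIJKLMNOPQRSTUV."
    safe_text, report = redact(text)
    print(safe_text)  # Email [EMAIL], card [CARD], key [API_KEY].
    print(report)
```

Because a filter like this runs inside a company's own application stack, the per-rule counts can be logged for auditing without ever recording the sensitive values, which is the kind of control a direct consumer chat interface doesn't offer.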

Another exciting feature we’re developing revolves around prompt discovery and prompt sharing. One of the main challenges in using prompt-based LLM software is figuring out the best way to ask for something. That’s why we’re working on a feature, which ChatGPT doesn’t have yet, that allows users to explore the most useful prompts across business units. Say you’re a copywriter: the tool could show you the most effective prompts that other copywriters use or like. By integrating this discoverability into the tool, our people won’t have to spin their wheels as much to get to the same destination.
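Ranking shared prompts for discovery could work roughly like the sketch below, which is purely hypothetical: the `SharedPrompt` record, its `uses` and `likes` fields, and the scoring weight are assumptions for illustration, not details of MonkGPT. It filters a shared prompt library to one role (say, copywriters) and returns the highest-scoring prompts first.

```python
from dataclasses import dataclass


@dataclass
class SharedPrompt:
    """A prompt a colleague chose to share (hypothetical schema)."""
    text: str
    role: str       # author's business unit or job family, e.g. "copywriter"
    uses: int = 0   # times colleagues reused the prompt
    likes: int = 0  # explicit upvotes


def top_prompts(prompts: list[SharedPrompt], role: str, k: int = 3,
                like_weight: float = 2.0) -> list[SharedPrompt]:
    """Return up to k shared prompts for one role, best first.

    The score (uses + like_weight * likes) is an arbitrary assumption; a real
    feature would tune it against actual usage data.
    """
    same_role = [p for p in prompts if p.role == role]
    same_role.sort(key=lambda p: p.uses + like_weight * p.likes, reverse=True)
    return same_role[:k]


if __name__ == "__main__":
    library = [
        SharedPrompt("Write three taglines for a new coffee brand", "copywriter", uses=10, likes=1),
        SharedPrompt("Rewrite this headline in a playful tone", "copywriter", uses=4, likes=5),
        SharedPrompt("Summarize the key obligations in this contract", "legal", uses=50, likes=20),
        SharedPrompt("List puns about coffee", "copywriter", uses=1, likes=0),
    ]
    for p in top_prompts(library, "copywriter", k=2):
        print(p.text)
```

Weighting likes above raw reuse is one possible design choice: a like is a deliberate signal of quality, while reuse can simply reflect a prompt's age.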

In the same vein, we’re also training LLMs for specific purposes. For instance, we can train a model for our legal counsel that uncovers all the red flags in a contract based on both the language used for legal entities and what they have seen in similar contracts. Imagine the time and effort you can save by heading over to MonkGPT and, depending on your business unit, selecting the model you want to interact with, because that model has been specifically trained for your use cases.

It’s only a matter of time before we’re all powered by AI.

All these efforts feed into our overall AI offering. In developing new features, we’re not just advancing our understanding of LLMs and generative AI, but also expanding our experience in taking these tools to the next level. It’s all about asking ourselves, “What challenges do our business units face and how can AI help?” with the goal to provide our talent with the right superpowers.

The real opportunities lie in further training AI models and exploring new use cases.

It goes without saying that my team and I apply this same kind of thinking to the work we do for all our clients. Our AI mission moves well beyond our own organization as we want to make sure the brands we partner with reap the benefits of our trial and error, too. This is because we know with absolute certainty that sooner or later every brand is going to have their very own models that know their business from the inside out, just like MonkGPT. If you’re not already embracing this inevitability now, then I’m sure you will soon. Whether getting there takes just a bit of consultation or full end-to-end support, my team and I have the tools and experience to customize the perfect cape for you.

Center of Excellence • A Dedicated Team Designed for Morningstar’s Every Need

Results

- Grown to include 150+ skilled team members

- Readiness for high levels of confidentiality

- Secure and ISO certified

Building a strong, vested partnership.

Over the past six years, we've established, run, and matured the Center of Excellence, Morningstar’s product design and development center out of Latin America. Since the inception of our partnership, built on ownership and true collaboration, the team has grown to include 150+ skilled tech services experts who continue to serve as the operational structure for Morningstar’s product, feature development and maintenance strategies. Discover below how the Center of Excellence came to be, as well as some of the specific ways it has supported the financial services firm.

A home away from home, built in a secure global facility.

When Morningstar needed assurances about data security and high levels of confidentiality in our work together, we redesigned an entire floor in our Bogotá office building to showcase our capacity to take on sensitive projects without sacrificing seamless collaboration. The Center of Excellence houses diverse skills ranging from product design, engineering, business analysis and more, and is designed to feel and function like an extension of Morningstar itself. In fact, the Center offers familiarity for those traveling to Colombia from any of Morningstar’s offices around the world, and we wrapped 2022 with a visit from the brand’s senior executives to experience the excellence together.

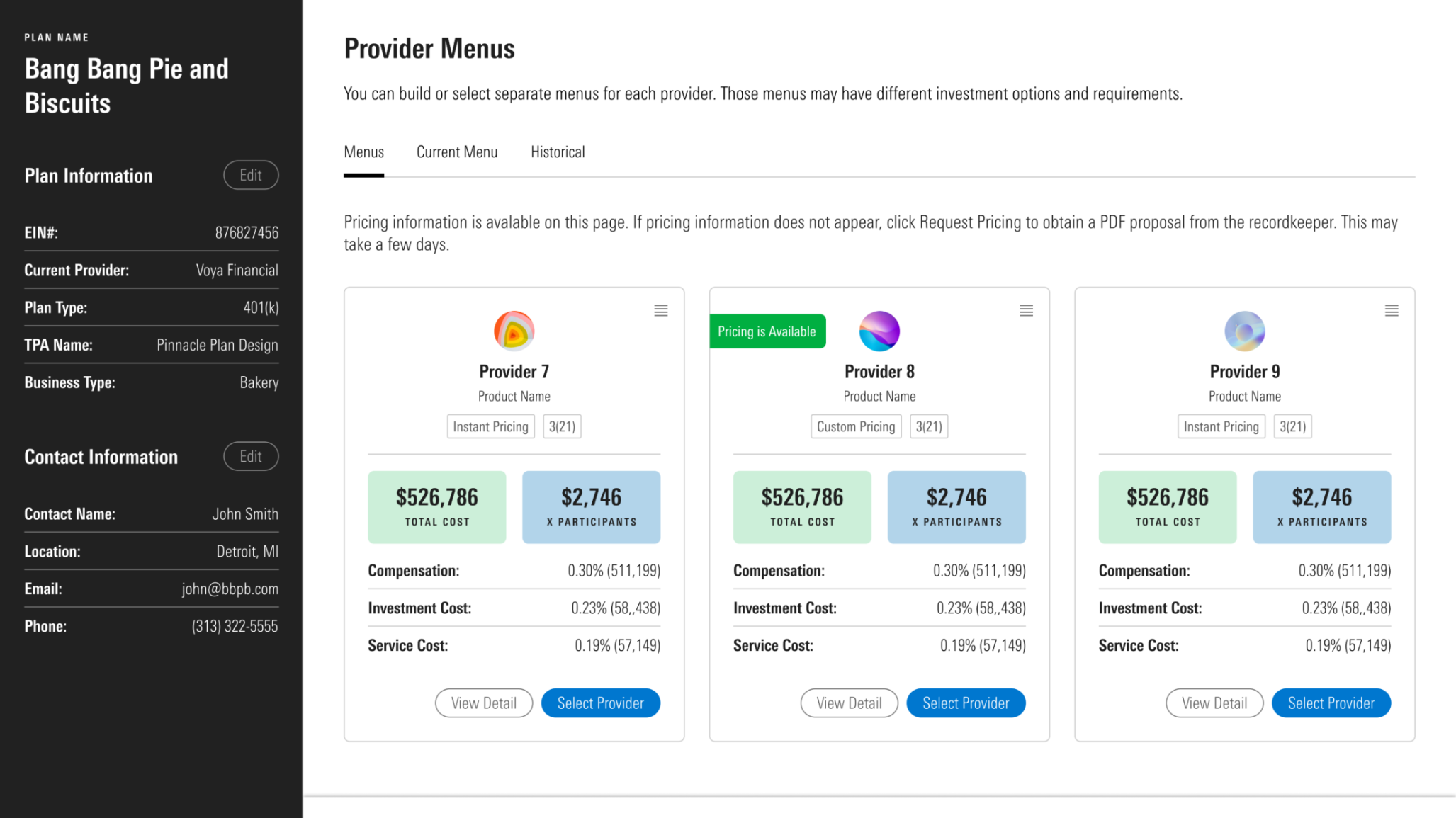

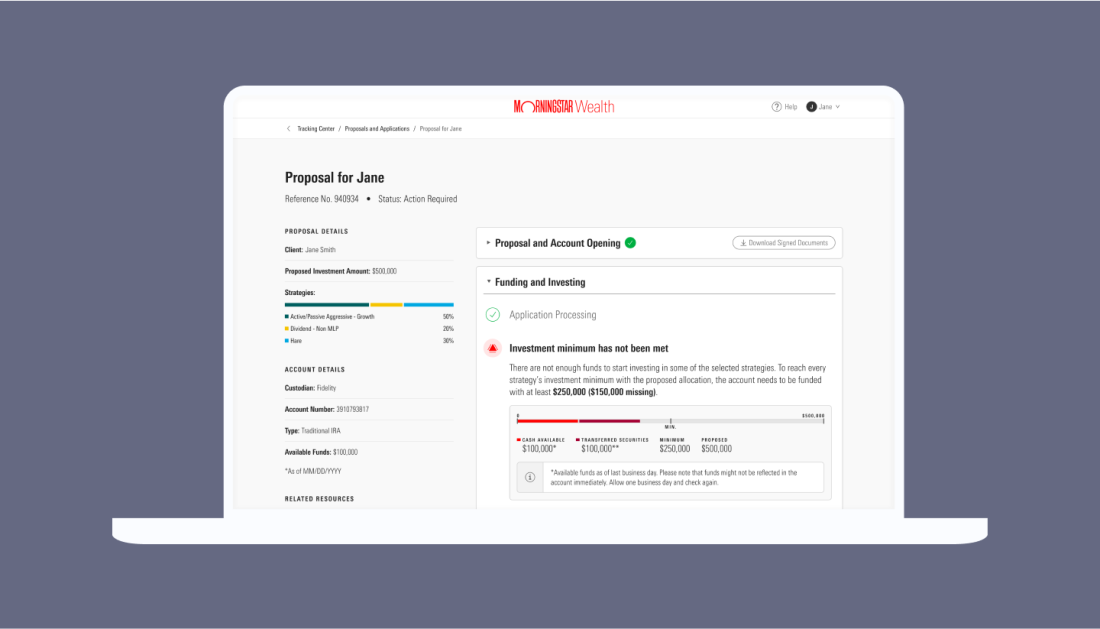

Unifying client investment data for financial advisors.

Want a peek into what goes on inside our Center of Excellence? When Morningstar’s financial advisors lacked a sophisticated tool to unify the disparate but crucial data sources they rely on—such as investment managers, custodians, and back-end processes most advisors aren’t even aware exist—our tech pros got to work. We solved the need by creating Managed Portfolios, a complex web app with a simple UX that compiles raw financial data and all client inputs into an accessible experience, empowering advisors to make timely financial decisions their clients depend upon.

In partnership with

- Morningstar

There are many things that set Monks apart from their competitors. For Morningstar, it's their culture and understanding of our business. They always rise to the challenge, they’re always committed.

James Rhodes

Chief Technology Officer, Morningstar

Modernizing retirement plan management.

Faced with Morningstar’s need to upgrade their retirement plan management experience, we structured and staffed a retained product team to develop and launch Morningstar Plan Advantage (MPA). The platform enables employees to compare, manage and maintain their retirement plans. MPA remains one of Morningstar’s most ambitious retail products ever, in use by major financial services players like UBS and Raymond James.

We’re always ready for what’s next.

Our deep experience with Morningstar and applied knowledge of digital technology make us a key partner in helping the brand achieve its ever-evolving goals. Through long-term collaboration and proximity to the brand through the Center of Excellence, we continue to remain deeply involved throughout the lifecycles of Morningstar’s products, spinning up new or complementary squads designed to meet each project’s goals and challenges.

Want to talk tech? Get in touch.

Can’t get enough? Here is some related work for you!

The Labs.Monks Count Down to Most Anticipated Trends of 2023

Firmly settled into the new year, we’re already looking ahead at tech trends that lie on the horizon. And who better is there to predict what they might look like than the Labs.Monks, our innovation team? As an assessment of their trend forecast from one year ago (spoiler alert: they got more than a few right) and a glimpse into the near future of digital creation and consumption, the Labs.Monks have come together again to share their top trends for the new year. Let’s count them down!

10. Digital humans get more realistic.

Digital humans may have earned a spot on our list of trends last year, but we haven’t grown tired of traversing the uncanny valley to play with the technology. In fact, the recent explosion of conversational AI will likely inject new life into digital humans and transform the realms of customer service, entertainment and more. Whether used to hand-craft original characters or refine scanned-in digital twins, digital human creation tools are becoming increasingly complex to deliver lifelike avatars.

“We’ll see more competition between Unreal’s MetaHuman Creator and Unity’s Ziva,” says Geert Eichhorn, Innovation Director. In fact, Media.Monks has used Unreal’s tool to create a digital double of our APAC Chief Executive Officer, Michel de Rijk. Because why not?

9. Motion capture becomes more accessible.

Last year, we released a Labs Report dedicated to motion capture and how its increasing accessibility influenced content production for both professional film teams and everyday consumers. New technologies available at consumer price points are helping to bring motion capture into even more people’s hands. Meta’s Quest Pro headset, which released late last year, features impressive facial tracking that will be key to expressing the nuances of human emotion in VR. Move.ai, currently in beta, enables 1:1 motion tracking with a group of mobile devices—no bodysuits, no markers, no extra hardware needed. Using computer vision, the platform allows anyone to make motion capture video in any environment.

8. Mixed reality and mirror worlds mature.

With smaller and more comfortable AR headsets shown off already at CES, we can expect augmented and mixed reality to become more immersive, accessible and practical over the course of 2023 (check out more of what we saw at CES here). The VIVE Flow, for example, includes diopters so that users can replicate their prescription lenses in the device, amounting to a more comfortable experience overall.

But it’s not just about hardware. “One of the major advancements is not in the headsets, but in the software,” says Eichhorn, noting that VPS (visual positioning system) technology has the power to pinpoint a user’s exact position and vantage point in the real world. “They do this positioning by comparing your camera view to a virtual, 3D version of the world, like Street View.” We covered mirror worlds in last year’s trend list, but the development of VPS is now bringing this vision closer to everyday consumers.

While VPS currently works only outdoors, we’ve already seen the power of the technology with Gorillaz performances in Times Square and Piccadilly Circus in December 2022.

This innovation ultimately unlocks the public space for bespoke digital experiences, where brands can move out of billboards and storefronts and move into the space in between.

7. More enterprises embrace the hybrid model.

For many businesses the return to the office hasn’t been a smooth transition; while some roles require close collaboration within a shared space, others enjoy more flexible setups that support childcare, offer privacy for focus work or greater accessibility. Given the benefits of flexible work setups and the development of technologies that build presence in virtual environments, Luis Guajardo Díaz, Creative Technologist, believes more enterprises will embrace the hybrid work model.

Media.Monks’ live broadcast team, for example, built a sophisticated network of cloud-based virtual machines hosted on AWS to enable people distributed around the world to produce live broadcasts and events. Born out of necessity during the pandemic, the workflow goes beyond bringing teams together; it’s designed to overcome some of the challenges traditional broadcast teams face on the ground, like outages or hardware malfunctions. It goes to show how hybrid models can help enhance the ways we work today.

6. Virtual production continues to impress.

Virtual production powered by real-time game engines has become popular in recent years: the beautiful environments of The Mandalorian or the grungy urban landscape of The Matrix showed what was possible by integrating game engines in the production process, while pandemic lockdowns made the technology a necessity for teams who couldn’t shoot on location.

Now, further advancements in game engines and graphics processing offer a look inside the future of virtual production. Sander van der Vegte, VP Emerging Tech and R&D, points to Unreal’s Nanite, which allows for the optimization of raw 3D content in real time.

From concept to testing, the chronological steps of developing such projects will follow a different and more iterative approach, which opens up creative possibilities that were impossible before.

Localization of content is one example. “In 2023 we’re going to see this versatility in the localization of shoots, where one virtual production shoot can have different settings for different regions, all adapted post-shoot,” says Eichhorn.

5. TV streaming and broadcasts become more interactive.

With virtual production becoming even more powerful, TV and broadcasting will also evolve to become more interactive and immersive. “Translating live, filmed people into real-time models allows for many new creative possibilities,” says van der Vegte. “Imagine unlocking the power to be the cameraman for anything you are watching on TV.”

It might sound like science fiction, but Sander’s vision isn’t far off. At this year’s CES, Sony demoed a platform that uses Hawk-Eye data to generate simulated sports replays. Users can freely control the virtual camera to view the action from any angle—and while not live, the demo illustrates the power of more immersive broadcasts. The technology could be a game changer for sports and televised events that let audiences feel like they’re part of the action.

4. Metaverse moves become more strategic.

“2021 was a peak hype year for the metaverse and Web3. 2022 was the year of major disillusionment,” says Javier Sancho, Project Manager. “There are plenty of reasons to believe that this was just an overinflated hype, but it’s a recurring pattern in tech history.” Indeed, a “trough of disillusionment” inevitably follows a peak in the hype cycle.

This year will challenge brands to think of where they fit within the metaverse—and how they can leverage the immersive technology to drive bottom-line value. Angelica Ortiz, Senior Creative Technologist, says the key to unlocking value in metaverse spaces is to think beyond one-time activations and instead fuel long-term customer journeys.

NFTs and crypto have had challenges in the past year from a consumer and legal perspective. Now that the shine is starting to fade, that paves a new road for brands to go beyond PR and think critically about when and how to best evolve and create more connected experiences.

A great example of how brands are using Web3 in impactful ways is by transforming customer loyalty programs, like offering unique membership perks and gamified experiences. These programs reinforce how the Web3 ethos is evolving brand-customer relationships by turning consumers into active participants and collaborators.

3. Large language models keep the conversation flowing.

With so much interest in bots like ChatGPT, the Labs.Monks expect large language models (LLMs) will continue to impress as the year goes on. “Large Language Models (LLMs) are artificial intelligence tools that can read, summarize and translate texts, and generate sentences similar to how humans talk and write,” says Eichhorn. These models can hold humanlike conversations, answering complex questions and even writing programs. But these skills open a can of worms, especially in education when students can outsource their homework to a bot.

LLMs like GPT are only going to become more powerful, with GPT-4 soon to launch. But despite their impressive ability to understand and mimic human speech, inaccuracies in response still need to be worked out. “The results are not entirely trustworthy, so there’s plenty of challenges ahead,” says Eichhorn. “We expect many discussions over AI sentience this year, as the Turing Test is a measurement we’re going to leave behind.” In fact, Google’s LaMDA already triggered debates about sentience last year—so expect more to come.

2. Generative AI paints the future of AI-assisted creativity.

If 2021 was the year of the metaverse, the breakout star of 2022 is generative AI in all its forms: creating copy, music, voiceovers and especially artwork. “Generative AI wasn’t on our list in 2022, although looking back it should have been,” says Eichhorn. “The writing was on the wall, and internally we’ve been working on machine learning and generating assets for years.”

But while the technology has been embraced by some creatives and technologists, there’s also been some worry and pushback. “These new technologies are so disruptive that we see not only copywriters and illustrators feel threatened, but also major tech companies need to catch up to not become obsolete.”

In response to these concerns, Ortiz anticipates a friendly middle ground where AI will be used to augment, not erase, human creativity. “With the increasing pushback from artists, the industry will find strategic ways to optimize processes, not cut jobs, to improve workflows and let artists do more of what they love and less of what they don’t,” she says. Prior to the generative AI boom, Adobe integrated machine learning and artificial intelligence across its software with Adobe Sensei. More recently, they announced plans to sell AI-generated images on their stock photography platform.

Ancestor Saga is a cyberpunk fantasy adventure created using state-of-the-art generative AI and rotoscoping AI technology.

We’re suddenly seeing a very tangible understanding of the power of AI. 2023 will be the Cambrian explosion of AI, and this is going to be accompanied with serious ethical concerns that were previously only theorized about in academia and science fiction.

1. The definition of “artist” or “creator” changes forever.

Perhaps the most significant trend we anticipate this year isn’t a tech trend; rather, it’s the effect that technology like generative AI and LLMs will have on artists, knowledge workers and society.

With an abundance of AI-generated content, traditional works of art—illustrations, photographs and more—may lose some of their value. “But on the flip side, these tools let everyone become an artist, including those who were never able to create this kind of work before,” says Eichhorn. This can mean those who lack the training, sure, but it also means those with disabilities who have found particular creative fields to be inaccessible.

When everyone can be an artist, what does being an artist even mean? The new definition will lie in the skills that generative AI forces us to adopt. Working with generative AI doesn’t necessarily eliminate creative decision-making; rather, it changes what the creative process entails. New creative skills, like understanding how to prompt a generative AI for specific results, may reshape the role of the artist into something more akin to a director.

Eichhorn compares these questions to the rise of digital cameras and Photoshop, both of which changed photography forever while making it more accessible. “The whole process will take many more years to settle in society, but we’ll likely see many discussions this year on what ‘craft’ really entails,” says Eichhorn.

That’s all for now, but we can expect a few surprises to emerge as the year goes on. Look out for more updates from the Labs.Monks, who regularly release reports, prototypes and podcast episodes that touch on the latest in digital tech, including some of the topics discussed above. Here’s to another year of innovation!

Scrap the Manual: Generative AI

Generative AI has taken the creative industry by storm, flooding our social feeds with beautiful creations powered by the technology. But is it here to stay? And what should creators keep in mind?

In this episode of Scrap the Manual, host Angelica Ortiz is joined by fellow Creative Technologist Samuel Snider-Held, who specializes in machine learning and Generative AI. Together, Sam and Angelica answer questions from our audience, breaking down the buzzword into tangible considerations and takeaways and explaining why embracing Generative AI could be a good thing for creators and brands.

Read the discussion below or listen to the episode on your preferred podcast platform.

Angelica: Hey everyone. Welcome to Scrap the Manual, a podcast where we prompt "aha" moments through discussions of technology, creativity, experimentation and how all those work together to address cultural and business challenges. My name's Angelica, and I'm joined today by a very special guest host, Sam Snider-Held.

Sam: Hey, great to be here. My name's Sam. We're both Senior Creative Techs with Media.Monks. I work out of New York City, specifically on machine learning and Generative AI, while Angelica's working from the Netherlands office with the Labs.Monks team.

Angelica: For this episode, we're going to be switching things up a bit and introducing a new segment where we bring in a specialist and go over some common misconceptions about a certain technology.

And, oh boy, are we starting off with a big one: Generative AI. You know, the tech that's inspired the long scrolls of Midjourney, Stable Diffusion and DALL-E images, and that people just can't seem to get enough of these past few months. We recently covered this topic on our Labs Report, so if you haven't already checked that out, definitely go do that. You don't need it to follow this episode, of course, but it'll definitely help by covering the high-level overview of things. We also did a prototype that goes more in depth on how we at Media.Monks are looking into this technology and how it fits into our workflows.

For the misconceptions we’re busting or confirming today, we gathered questions from across the globe, from art directors to technical directors, to get a variety of perspectives on what people are thinking about this topic. So let's go ahead and start with the basics: What in the world is Generative AI?

Sam: Yeah, so at a high level, you can think about generative models as AI algorithms that can generate new content based on the patterns inherent in their training data set. That might be a bit complex, so another way to explain it: since the dawn of the deep learning revolution back in 2012, computers have been getting increasingly better at understanding the contents of an image. So for instance, you can now show a picture of a cat to a computer and it will be like, "Oh yeah, that's a cat." But if you show it a picture of a dog, it'll say, "No, that's not a cat. That's a dog."

So you can think of this as discriminative machine learning. It is discriminating whether that is a picture of a dog or a cat, which group of things this picture belongs to. With Generative AI, it's trying to do something a little bit different: It's trying to understand what “catness” is. What are the defining features that make an image a picture of a cat?

And once you can do that, once you have a function that can describe “catness”, you can sample from that function and turn it into all sorts of new cats. Cats that the algorithm has actually never seen before, but it has this idea of “catness” that you can use to create new images.

Angelica: I've heard AI generally described as a child, where you pretty much have to teach it everything. It starts from a blank slate, but over the years, it's no longer a blank slate. It's been learning from all the different training sets we've been giving it, from various researchers and various teams over time. So it's not blank anymore, but it's interesting to think about what we as humans take for granted, like, "Oh, that's definitely a cat." Or what's a cat versus a lion? Or a cat versus a tiger? Those are things we just know, but we have to actually teach AI these things.

Sam: Yeah. They're getting to a point where they're moving past that. They all started with this idea of being these expert systems. These things that could only generate pictures of cats...could only generate pictures of dogs.

But now we're in this new sort of generative pre-training paradigm, where you have models trained by massive corporations that have the money to create them, and they often open-source them. Those models are actually very generalized; they can very quickly turn their knowledge to something else.

So if it was trained on generating one thing, you do what we call “fine tuning”, where you train it on another data set so it very quickly learns how to generate specifically Bengal cats or tigers or stuff like that. And that moves us closer and closer to what we want from artificial intelligence algorithms.

We want them to be generalized. We don't want to have to train a new model for every different task. So we are moving in that direction. And of course they learn from the internet. So anything that's on the internet is probably going to be in those models.

Angelica: Yeah. Speaking of fine tuning, that reminds me of when we were doing some R&D for a project and looking into how to fine tune Stable Diffusion on a specific product. They wanted to be able to generate distinctive backgrounds, but have the product always stay consistent first and foremost. And that's tricky, right? Generative AI tends to do its own thing, either because it doesn't know better or because you weren't specific enough in the prompts to keep the product consistent. But now, because of fine tuning, I feel like it's becoming more viable, because it doesn't feel like an uncontrollable platform anymore. It's something we could actually leverage for an application that needs to be more consistent than it could have been otherwise.

So the next question we got is: with all of the focus on Midjourney prompts being posted on LinkedIn and Twitter, is Generative AI simply a pretty face? Is it only for generating cool images?

Sam: I would definitely say no. It's not just images. It's audio. It's text. Whatever type of data set you put into it, you should be able to create a generative model of that data set. The amount of innovation in the space is staggering.

Angelica: What I think is really interesting about this field is not only how quickly it's advanced in such a short period of time, but also how wide and varied the implementations have been.

Sam: Mm-hmm.

Angelica: So we talked about generating images, text, audio and video, but I've also seen Stable Diffusion being used to generate different types of VR spaces, for example. And it's not just Stable Diffusion: different types of Generative AI models are powering processes to create 3D models and all these other things that go beyond images. There's just been so much advancement within a short period of time.

Sam: Yeah, a lot of this stuff you can think about like LEGO blocks. A lot of the models we're talking about come after this generative pre-training paradigm shift, where you take amazingly powerful models trained by big companies and pair them together to do different sorts of things. One of the big ones powering this is CLIP, which came from OpenAI. It's the model that allows you to map text and images into the same vector space, so that if you put in an image and a text describing it, it will understand that they represent the same thing from a very mathematical standpoint. The large language models that came before it were some of the first things where people were like, "Oh my gosh, it can really generate text and it looks like a human wrote it. It's coherent and it circles back on itself. It knows what it wrote five paragraphs back." And so people started to think, "What if we could do this with images?" And then maybe, instead of mapping text and images to the same space, it's text to song, or text to 3D models?

And that's how all of this started. You have people going down the evolutionary tree of AI and then all of a sudden, somebody comes out with something new and people abandon that tree and move on to another branch. And this is what's so interesting about it: Whatever it is you do, there's some cool way to incorporate Generative AI into your workflow.

Angelica: Yeah, that reminds me of another question we got that's a little further down the list, but I think it relates really well to what you just mentioned: Is Generative AI gonna take our jobs? I remember a conversation a few years ago, and it still happens today, where people were saying the creative industry is safe from AI. The reasoning was that humans draw creativity from a variety of different sources, we all have different ways of getting our creative ideas, and there's a kind of problem solving that's just inherently human. But with all of these really cool prompts, it's creating things that go beyond what we would've thought of. What are your thoughts on that?

Sam: Um, so this is a difficult question. It's really hard to predict the future of this stuff. Will it? I don't know.

I like to think about this in terms of “singularity light technology.” So what I mean by singularity light technology is a technology that can zero out entire industries. The one we're thinking about right now is stock photography and stock video. You know, it's hard to tell those companies that they're not facing an existential risk when anybody can download an algorithm that can basically generate the same quality of images without a subscription.

And so if you are working for one of those companies, you might be out of a job because that company's gonna go bankrupt. Now, is that going to happen? I don't know. Either way, try to understand how to incorporate it into your workflow. I think Shutterstock is incorporating this technology into their pipeline, too.

I think within the creative industry, we should really stop thinking that there's something that a human can do that an AI can't do. I think that's just not gonna be a relevant idea in the near future.

Angelica: Yeah. My perspective is that it won't necessarily take our jobs, but it's going to evolve how we approach them. A classic example is film editors, who used to physically cut reels of film. Then, when Premiere and After Effects came out, that process became digitized.

Sam: Yeah.

Angelica: And then further and further and further, right? So there's still video editors, it's just how they approach their job is a little bit different.

And same thing here. There'll still be art directors, but how they approach the work will be different. Maybe it'll be a lot more efficient because they don't necessarily have to scour the internet for inspiration; Generative AI could be a part of that inspiration finding. It'll be part of generating mockups, so not everything will be human-made. And we don't necessarily have to mourn the loss of it not being a hundred percent human-made. It'll allow art directors, creatives and creators of all different types to supercharge what they can currently do.

Sam: Yeah, that's definitely true. There's always going to be a product that comes out from NVIDIA or Adobe that allows you to use this technology in a very user friendly way.

Last month, a lot of blog posts brought up a good point: if you are an indie games company and you need some illustrations for your work, normally you would hire somebody to do that. But this is an alternative and it's cheaper and it's faster. And you can generate a lot of content in the course of an hour, way more than a hired illustrator could do.

It's probably not as good. But for people at that budget, at that level, they might accept the dip in quality for the accessibility and the ease of use. There are places where it might change how people are doing business and what type of business they're doing.

Another thing is that sometimes we get projects where we don't have enough time or there's not enough money. If we did do them, it would basically take our entire illustration team off the bench to work on that one project. Normally, if a company came to us and we passed on it, they would go to another one. But perhaps now that we are investing more and more in this technology, we can say, "Hey, listen, we can't put real people on it, but we have this team of AI engineers, and we can build this for you." With our prototype, that's what we were really trying to understand: how much of this can we use right now, and how much benefit is it going to give us? The benefit was that it allowed a small team to start doing things that large teams could do, for a fraction of the cost.

I think that's just going to be the nature of this type of acceleration. More and more people are going to be using it to get ahead, and because of that, other companies will do the same. Then it becomes sort of an AI creativity arms race, if you will. But companies that can hire people who go to their artists and ask, "Hey, what things are you having problems with? What things do you not want to do? What things take too much time?", and who can then look at all the research that's coming out and say, "Hey, you know what? I think we can use this brand-new model to make better art faster and cheaper," are protected from any sort of tool that comes out in the future that might make it harder for them to get business. At the very least, it pays to understand how these things work, and not from a black-box perspective.

Angelica: It seems like a safe bet, at least for the short term, is just to understand how the technology works. Like listening to this podcast is actually a great start.

Sam: Yeah.

Angelica: Right?

Sam: If you are an artist and you're curious, you can play around with it by yourself. Google Colab is a great resource, and Stable Diffusion is designed to run on inexpensive consumer GPUs. Or you can start using services like Midjourney to get a better handle on what's happening with it and how fast it's moving.

Angelica: Yeah, exactly. Another question that came through is: if I create something with Generative AI through Prompt Engineering, is that work really mine?

Sam: So this is starting to get into a little bit more of a philosophical question. Is it mine in the sense that I own it? Well, if the model's license says so, then yes. Stable Diffusion, I believe, comes with a fairly permissive license, the CreativeML OpenRAIL-M. If you generate an image with it, then it is technically yours, provided somebody doesn't come along and say, "The people who made Stable Diffusion didn't have the rights to offer you that license."

But until that happens, then yes, it is yours from an ownership point of view. Are you the creator? Are you the creative person generating that? That's a bit of a different question, and it becomes a little murkier. How different is that from a creative director and an illustrator going back and forth, saying:

"I want this."

"No, I don't want that."

"No, you need to fix this."

"Oh, I liked what you did there."

"That's really great. I didn't think about that."

Who's the owner in that situation? Ideally, it's the company that hires both of them. This is something that's gonna have to play out in the courts, if it gets there. I know a lot of people already have opinions on who is going to win all the legal challenges, and those are just starting to happen right now.

Angelica: Yeah, from what I've seen in a lot of discussions, it's a co-creation platform of sorts, where you have to know what to say in order to get the right outcome. So if you say, “I want an underwater scene that has mermaids floating and gold neon coral,” it'll generate certain types of visuals based on that, but they may not be the visuals you want.

Then that's where it gets nitpicky with styles and references. That's where artists' names come into play, like a Dalí or Picasso version of an underwater scene. We've even seen prompts that use Unreal...

Sam: Mm-hmm

Angelica: ...as a way to describe artistic styles. Generative AI could create things from a basic prompt. But there's a back and forth, kinda like you were describing with a director and illustrator, in order to know exactly what outcomes to have and using the right words and key terms and fine tuning to get the desired outcome.

Sam: Definitely, and I think this question is very specific to this generation of models. They are designed to work with text to image, and there are a lot of reasons why they are this way. A lot of this research is built on the backs of transformers, which were initially language generation models. If you talk to any sort of artist, the idea that you're creating art by typing is very counterintuitive to what they spent years learning and training to do. Artists create images by drawing or painting or manipulating creative software, which is a much more gestural interface. And as the technology evolves, and as we start building more and more of these technologies with the artist in mind, I think we're gonna see more of these image-based interfaces.

Stable Diffusion already has that: you can draw a sort of MS Paint-style image and then say, "Alright, now I want this to be an image of a landscape, but in the style of a specific artist." So then it's not just writing text and waiting for the output to come in; I'm drawing into it too, so we're working more collaboratively. But I think in the future, you might also find algorithms that are much more in tune with specific artists, with how the person making the art likes to work. I think this problem's gonna be less of a question in the future. At some point, all of these things will be in your Photoshop or your creative software, and at that point, we won't even think about it as AI anymore. It'll just be a tool in Photoshop that we use. Photoshop already has Neural Filters and features like Content-Aware Fill, and no one really thinks about these questions when they're using them. It's just the moment we're in right now that's posing a lot of questions.

Angelica: Yeah. The most interesting executions of technology have been when it fades into the background. Or to your point, we don't necessarily say, "Oh, that's AI", or "Yep, that's AR". That's a classic one too. We just know it from the utility it provides us. And like Google Translate, for example, that could be associated with AR if you use the camera and it actually overlays the text in front. But the majority of people aren't thinking, oh, this is Google Translate using AR. We don't think about it like that. We're just like, "Oh, okay, cool. This is helping me out here."

Sam: Yeah, just think about all the students applying to art school this year and starting their undergrad art degrees. By next year, it's gonna be even easier to use all this technology, and I think their understanding of it is gonna be very different from ours, as people who never had this technology when we were in undergrad. It's changing very quickly, and it's changing how people work very rapidly too.

Angelica: Right. Another question came in relating to copyright, which you touched on a little bit. That's already an evolving conversation, whether in the courts, out of court, or in the terms and conditions of Midjourney, DALL-E and Stable Diffusion.

Sam: When you download the model from Hugging Face, you have to agree to certain Terms and Conditions. I think it's basically a legal stopgap for them.

Angelica: Yep.

Sam: If I use these, am I going to get sued? You want to talk to a copyright lawyer or attorney, but I don't think they know the answer just yet either. What I will say is that many of the companies that create these algorithms (your OpenAIs, your Googles, your NVIDIAs) also have large lobbying teams, and they're going to try to push the law in a way that doesn't get them sued. You might see that in the near future: these companies can throw so much money at the legal issue that, by virtue of protecting themselves, they protect all the people who use their software. The way I like to talk about it, and maybe I'm dating myself, is to think all the way back to the early 2000s with Napster and file sharing. It didn't work out so well for the artists. That technology completely changed their industry and how they make money. Artists don't make much money off of selling records anymore because anyone can get them for free. They now make money primarily through merchandise and touring. Perhaps something like that is going to happen here.

Angelica: Yeah. When you brought up Napster, that reminded me of a sidetrack story. I got Napster when it was legitimate, but every time I said, "Oh yeah, I have this song on Napster," people were like, "Mmmm?" They'd give me a side eye because of where Napster came from and the illegal downloading. I'd be like, "No, it's legit. I swear I just got a gift card."

Sam: [laughter] Well, yeah, many of us now listen to all of our music on Spotify. That evolved in a way where they are paying artists in a specific way that sometimes is very predatory and something like that could happen to artists in these models. It doesn't look like history provides good examples where the artists win or come out on top. So again, something to think about if you are one of these artists. How do I prepare for this? How do I deal with it? At the end of the day, people are still gonna want your top fantasy illustrator to work on their project, but maybe people that aren't as famous, maybe those people are going to suffer a bit more.

Angelica: Right. There's also been a discussion on whether artists can be exempted from being part of prompts. For example, there was a really long Twitter thread (we'll link it in the show notes) discussing how a lot of art was being generated using one artist's name in the prompt, and it looked very similar to what she would create. Should she get a commission because her name and style were used to generate that? Those are the questions there. Or if artists are able to get exempted, does that also limit the type of creative output Generative AI is able to create? Because then it's not an open forum anymore where you can use any artist. Maybe we'd see a lot of Picasso uses because that one hasn't been exempted, or indie artists wouldn't be represented because they don't want to be.

Sam: I don't think these exemptions that companies create are really going to work. One of my favorite things about artificial intelligence is that it's one of the most advanced technologies that's ever existed, and it's also one of the most open. Exemptions might work on their platforms because they can control them, but it's an extremely open technology. All these companies are putting out some of their most stellar code and trained models. There's DreamBooth now, where you can basically take Stable Diffusion and fine tune it on a specific artist using a hundred images or fewer.

Even if a company does create these exemptions, so that you can't create images on Midjourney or DALL-E 2 in the style of Yoshitaka Amano or someone like that, it wouldn't be so hard for somebody to just download the free trained models, fine tune them on Yoshitaka Amano images, and then create art like that. The barrier to entry isn't high enough for exemptions to be a real solution.

Angelica: Yeah, the mainstream platforms could honor exemptions, but someone who trains their own model could still do it.

Sam: It's starting to become kind of a wild west, and I can understand why certain artists are angry and nervous. It's just...it's something that's happening, and if you wanna stop it, how do we stop it? It has to come from a very concerted legal effort: a bunch of people getting together and saying, "We need to do this now and this is how we want it to work." But can they do that faster than corporations can lobby to say, "No, we can do this"? It's very hard for small groups of artists to combat corporations that basically run all of our technologies.

It's an interesting thing. I don't know what the answer is. We should probably talk to a lawyer about it.

Angelica: Yeah. There are other technologies with a similar conundrum as well. It's hard to control these things with emerging tech, especially when it is so open and anyone's able to contribute in either a good or a bad way.

Sam: Yeah, a hundred percent.

Angelica: That actually leads to our last question. It's not really a question, more of a statement: Generative AI seems like it's growing so fast that it will get outta control soon. From my perspective, it's already starting to, just because of the rapid iteration happening within this short period of time.

Sam: Even for us, we'll spend time engineering these tools and creating projects that use them, and halfway through there are all these new technologies that might be better to use. Yeah, it does give you a little bit of anxiety, like, "Am I using the right one? What's it going to take to switch technologies right now?" Do you wait for the technology to advance and become cheaper?

Think about a company like Midjourney, spending all this investment money on creating its platform on the theory that only it can make this and that it's very hard for other companies to recreate the business. But then six months later, Stable Diffusion comes out. It's open source; anyone can download it. And then two months later, somebody open-sources a full-on scalable web platform. It evolves so fast, so how do you make business decisions about it? It's changing month to month at this point, whereas before it was changing every year or so. It does seem like it's starting to, again, become that singularity light type of technology. Who’s to say it's going to continue like that? It's just so hard to predict the future with this stuff. It's more about: what can I do right now, and is it going to save me money or time? If not, don't do it. If yes, then do it.

Angelica: Yeah. The technologies that get the most excitement are the ones that mobilize different types of people, which then makes the technology advance a lot faster. Toward the beginning of the summer, we were hearing, "Oh, DALL-E 2, yay! Awesome." And then it seemed to move exponentially fast from there based on all that momentum. There was probably a lot happening behind the scenes that made it feel exponential. Would you say it was because a lot of interest brought a lot of people into the same topic at one point? Or do you feel like it was always going to come to this point?

Sam: Yeah, I think so. Whenever you start to see technology that is really starting to deliver on its promise, a lot of people become interested in it. The big thing about Stable Diffusion was that it used a different type of model to compress the images into a much smaller representation, which made it faster to train and small enough to run on a single GPU. That's how a lot of this stuff goes. There's generally one big company that has the "We figured out how to do this" moment. And then all these other companies and groups and researchers say, "Alright, now we know how to do this. How do we do it cheaper, faster, with less data, and more powerful?" And any time something like that comes out, people start spending a lot of time and money on it.

DALL-E was the thing that, as I like to say, really demonstrated creative arithmetic. Say you ask it to draw a Pikachu sitting on a goat. Not only does it know what Pikachu and a goat look like, but it understands that for us to believe Pikachu is sitting on the goat, it has to be placed in a very specific way, with Pikachu's legs on either side of the goat.

The idea that a machine can do that, something so similar to the way humans think, got a lot of people extremely excited. At the time, I think it was something like 256 by 256 pixels. But now we are doing 2048 by 24... whatever size you want. And that's only two years later. So yeah, a lot of excitement, obviously.

I think it is one of those technologies that really gets people excited because it is starting to deliver on the promise of AI. Just like with self-driving cars or AI doing protein folding, you're starting to see more and more examples of what it could be and how exciting and beneficial it can be.

Angelica: Awesome! Well, we've covered quite a bit, lots of great info here. Thanks again, Sam, for coming on the show.

Sam: Yeah, thanks for having me.

Angelica: Thanks everyone for listening to the Scrap The Manual podcast!

If you like what you hear, please subscribe and share! You can find us on Spotify, Apple Podcasts and wherever you get your podcasts. If you want to suggest topics, segment ideas, or general feedback, feel free to email us at scrapthemanual@mediamonks.com. If you want to partner with Labs.Monks, feel free to reach out to us at that same email address. Until next time!

Sam: Bye!

Ellinikon Experience Centre • An Experiential Look Into the Future of Urban Living

Case Study

Translating a vision of the future into tangible reality.

Ellinikon is Europe’s largest urban regeneration project, and serves as an incredible look into the future of urban spaces. LAMDA Development, the leading real estate developer in Greece, hired Monks to build an experience center from the ground up that brings its 20-year vision for Ellinikon to life today. We transformed an old airport hangar into an interactive journey that invites visitors to immerse themselves within scenes from the area’s past, present and future—a synergistic experience that blends physical and digital design.


Step into lush, digitally enhanced landmarks and spaces.

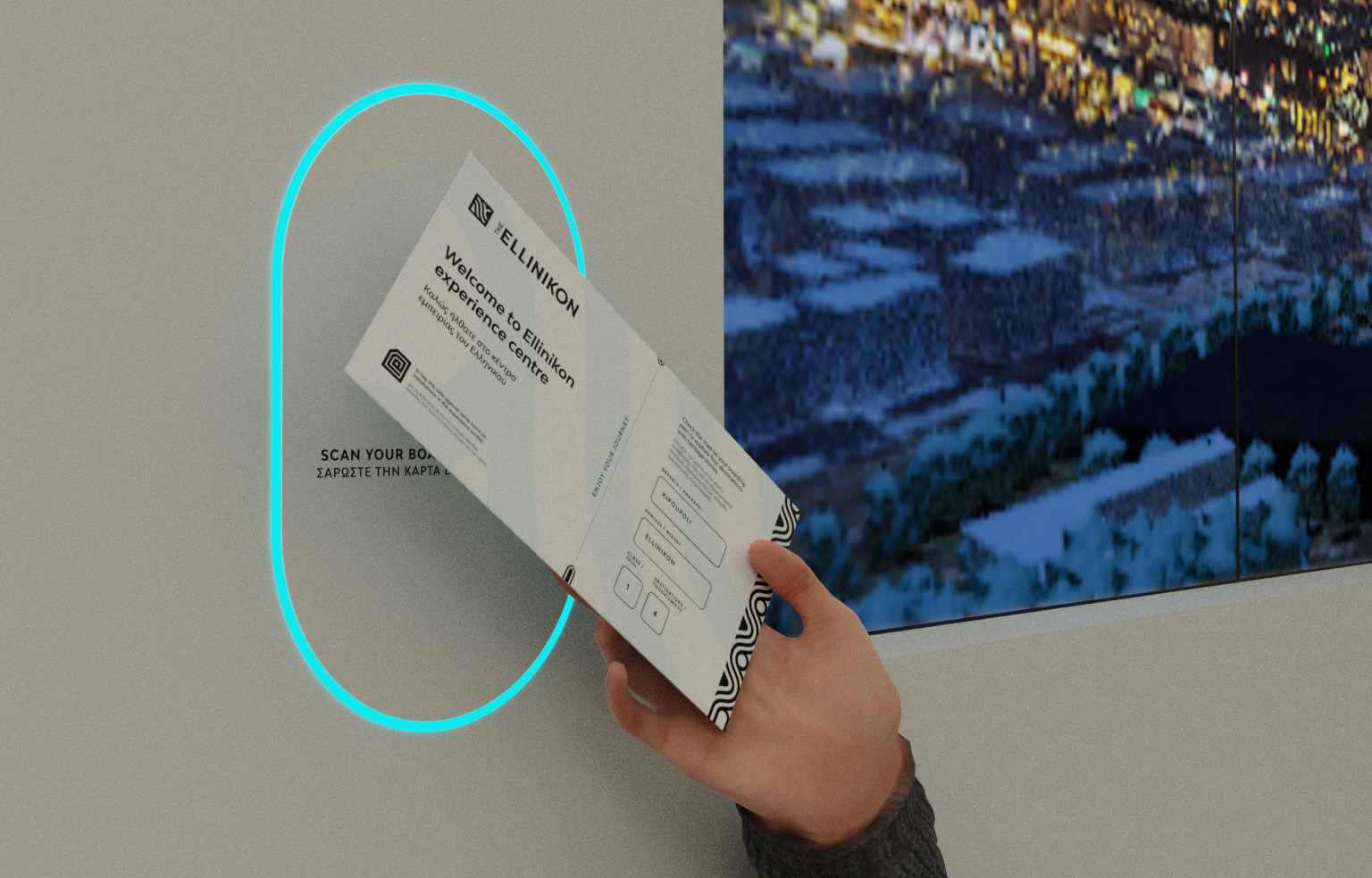

Upon checking into the Experience Center, visitors are given a personalized boarding pass—a reference to the space’s heritage of air travel. Augmented by RFID, the pass connects visitors to personalized experiences as they make their journey throughout the center. The first stop is an interactive maquette, or scale model, that showcases highlights of the development and provides a holistic, data-driven view of the Ellinikon master plan.

The beating heart of the development is the Ellinikon Park, which we paid tribute to through a multisensorial exhibit that invites visitors to become acquainted with the park's landmarks and its native flora. An interactive map offers insight into local amenities and essential info, while a digitally enhanced botanical library display tells the stories of plants that are integral to the park and Greece at large. Those looking for a more active and scenic view of the park can take a virtual bike ride through the grounds and even enjoy a bird's-eye view of its impressive sights. Meanwhile, those in a seafaring mood can explore the future vision of the Riviera on an XR-enhanced virtual yacht ride.

Picture your life in a reimagined urban landscape.

Ellinikon will put Greece on the map as an example of human ingenuity and a new way of living: the smartest and greenest district in Europe. To help visitors reimagine a better urban future, we designed a series of displays that explore Ellinikon's various innovations, all housed in a mesmerizing corridor mimicking the night sky. After getting a taste of Ellinikon's vision, visitors are encouraged to share their own ambitions by wishing on a star and contributing to the shimmering galaxy above.

To help visitors truly envision life within Ellinikon, we staged a luxurious residential living space with story-driven, interactive experiences seamlessly embedded throughout. The RFID-enabled pass allowed us to personalize every interaction, making it even easier for people to imagine their home within the future of urban living. Finally, a panoramic display offers a glimpse into the Ellinikon vision, with sweeping views captured at different times of day and from different floor levels.

Our Craft

Experiences that inform and inspire.

Shape the future through interactive experiences.

While flowing from one experience or display to the next, visitors can stop to rest in the Free City Space, which connects navigation patterns and play areas into one cohesive wayfinding system. Over the course of their journey, visitors engage with 22 bespoke experiences and 120 minutes of content. From creative direction to production to content development, we blended over a dozen capabilities to bring the Ellinikon master plan to life for visitors from around the world, translating an ambitious plan for urban living into tangible reality.