Angelica: Hey everyone! Welcome to Scrap The Manual, a podcast where we prompt “aha” moments through discussions of technology, creativity, experimentation, and how all those work together to address cultural and business challenges. My name is Angelica and we have a very special guest host. Yay!

Leah: Hi! It's great to be here, my name is Leah. We are both Creative Technologists with Media.Monks. I specifically work out of Media.Monks’ Singapore office.

Angelica: Today we're going to be giving a quick TLDR of one of our lab reports and deep dive into something that we didn't get to cover in depth in the reports, such as expanding on our prototype we created, a topic that has some interesting rabbit holes that didn't fit neatly onto a slide, you know, that kind of thing.

Leah: So for this episode, we are going to be covering technology and innovation culture in the Asia-Pacific region. If you haven't had a chance to read our APAC Lab Report, here's a quick TLDR.

The most influential technologies from the region are AI automation, AR and computer vision, and the metaverse. China and Japan are leading the growth in AI and machine learning together with Singapore and South Korea. If you come to this region, you might be surprised how people are embracing this advanced technology. People accept it because it is just so convenient, thanks to the Super Apps we have.

Angelica: To clarify for people who may not be familiar, what are Super Apps?

Leah: Yeah. So Super Apps are mobile applications that can provide multiple services. And you may have heard of some of the Super Apps such as WeChat in China, Kakao from South Korea, Line from Japan (that's also widely used in Taiwan and Thailand) and Grab from Singapore, which is used in Southeast Asia. On Super Apps, you can use multiple services, from online chatting, shopping, and food delivery to car hailing and digital payments. We literally live our social and cultural life on the Super Apps.

Angelica: Is it sort of like if Uber had one app, but not necessarily branded it's more of just, I'm going to go to WeChat, it'll call a ride, rent a scooter, or order in. You just download one app versus having to download five different ones.

Leah: Yeah, definitely. But actually for WeChat, it's more complicated, I would say, because there is a whole ecosystem on WeChat because WeChat uses mini programs. Just think of it as a microsite on WeChat…

Angelica: Mm-hmm.

Leah: where brands can sell their products and offer these food delivery services. And for other Super Apps like Line and Grab, it's just exactly like you said. One example is that Burberry launched its social retail store in collaboration with Tencent, which integrates its offline store with mini programs on WeChat. It enables some special features in the store, such as earning social currencies by engaging with the brand and even raising your own animal-based avatars. This is pretty cool as it links up our digital and physical experiences.

Angelica: Yeah. What I really liked about this example was how technology was seamlessly integrated throughout. It wasn't like, “Hey scan this one QR code.” It went a little bit further to say, “Okay, if you interact with this mini program, then you'll have access and unlock particular outfits or particular items for the digital avatar. You'll be able to actually unlock cafe items in the real store.” So it seemed like it was all a part of one ecosystem. It didn't feel tacked on. It was truly embedded within the holistic retail experience. I know with a lot of branded activations within the US specifically, there's always that question of, should it be accessible through a mobile website or is it something that we can use a downloaded app for? And most clients tend to go with the mobile website.

Leah: Yeah.

Angelica: Because there's this hesitancy to download yet another application just to do another thing. And then there's the worry about wifi strength on site when asking people to download these apps. But it'd be interesting for brands to create these mini programs within a larger Super App, so that consumers won't necessarily have to do anything other than access that mini program, versus having to download something. Then there's a lot more flexibility in what brands can do, and they're not limited to what's available on a mobile website. They have the strength of what can be possible with an app.

Leah: Yeah, agreed. So another observation from our report is that the metaverse is on the rise in the APAC region. It might outplay the plans laid down in the West. Some platforms that drew our attention are Zepeto from South Korea and TMELAND in China.

Angelica: Yeah, and what's cool about those platforms is we see this emphasis on virtual idols, avatars and influencers. From the research that we did, we noticed that there are certain countries that are a bit more traditional culturally…

Leah: mm-hmm

Angelica: and are strict about how people can be as their real selves. So to have this sort of escape from the cultural bounds of what people can and cannot be, because it's seen as right or wrong or not necessarily accepted, people are going towards anonymity…

Leah: Yeah.

Angelica: for being able to express themselves. Sort of like the Finstagram accounts that happen in the US or expressing themselves through these virtual influencers, because then their virtual selves can be much more free to express themselves than their real versions could be.

Leah: And also Asia has a rich fandom culture. So it's not a surprise that we see the emphasis on virtual idols and virtual influencers because it enables the fans to interact with the superstars anytime, anywhere.

Angelica: Yeah. And from a branding aspect of things as well, virtual influencers and avatars can also be much easier to control. Think of all the controversies that happen because someone did something either way back in their past or recently; that makes brands nervous about endorsing real people, because people are flawed. With virtual influencers, you can control everything. You have teams of people being able to control exactly what they look like, what their personality is, what they do, and that flexibility and customizability…that's a lot more intense than it would be for a real person that has real feelings.

So there are some limitations on what a brand can do with real people, whereas it's a lot more flexible with virtual influencers.

Okay, we've covered quite a lot there. There's a lot of really interesting examples that we see within the APAC region that definitely could be applied within Western countries as well. With this said, we're gonna go ahead and move on to what we did for the Labs Report prototype and expand a little bit more on our process.

Let's start with: what was even the prototype? For the prototype we leveraged Zepeto. Zepeto is a metaverse-like experience world platform…insert all buzzwords here…that allows users to interact like you would in a Roblox world that you go and experience, but it has additional social features to it.

So what we would think of as an Instagram feed or something like that, it has that embedded within the Zepeto platform. So instead of going to Instagram to talk about your Roblox experience, those two experiences are integrated within one platform. What we also wanted to achieve with this prototype is leverage a technology that originated from the APAC region, and specifically Zepeto. Zepeto is available globally for the most part, with a few exceptions, but it originated within South Korea. We really wanted to use Zepeto because it's available globally for most audiences and it takes the current fragmented way of how the metaverse worlds are created and integrates them with virtual influencers and social media.

With these gamified interactable experiences, the social aspects are really what makes this particular platform shine. And we are also doing this because the metaverse even a year or so later is still an incredibly popular topic. People are still having a lot of discourse about what the metaverse is, what it can be, discussing how brands have already interacted with their first steps into the metaverse, how they're going to continue to grow.

And this is part of what we do a lot at Media.Monks. We get a lot of client requests for similar types of experiences, whether that be Roblox, Decentraland, Horizon Worlds, Fortnite…and Zepeto is just a great platform that no one's really talking a lot about within the Western dialogue, but it's incredibly powerful and it reaches so many people. We saw that it was an amazing platform that took the promise of what the metaverse can and will be to the next level.

Leah: Yeah. I also like Zepeto because it not only has Asian-style avatars, but it also enables you to customize your avatar, from your head, body, hair, and outfits to even the poses and dance steps you can have. So with Zepeto you can purchase a lot of outfits and decorations with the Zepeto money, which is a currency that you earn through in-app purchases or by being more active on the platform.

Angelica: Yeah. There are two different types of currencies that Zepeto has. One of them is called Zems…i.e. gems. And then there's another one, which is coins. For creator-made items, you can set a price for how many Zems you want them to go for. Anything that's created by users can only be sold for Zems, which are very difficult to get for free within the app. That's where, you know, the free to play tends to come in. With a Euro you can get 14 Zems, so then you can buy more digital clothing. Then there are coins that you start the experience with, which you can use to purchase Zepeto-created items. And so that's kind of how they have that difference there.

Leah: But my favorite part about Zepeto is the social aspect as you mentioned earlier. For me, it's like TikTok in the metaverse because it has the Feed feature.

You know, there are three pages of the feed: For you, following, and popular. Under the feed you can see live streaming by the virtual influencers and you can have your own live stream as well.

Angelica: For the live stream that's using some motion capture as well, because it's either pre-made models and moves that are created or people can actually have their face being recognized in real time...

Leah: Yeah.

Angelica: to then translate to that virtual avatar.

Leah: Yeah. Zepeto, they have the Zepeto camera. So with this camera, you can create content with your own avatar and the AR filter, which copies your facial expression quite accurately, and even brings your own avatar to real life. So you can place your own avatar on the table in your room.

Angelica: One part that I also thought was really cool…you had mentioned the poses earlier. Think about if we see a celebrity on the street: we're gonna take a photo with them. Right. We can't just let that celebrity pass by without being like, “oh yeah, I totally saw JLo in Miami,” you know? The “take a photo or it didn't happen” type of thing, haha. There's a version of that on Zepeto. Fans can take a photo of their virtual avatar with your virtual avatar. So it takes the virtual autograph, of sorts, to a different level. You can live vicariously through your avatar by having them take a photo with your favorite celebrity or your favorite influencer. So I really love that aspect of being able to build that audience virtually as well.

Something also that's really cool about Zepeto is within those world experiences, the social aspects are still very much ingrained in there. It's not just, “Okay, you have this separate social feed, you have the separate virtual influencer side, and then you have the world.” They're all integrated.

An example of this is the other day we were testing out the Zepeto world and we were all in the same experience together. Someone would take a selfie (and that's right: there is a selfie stick in this experience, and it looks exactly like what you would imagine, just the virtual version of it). And when someone takes a photo or a video, it automatically tags the people that were within that photo.

So it's generating all of this social momentum really, really quickly. And as soon as you take that photo, you can either download it directly to your device or go ahead and immediately upload it. What was great for me personally…figuring out how to have, you know, just the right caption... that's something that takes me way too long, figuring out what are the right words and the right hashtags. But you don't even need to worry about captions when taking photos within these worlds. As soon as you say, “I wanna upload it,” it automatically captions, tags people, and also gives other related hashtags so that other people can see that experience from you.

So it's very seamless and easy.

Leah: Yeah. That's amazing.

Angelica: It's just like the next level of how it makes sharing super, super, super easy, so that's something I really like there too.

Speaking of the worlds: now, within this next part of the prototyping process, it was up to us to determine the worldscape and interactions. And as a part of the concept, we wanted to create a world that plays into what real life influencers would be looking for when trying to fill their feed. And that is: creating content. Specifically: selfies. And so we created four different experiences that would have the ultimate selfie moment.

One, which is this party balloon atmosphere. Sort of think about these like really big balloons that you can kind of poke with the avatar as you move around, or even like jump on some of the balloons to get a higher view from it as well.

The second was like a summer pool party. You could actually swim in the pool. It would change the animation of the avatar when you're in the water part. And, you know, the classic, giant rubber ducky in the pool and all those things. So definitely brought you in the moment.

The third was an ethereal Japanese garden, so very much when wanting to get away and have a chill moment, that was definitely the vibe we were going for there.

And then lastly, we had the miniaturized city. So what you would think is the opposite of meditation is the hustle and bustle of the big city. And we created that experience as well. There is also a reference to the Netherlands. So you'll just have to keep an eye out for what that is and let us know if you find it.

Leah: Is there a hidden fifth environment?

Angelica: There it is. Yeah. You know, what was interesting is when we were testing out the environment and we were all together.

Leah: Yeah.

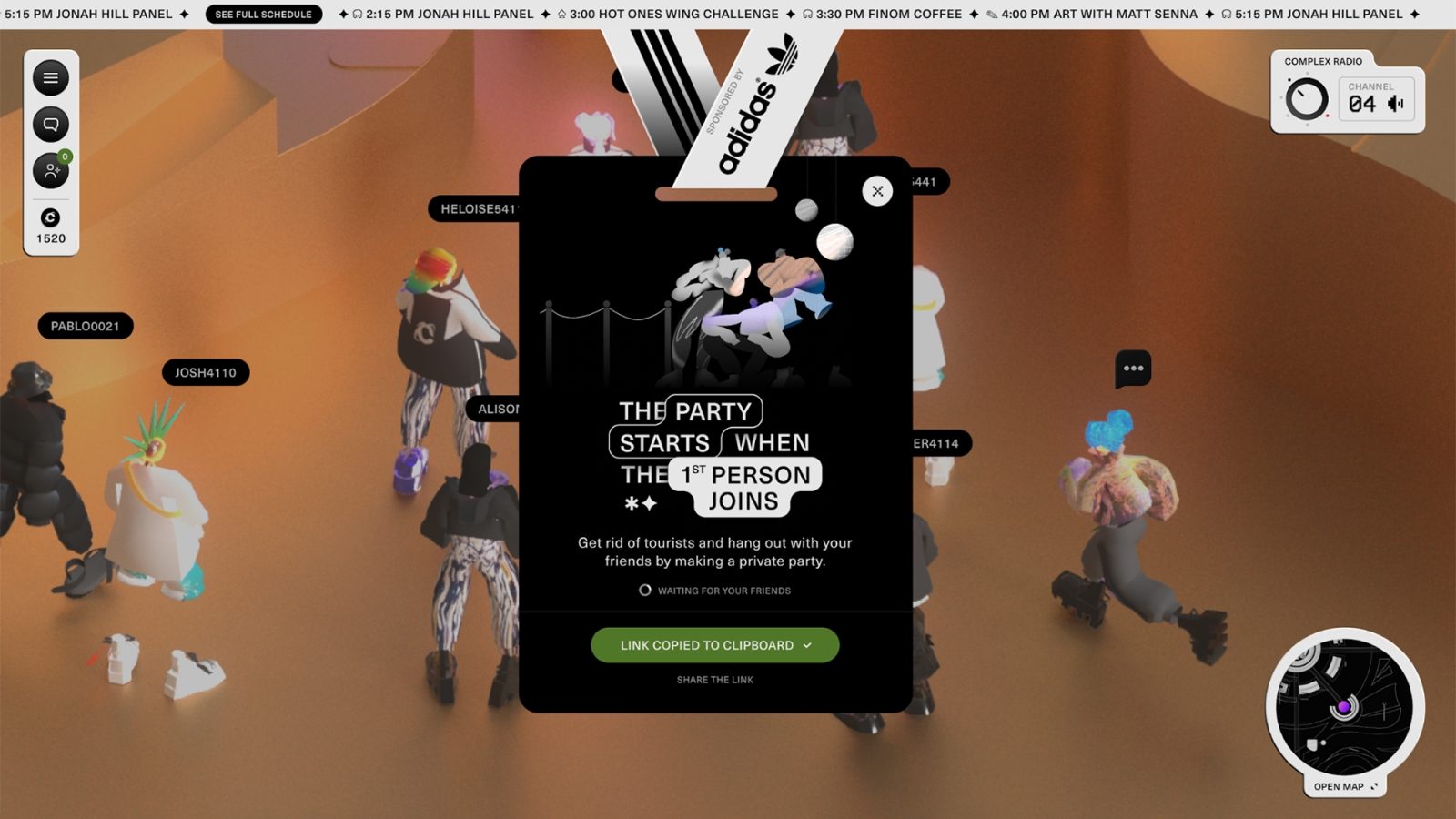

Angelica: We created our own room.

Leah: Yeah.

Angelica: And then we thought it was just gonna be the eight of us that were testing it out and then other people, random people showed up.

Leah: Wow.

Angelica: I was just like, “where did you guys come from?” There were two people that actually used the chat within the room and they beelined directly to where that fifth environment was.

Leah: Yeah.

Angelica: So it was just really interesting that people, one, were specifically coming to the world to experience it together.

Leah: Mm.

Angelica: And then two, we saw a lot of random people. There would be dead spots where it’s just like, “okay it's just one of us in the room.” We're just testing it. But as soon as all of us got in there together and started taking photos, there were so many people that showed up. It's just like “What? This is insane!”

Leah: Was it the recommendation system on Zepeto?

Angelica: Yeah. That's what we're thinking. Because the room that was created…we thought it was not, I guess it wasn't a private room. It was probably a public room.

Leah: Yeah.

Angelica: But it was interesting that as soon as we started playing around and posting content, then people were like, “Okay, I'll join this room.”

Leah: Yeah. Maybe because of tagging as well.

Angelica: Yeah, exactly. And that goes to our earlier point of how really powerful that platform is and how posting would give that direct result of someone posting something and other people wanting to be a part of it. There was one person that liked my post that had like 65,000 followers.

Leah: Whoa.

Angelica: And I'm like, who are you? What is this?

Leah: That's definitely a virtual idol.

Angelica: Yeah, exactly. They only had like six posts though, which was a little weird, but they had so many followers. It was nuts.

Leah: Actually today I just randomly went into a swimming pool party on Zepeto. I went into the world, people were playing with water guns together.

Angelica: Mm-hmm

Leah: So I had just arrived. Landed. Then someone just shot me with a water gun and I was hit. I must lose my block.

Angelica: Oh no! Haha, that sounds fun though.

Leah: Yeah, that was fun.

Angelica: Was it like a big room? Like how many people were in that environment at once?

Leah: When I was there, it was around 80 people in the world.

Angelica: Oh, wow.

Leah: Yeah, it's quite a lot actually.

Angelica: There's definitely something to be said about how there are superfans of Zepeto. Like that's kind of part of the daily aspect of it. Being able to meet people through the social aspects and then hang out with them through these worlds.

But all this to say this entire worldscape and all these interactions that we included within the prototypes were all built within what they call their BuildIt platform.

Leah: It's quite user-friendly. It's very easy to create a world yourself even with zero experience of any 3D modeling software.

Angelica: Yeah. BuildIt is like a 3D version of website builders. You have the drag and drop type of thing. Where instead of a 2D scrolling website experience, now you have that drag and drop functionality with a lot of different assets into a 3D space. We can also create experiences like this through Unity. The only caveat to Unity is that the experience that we would create there would only be available on mobile devices. And we didn't wanna restrict the type of people that would be able to experience this. So we decided to do it on BuildIt because the end result of those worlds would be able to be accessed on both desktop and mobile.

Leah: Other than the world space, you can also create some clothes for your avatar to make it look more unique and give it its own personality. So in our case, we created a more neutral-looking avatar with blue skin. Very cool, slightly edgy but approachable. And the process of creating clothes was very friendly: you just download the template and then add the textures in Photoshop. We chose a t-shirt, jacket, bomber, and windbreaker. And then we touched them up with some Oriental elements such as a dragon and a soft pink color, which matches our Shanghai office. Everyone can create their own unique clothes with simple editing of the textures.

Angelica: Yeah. We really wanted to play within clothing specifically because that's a part of this digital ecosystem of being an influencer. You may have branded experiences that you take part in, or brands sponsor you. Influencers will wear custom clothing that either they design themselves or that represents another brand. All those things we wanted to integrate within this.

So the influencers are visiting this world. They could say, “Hey, I'm in this Media.Monks experience” or “insert brand here” experience. And I'm also wearing their custom clothing. It's sort of a shout out to the clothing as well as the world. So it's at the heart of this larger ecosystem. The world is not exclusive to the clothes…is not exclusive to social. All of those elements are all playing together and this leads to creating social content.

Once we had the world and the merchandise solidified, we continued to build off this virtual influencer style by creating content of our own. What we did is we analyzed popular Zepeto influencers. We even made a list of the types of content they create, such as going to someone else's world, doing an AR feature with their real life self, or doing posed photos with other avatars. All those were a part of the social content that we created as a part of this.

Now that the prototype is ready to go, it's time to think about what the prototype did not yet achieve but that we would really like to see in the future. So one thing that we recommend is: when wanting to create fully custom branded worlds, those should definitely be made within Unity to have the most flexibility. At the time of this recording, though, worlds exported from Unity are only available on mobile devices. So, you know, that's something to keep in mind there.

Leah: For clothing creation, there are some limitations. For example, for the texture, the maximum resolution we can upload is 512 x 512. So it means we can't add detailed patterns or logos onto our clothes. And we can't create physics of our clothing materials. That is another thing that I think the platform can improve.
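To put the 512 x 512 limit in perspective, here is a rough Python sketch of how few pixels a logo ends up with on a texture of that size. The function name `logo_pixels` and the example fractions are illustrative assumptions, not part of Zepeto's tooling; only the 512-pixel maximum comes from the conversation.

```python
# Maximum texture edge length mentioned in the conversation (512 x 512).
MAX_TEXTURE_SIZE = 512


def logo_pixels(fraction_of_width: float, texture_size: int = MAX_TEXTURE_SIZE) -> int:
    """Return roughly how many pixels wide a logo is when it covers
    the given fraction of the texture's width (hypothetical helper)."""
    if not 0 < fraction_of_width <= 1:
        raise ValueError("fraction_of_width must be in the range (0, 1]")
    # Truncate to whole pixels: partial pixels cannot hold extra detail.
    return int(texture_size * fraction_of_width)


if __name__ == "__main__":
    for fraction in (0.1, 0.25, 1.0):
        print(f"{fraction:.0%} of the width -> {logo_pixels(fraction)} px")
```

Under these assumptions, a chest logo covering a tenth of the template's width has only about 51 pixels to work with, which may be why fine patterns and small wordmarks lose their detail.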

Angelica: Yeah. It's not able to show the fuzziness of a sweater or, if we're creating a dress or a shirt that needs to be flowy, it won't show that that shirt or that dress is fuzzy or flowy. It'll just be the pattern that's shown, but the texture of how a piece of clothing might feel based on seeing it is not reflected there. So it's a give and take where it's very easy to create clothing items

Leah: Yeah.

Angelica: …but it doesn't go so far as to have a realistic look.

Leah: Yeah, but I think this is something that’s not just Zepeto. Other metaverse platforms can improve on that too, because I don't see many platforms that have physics for the clothing itself. It would be great if the physics of the clothing could be implemented in the world space as well as in the AR camera. It would add extra immersion and fidelity to the whole experience.

Angelica: Yeah. It would also help with making those small micro interactions really fun. Let's say there's a skydiving experience that's in Zepeto and someone is jumping off of the plane and is doing their skydive.

Leah: Yeah.

Angelica: It'd be cool if the physics of the clothing would react to, like, this virtual wind that is happening, or something like that. Or if it's a really puffy sweater, it kind of blows up because all of the air is getting stuck in it. Those are just the fun things that make people get even more immersed within the environment too.

Moving forward in creating branded experiences, having a closer relationship with Zepeto’s support team and development team will be really helpful for a lot of the things that the BuildIt platform restricts. When collaborating with Zepeto and using the Zepeto plugin for Unity, we can unlock a lot of interactions that make the experience a lot deeper.

The other thing to mention here is it'd be really great to see Zepeto integrate with other social media platforms versus just the Zepeto-specific one. We've talked a lot about how Zepeto is a really powerful platform because it combines social with the virtual experience. And it would just be great if, let's say there's an experience that happens in Zepeto and we're taking a photo or video, and we say we wanna post it. Could that, all in one swoop, be posted to Instagram, Twitter, Facebook and all of those, instead of being stuck within the Zepeto ecosystem?

So all the cool stuff that we're seeing gets left within this platform and isn't necessarily shared outside of it unless you do the repost thing. That's kind of how it would work with Zepeto, but it'd be really great if all those rich features that we get with Zepeto could be extended to other platforms.

And I mean, there's already the platform fatigue of having to keep up with five or more social media platforms. So auto captioning for Instagram would be great, or having an experience in Zepeto and then moving that on to what I wanna post on Twitter. That would just make the process so much easier.

Leah: The full integration of that might take some time…

Angelica: Mm-hmm

Leah: since there are more things to consider such as data privacy.

Angelica: Yep.

Leah: But we might say it's coming faster in APAC. If one day the metaverse platform is integrated into the Super Apps, just imagine: by then it would be truly one ecosystem.

Angelica: Exactly. It'd be a really powerful way to have things all within one place. Meta has tried with this “connecting what you do virtually and connecting it to other social media platforms” specifically within its own ecosystem of Facebook, but it's had mixed success. There's just not as much of, “Okay. I'm posting what I'm doing in VR to Facebook.” There's not as much of that traction happening as with going in Zepeto, having this experience, posting it, and people randomly show up because of the social stuff. You could see that immediate interaction. It'd be really great to see this integration outside of just Zepeto social into other social media experiences to really expand its reach. Also particularly because of the virtual influencer aspect of things. Just imagine having this facial mocap that you do within Zepeto and that livestream could go to Instagram, Facebook, and multiple platforms at once. That would really increase the visibility of that virtual influencer and the social clout.

So we're getting towards the end. Let's go ahead and think about what are some concrete takeaways that the audience can implement and use within their daily lives, as they're considering Zepeto. And then also just in general, the APAC trends that we're seeing here.

Something that I think of is: gaming and social media don't have to be separate anymore. Like when playing online experiences, traditionally, it'll be either playing Warhammer on Steam and having the voice app within there, or opening up Roblox and a Discord channel. But those are two separate platforms: one to connect and one to play. With Zepeto, it's really inspiring to think about how those interactions can be in one. And not just voice, but the social aspect and everything that comes with that. It's really the next level of getting closer to what we say the metaverse can be. And Zepeto is really inspiring in that way.

Leah: Yeah. To your point about this social aspect: Zepeto is actually what we need right now. We can't expect everyone to dive directly into the virtual world without connecting it with their social life in the real world. And Zepeto has this potential to bridge the gap between our social life in the physical world and the digital one.

Angelica: Yeah, Zepeto is a sleeping giant of sorts where it could have huge potential for a global audience. It is accessible in other countries outside of the APAC region, like we mentioned, but there's just not as much buzz around it as the platform definitely deserves. There are platforms that have tried to have the integration that Zepeto has across those three categories of virtual influencers, social media and experiences. But those other platforms just haven't succeeded at it as much as Zepeto has.

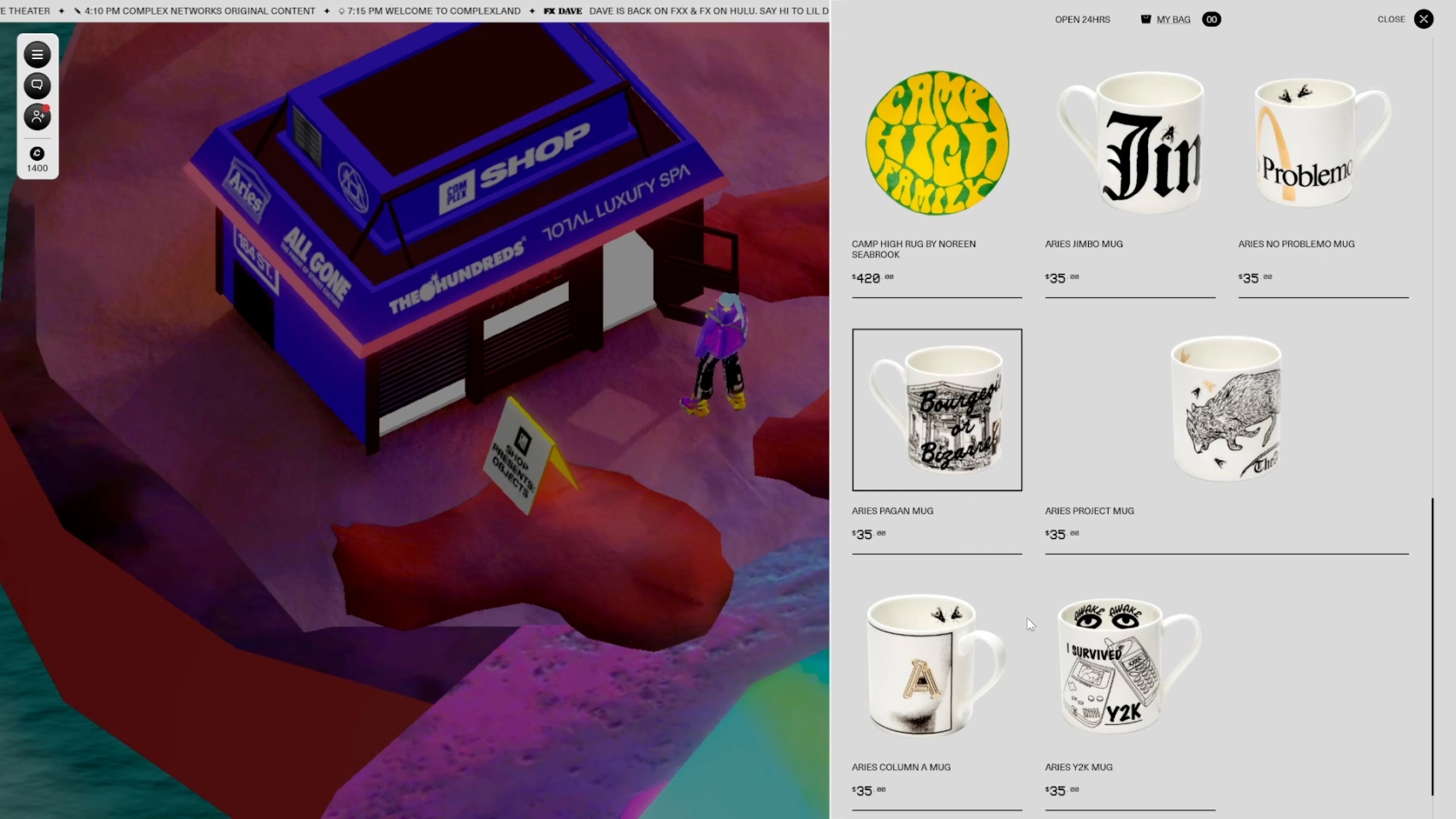

So like Decentraland, Sandbox, Roblox, Fortnite, Horizon Worlds…all those platforms have tried to get this integration, but it just has not been as successful. Something also to keep in mind, and why Zepeto is just a really great platform, is that there have been brand activations that have happened on Zepeto already.

There have been concerts and virtual representations of BTS or even Selena Gomez going into those concerts. Like what we applauded a few years ago with the Fortnite concert, Zepeto has already been within those realms. There's a Samsung activation. There's a Honda activation, and a Gucci one as well.

And those are definitely getting a lot of traction and movement with people who are actually part of those experiences. And because it's integrated within its own social media ecosystem with purchasing items with virtual influencers, there's just so much potential for when brands are getting into these spaces, the type of impact and interaction they can have with consumers.

Leah: Yeah. The last thing we learned from this region: currently the West and the East still feel very distinct technologically and also culturally, with some crossover happening, but it's not as much as we would like to see. Things like virtual influencers, technology in retail, Super Apps, and increased use of digital payments have been used to deepen connections with consumers and enhance ease of use. It would be amazing to see that more widely integrated within the West.

Angelica: Yeah, exactly. There's a lot of cultural and technological crossover to Eastern countries in that, you know, US culture and colloquialisms always make their way around the globe. And it would be really great to see the really impactful technological and cultural innovations that are happening within the East make their way more holistically towards the West. Not just here or there, but the way Google has been embraced within APAC. It'd be great to have some of those APAC platforms integrated in the West. There's a lot that each can learn from the other and build on. It's not necessarily “let's distinguish the West from the East,” because we talked about that quite a bit, but “what is the way that globally we can improve experiences for consumers?” And there are a lot of ways technology can empower people to have those deeper connections, and brands can also be a part of that story.

Leah: Yeah.

Angelica: So that's a wrap! Thanks everybody for listening to the Scrap The Manual Podcast. Be sure to check out our blog post for more information, references, and also a link to our prototype. Remember to check out the Netherlands references and also the hidden fifth world within that prototype. If you like what you hear, please subscribe and share! You can find us on Spotify, Apple Podcasts and wherever you get your podcasts.

Leah: If you want to suggest topics, segment ideas, or general feedback, feel free to email us at scrapthemanual@mediamonks.com. If you want to partner with Media.Monks Labs, feel free to reach out to us at that same email address.

Angelica: Until next time!

Leah: Bye.